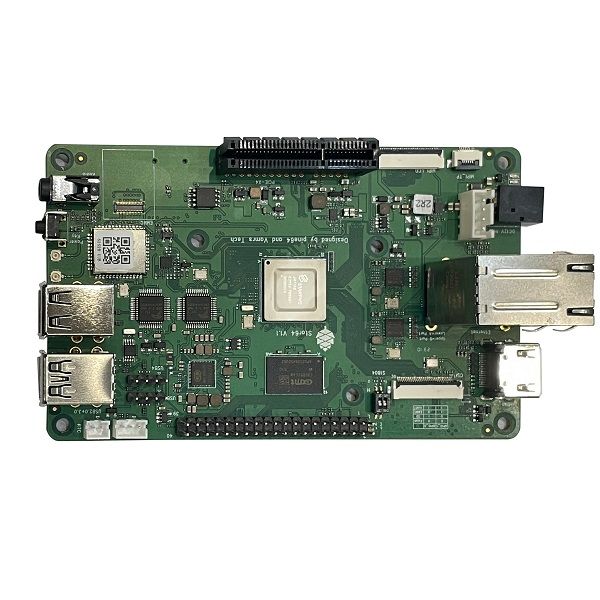

FAN control OMV Auyfan 0.10.12: gitlab-ci-linux-build-184, Kernel 5.6

-

Hey,

I'll write in English; I hope that's okay. Thanks for a very informative forum.

My question: is it possible to control the fan on the newest OMV ayufan image 0.10.12 (gitlab-ci-linux-build-184, kernel 5.6)?

The installation was very easy, with no problems at all. The only problem is that I tried several different commands to reach the fan but could not get it going. Does anybody know how? Thanks. Best regards.

Soeren -

Hi Soeren,

I have this running successfully:

GitHub - tuxd3v/ats

Kind Regards

Martin -

Thanks, I will try the fan tool again. I'm almost sure I already tried it without luck. Have you edited anything in the fan tool's config?

-

Hi,

I only changed two parameters.

I have two rockpro64 boards; currently one has a small fan and the other one has a large fan.

Therefore I changed PROFILE_NR accordingly.

I also set ALWAYS_ON to true on the board with the large fan, but I don't see a difference: the fan still switches on and off. Maybe I misinterpreted the parameter.

I also just noticed that the CTL settings in the version on GitHub have changed slightly, but I think this only improves detection depending on the kernel settings.

M

-

With the new OMV kernel 5.6 image from ayufan, I can't get the fan to spin. If it spins at all, it runs really slowly, with no sound from the fan.

With the fan tool, the master installation "failed", while the release installation reported "active".

I have installed a 92 mm fan inside the NAS case, and it runs on Armbian with kernel 5.4.32. I will open up the case later today to be sure. Thanks.

-

Hi,

I have now found the fan. Its control file is here:

nano /sys/devices/platform/pwm-fan/hwmon/hwmon3/pwm1

It was at 0, and it can be set to any value up to 255.

Best Regards.
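A minimal Python sketch of the same manual control, assuming the hwmon3 path from the post above (the hwmonN index can differ between kernels) and the 0 to 255 range it describes. `set_fan_pwm` and `get_fan_pwm` are hypothetical helper names, not part of ATS or OMV. Writing to sysfs needs root, and sysfs writes do not survive a reboot.

```python
from pathlib import Path

# Path reported in this thread for the ayufan 5.6 kernel (assumption: adjust hwmonN for your kernel).
PWM_PATH = Path("/sys/devices/platform/pwm-fan/hwmon/hwmon3/pwm1")


def set_fan_pwm(value: int, pwm_path: Path = PWM_PATH) -> int:
    """Write a duty cycle (0 = off, 255 = full speed) to pwm1 and return the value written."""
    # Clamp to the 0..255 range that pwm1 accepts.
    duty = max(0, min(255, int(value)))
    # Same effect as: echo <duty> > pwm1
    pwm_path.write_text(f"{duty}\n")
    return duty


def get_fan_pwm(pwm_path: Path = PWM_PATH) -> int:
    """Read the current duty cycle back from pwm1."""
    return int(pwm_path.read_text().strip())
```

For example, `set_fan_pwm(255)` run as root would set the fan to full speed, which is one way to get the "always on" behaviour wanted in this thread, as long as nothing else (such as a running ATS service) rewrites pwm1 afterwards.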

-

For me, the tool works on all images up to kernel 5.4 or so. But I'm at the finish line now; I just wanted the fan to be always on. I wanted to share this in case someone else runs into the same problem. Thanks.

-

Helpful thread!

But ATS doesn't work for me on kernel 5.6 with the ayufan release. Only this command works:

nano /sys/devices/platform/pwm-fan/hwmon/hwmon3/pwm1

Thanks @soerenderfor for the hint.

-

OK, is this the problem in ATS?

-- FAN Control [ String ]
PWM_CTL = {
    "/sys/class/hwmon/hwmon0/pwm1",
    "/sys/devices/platform/pwm-fan/hwmon/hwmon0/pwm1",
    "/sys/devices/platform/pwm-fan/hwmon/hwmon1/pwm1"
},

None of these entries points to hwmon3, where the fan shows up on the ayufan 5.6 kernel.

@FrankM, did you try to fix the ATS tool? If so, does it work?

Best Regards.
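To check which of the three PWM_CTL candidates quoted above actually exist on a given system, here is a small Python sketch. It is a diagnostic helper written for illustration (`first_existing_pwm` is a hypothetical name), not a description of how ATS itself resolves PWM_CTL; the optional `root` argument only exists so the check can also be run against a test directory.

```python
from pathlib import Path
from typing import Iterable, Optional

# The three PWM_CTL entries quoted in the post above.
ATS_PWM_CTL = [
    "/sys/class/hwmon/hwmon0/pwm1",
    "/sys/devices/platform/pwm-fan/hwmon/hwmon0/pwm1",
    "/sys/devices/platform/pwm-fan/hwmon/hwmon1/pwm1",
]


def first_existing_pwm(candidates: Iterable[str], root: str = "/") -> Optional[Path]:
    """Return the first candidate path that exists under root, or None if none match."""
    base = Path(root)
    for candidate in candidates:
        # Strip the leading "/" so the path can be re-rooted; root="/" checks the live system.
        path = base / candidate.lstrip("/")
        if path.exists():
            return path
    return None
```

Run as `first_existing_pwm(ATS_PWM_CTL)` on the board itself. If it returns None while /sys/devices/platform/pwm-fan/hwmon/hwmon3/pwm1 exists, the list simply does not cover the path the kernel uses.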

-

Hi,

Since I'm currently changing my rockpro64 setup, I came across this.

With the kernel from ayufan, you need to set PWM_CTL to

/sys/devices/platform/pwm-fan/hwmon/hwmon3/pwm1

For my self-compiled kernel, I need

/sys/devices/platform/pwm-fan/hwmon/hwmon0/pwm1

But I only got it working with a single entry for PWM_CTL, e.g.

PWM_CTL = "/sys/devices/platform/pwm-fan/hwmon/hwmon0/pwm1",

After that, you need to restart ats:

sudo systemctl stop ats
sudo systemctl start ats

Initially, the fan should spin up immediately for a short time.

In case the path is different again on your kernel, you can find the right one using this command:

sudo find /sys -name pwm1 | grep hwmon

So far I'm not sure which kernel parameter or module changes this.

Martin
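For reference, a Python sketch that performs the same search as `sudo find /sys -name pwm1 | grep hwmon`, assuming read access to /sys. `find_pwm1_under_hwmon` is a hypothetical helper name. Like find's default, it does not descend into symlinked directories, so the /sys/class/hwmon links are skipped and the real device paths are reported; unlike find, it only considers files named pwm1, not directories.

```python
import os
from typing import List


def find_pwm1_under_hwmon(root: str = "/sys") -> List[str]:
    """List every pwm1 file below root whose full path contains 'hwmon'."""
    matches = []
    # followlinks=False mirrors find's default of not following symlinked directories.
    for dirpath, _dirnames, filenames in os.walk(root, followlinks=False):
        if "pwm1" in filenames:
            path = os.path.join(dirpath, "pwm1")
            # Equivalent of piping the find output through `grep hwmon`.
            if "hwmon" in path:
                matches.append(path)
    return sorted(matches)
```

Each path it returns is a candidate for the single PWM_CTL entry described in the post above.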