Kernel 4.4.x

4.4.154-1126-rockchip-ayufan released

ayufan: defconfig: use CONFIG_HZ=250 (20dac551)

Change-Id: I8009db93ee7c5af5e7804a004ab03d0ba97d20e2
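To make the effect of CONFIG_HZ=250 concrete, here is a minimal arithmetic sketch. It only illustrates the relationship between the tick rate and the tick length; the helper names `tick_period_ms` and `ms_to_ticks` are made up for this example and are not kernel functions, and rounding up to whole ticks is a choice made here.

```python
def tick_period_ms(hz: int) -> float:
    """Length of one timer tick in milliseconds for a given CONFIG_HZ value."""
    return 1000.0 / hz


def ms_to_ticks(ms: int, hz: int) -> int:
    """Convert a duration in milliseconds to whole ticks, rounding up.

    Hypothetical helper for illustration only (integer ceiling division).
    """
    return (ms * hz + 999) // 1000


if __name__ == "__main__":
    hz = 250  # value from the commit title above
    print(f"CONFIG_HZ={hz}: one tick lasts {tick_period_ms(hz)} ms")
    print(f"10 ms correspond to {ms_to_ticks(10, hz)} ticks")
```

With 250 ticks per second, one tick lasts 4 ms, so timeouts shorter than that cannot be resolved any finer by tick-based timers.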

4.4.154-1130-rockchip-ayufan released

dts: rockpro64: Enabled sdio0 and defer it until pcie is ready

Max defer time is 2000ms which should be enough for pcie to get initialized. This is a workaround for issue with unstable pcie training if both sdio0 and pcie are enabled in rockpro64 device tree.
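The idea of "defer until ready, but at most 2000 ms" can be sketched as a generic wait loop with a deadline. This is not the kernel code from the commit, only a user-space illustration of the pattern; the function `wait_until_ready`, the `is_ready` callback and the 50 ms poll interval are assumptions made for this example. What the real driver does once the maximum defer time has elapsed is not stated in the commit message; the sketch simply reports whether the dependency became ready.

```python
import time
from typing import Callable


def wait_until_ready(
    is_ready: Callable[[], bool],
    max_defer_ms: int = 2000,   # maximum defer time named in the commit message
    poll_ms: int = 50,          # assumed poll interval, not from the commit
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll is_ready() until it returns True or max_defer_ms has elapsed.

    Returns True if the dependency became ready in time, otherwise the
    result of one final check after the deadline.
    """
    deadline = clock() + max_defer_ms / 1000.0
    while clock() < deadline:
        if is_ready():
            return True
        sleep(poll_ms / 1000.0)
    return is_ready()


if __name__ == "__main__":
    start = time.monotonic()
    # Stand-in for "pcie is initialized": becomes true after about 0.3 s.
    pcie_ready = lambda: time.monotonic() - start > 0.3
    print("ready in time:", wait_until_ready(pcie_ready))
```

The clock and sleep functions are parameters so that the loop can be tested without actually waiting.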

- 4.4.167-1146-rockchip-ayufan

- 4.4.167-1148-rockchip-ayufan

- 4.4.167-1151-rockchip-ayufan

- 4.4.167-1153-rockchip-ayufan

Changes:

- ayufan: rockchip-vpu: fix compilation errors

- ayufan: dts: rockpro64: fix es8316 support

- ayufan: dts: rockpro64: add missing gpu_power_model for MALI

- ayufan: dts: pinebook-pro: fix support for sound-out

Ayufan is preparing the images for the upcoming Pinebook Pro.

- 4.4.167-1155-rockchip-ayufan

- 4.4.167-1157-rockchip-ayufan

- 4.4.167-1159-rockchip-ayufan

- 4.4.167-1161-rockchip-ayufan

Changes:

- ayufan: dts: pinebook-pro: change bt/audio supply according to Android changes

- ayufan: dts: rock64: remove unused ir-receiver

- ayufan: dts: pinebook-pro: fix display port output

4.4.167-1169-rockchip-ayufan released

- nuumio: dts/c: rockpro64: add pcie scan sleep and enable it for rockpro64 (#45)

4.4.167-1175-rockchip-ayufan released

- Old driver is rockchip-drm-rga

-

-

linux-mainline-u-boot

Angeheftet Images -

ROCKPro64 - Anpassen resize_rootfs.sh

Angeheftet ROCKPro64 -

-

-

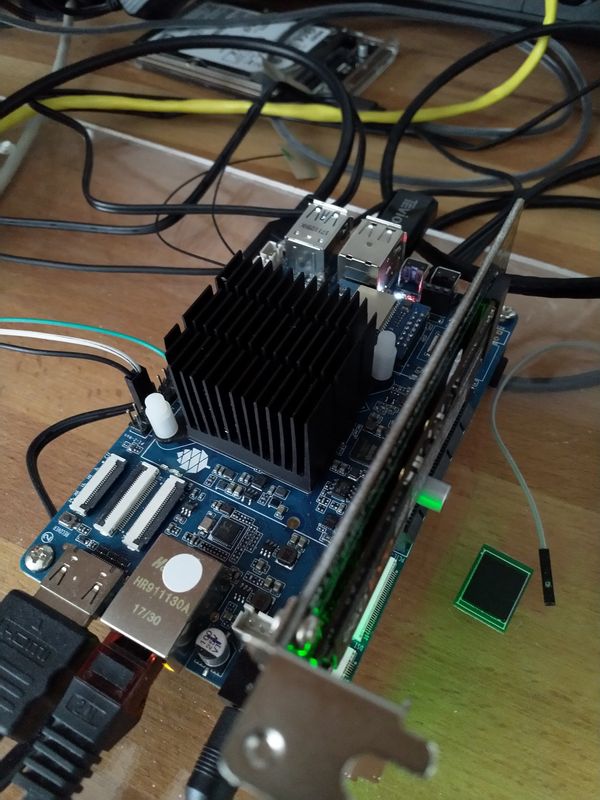

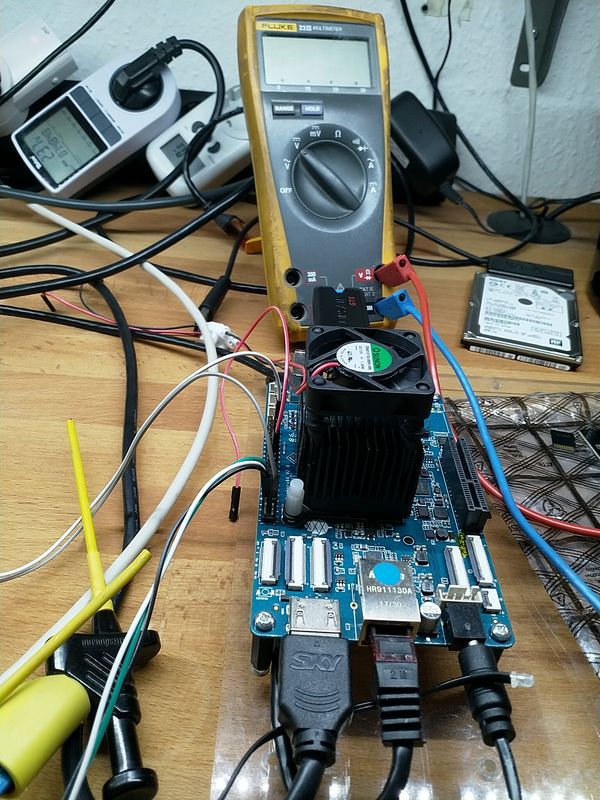

NAS Gehäuse für den ROCKPro64

Verschoben Hardware -

-