Ansible - Proxmox Server bearbeiten

-

Aktuell habe ich durch eine Erkrankung etwas mehr Zeit für die Konsole, sodass ich angefangen habe, die Setups aller meiner Server zu vereinheitlichen. Anfangen wollte ich dazu mit meinem lokalen Proxmox. Dabei kam mir wieder in den Sinn, das ich auch noch ein Debian Bookworm 12 Template brauchte.

Also, das aktuelle Debian Image heruntergeladen. Mit diesem dann einen Debian Bookworm 12 Server aufgesetzt. Jetzt brauchte ich zu diesem Zeitpunkt einen Zugang mit SSH-Key (für mein Semaphore).

Also habe ich schon mal zwei SSH-Keys eingefügt. Einmal meinen Haupt-PC und einmal den Semaphore Server. Danach den Server in ein Template umgewandelt.

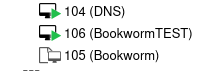

105 ist das Template, 106 ein damit erstellter Test-Server. Ok, das läuft wie erwartet, jetzt möchte ich den Server durch konfigurieren, so wie ich das gerne haben möchte. Da es hier um Ansible geht, brauche ich dazu ein Playbook.

--- ############################################### # Playbook for my Proxmox VMs ############################################### - name: My task hosts: proxmox_test tasks: ##################### # Update && Upgrade installed packages and install a set of base software ##################### - name: Update apt package cache. ansible.builtin.apt: update_cache: yes cache_valid_time: 600 - name: Upgrade installed apt packages. ansible.builtin.apt: upgrade: 'yes' - name: Ensure that a base set of software packages are installed. ansible.builtin.apt: pkg: - crowdsec - crowdsec-firewall-bouncer - duf - htop - needrestart - psmisc - python3-openssl - ufw state: latest ##################### # Setup UFW ##################### - name: Enable UFW community.general.ufw: state: enabled - name: Set policy IN community.general.ufw: direction: incoming policy: deny - name: Set policy OUT community.general.ufw: direction: outgoing policy: allow - name: Set logging community.general.ufw: logging: 'on' - name: Allow OpenSSH rule community.general.ufw: rule: allow name: OpenSSH - name: Allow HTTP rule community.general.ufw: rule: allow port: 80 proto: tcp - name: Allow HTTPS rule community.general.ufw: rule: allow port: 443 proto: tcp ##################### # Setup CrowdSEC ##################### - name: Add one line to crowdsec config.yaml ansible.builtin.lineinfile: path: /etc/crowdsec/config.yaml #search_string: '<FilesMatch ".php[45]?$">' insertafter: '^db_config:' line: ' use_wal: true' ##################### # Generate Self-Signed SSL Certificate # for this we need python3-openssl on the client ##################### - name: Create a new directory www at given path ansible.builtin.file: path: /etc/ssl/self-signed_ssl/ state: directory mode: '0755' - name: Create private key (RSA, 4096 bits) community.crypto.openssl_privatekey: path: /etc/ssl/self-signed_ssl/privkey.pem - name: Create simple self-signed certificate community.crypto.x509_certificate: path: /etc/ssl/self-signed_ssl/fullchain.pem privatekey_path: /etc/ssl/self-signed_ssl/privkey.pem provider: selfsigned - name: Check if the private key exists stat: path: /etc/ssl/self-signed_ssl/privkey.pem register: privkey_stat - name: Renew self-signed certificate community.crypto.x509_certificate: path: /etc/ssl/self-signed_ssl/fullchain.pem privatekey_path: /etc/ssl/self-signed_ssl/privkey.pem provider: selfsigned when: privkey_stat.stat.exists and privkey_stat.stat.size > 0 ##################### # Check for new kernel and reboot ##################### - name: Check if a new kernel is available ansible.builtin.command: needrestart -k -p > /dev/null; echo $? register: result ignore_errors: yes - name: Restart the server if new kernel is available ansible.builtin.command: reboot when: result.rc == 2 async: 1 poll: 0 - name: Wait for the reboot and reconnect wait_for: port: 22 host: '{{ (ansible_ssh_host|default(ansible_host))|default(inventory_hostname) }}' search_regex: OpenSSH delay: 10 timeout: 60 connection: local - name: Check the Uptime of the servers shell: "uptime" register: Uptime - debug: var=Uptime.stdoutIn dem Inventory muss der Server drin sein, den man bearbeiten möchte. Also, so was

[proxmox_test] 192.168.3.19 # BookwormTESTPlaybook

- Wir aktualisieren alle Pakete

- Wir installieren, von mir festgelegte Pakete

- Konfiguration UFW

- Konfiguration CrowdSec

- Konfiguration selbst signiertes Zertifikat

- Kontrolle ob neuer Kernel vorhanden ist, wenn ja Reboot

- Uptime - Kontrolle ob Server erfolgreich gestartet ist

Danach ist der Server so, wie ich ihn gerne hätte.

- Aktuell

- ufw - Port 22, 80 und 443 auf (SSH, HTTP & HTTPS)

- CrowdSec als fail2ban Ersatz

- Selbst signierte Zertifikate benutze ich nur für lokale Server

Die erfolgreiche Ausgabe in Semaphore, sieht so aus.

12:38:16 PM Task 384 added to queue 12:38:21 PM Preparing: 384 12:38:21 PM Prepare TaskRunner with template: Proxmox configure Proxmox Template 12:38:22 PM Von https://gitlab.com/Bullet64/playbook 12:38:22 PM e7c8531..c547cfc master -> origin/master 12:38:22 PM Updating Repository https://gitlab.com/Bullet64/playbook.git 12:38:23 PM Von https://gitlab.com/Bullet64/playbook 12:38:23 PM * branch master -> FETCH_HEAD 12:38:23 PM Aktualisiere e7c8531..c547cfc 12:38:23 PM Fast-forward 12:38:23 PM proxmox_template_configuration.yml | 5 +++++ 12:38:23 PM 1 file changed, 5 insertions(+) 12:38:23 PM Get current commit hash 12:38:23 PM Get current commit message 12:38:23 PM installing static inventory 12:38:23 PM No collections/requirements.yml file found. Skip galaxy install process. 12:38:23 PM No roles/requirements.yml file found. Skip galaxy install process. 12:38:26 PM Started: 384 12:38:26 PM Run TaskRunner with template: Proxmox configure Proxmox Template 12:38:26 PM 12:38:26 PM PLAY [My task] ***************************************************************** 12:38:26 PM 12:38:26 PM TASK [Gathering Facts] ********************************************************* 12:38:28 PM ok: [192.168.3.19] 12:38:28 PM 12:38:28 PM TASK [Update apt package cache.] *********************************************** 12:38:29 PM ok: [192.168.3.19] 12:38:29 PM 12:38:29 PM TASK [Upgrade installed apt packages.] ***************************************** 12:38:30 PM ok: [192.168.3.19] 12:38:30 PM 12:38:30 PM TASK [Ensure that a base set of software packages are installed.] ************** 12:38:31 PM ok: [192.168.3.19] 12:38:31 PM 12:38:31 PM TASK [Enable UFW] ************************************************************** 12:38:32 PM ok: [192.168.3.19] 12:38:32 PM 12:38:32 PM TASK [Set policy IN] *********************************************************** 12:38:34 PM ok: [192.168.3.19] 12:38:34 PM 12:38:34 PM TASK [Set policy OUT] ********************************************************** 12:38:36 PM ok: [192.168.3.19] 12:38:36 PM 12:38:36 PM TASK [Set logging] ************************************************************* 12:38:37 PM ok: [192.168.3.19] 12:38:37 PM 12:38:37 PM TASK [Allow OpenSSH rule] ****************************************************** 12:38:37 PM ok: [192.168.3.19] 12:38:37 PM 12:38:37 PM TASK [Allow HTTP rule] ********************************************************* 12:38:38 PM ok: [192.168.3.19] 12:38:38 PM 12:38:38 PM TASK [Allow HTTPS rule] ******************************************************** 12:38:38 PM ok: [192.168.3.19] 12:38:38 PM 12:38:38 PM TASK [Add one line to crowdsec config.yaml] ************************************ 12:38:39 PM ok: [192.168.3.19] 12:38:39 PM 12:38:39 PM TASK [Create a new directory www at given path] ******************************** 12:38:39 PM ok: [192.168.3.19] 12:38:39 PM 12:38:39 PM TASK [Create private key (RSA, 4096 bits)] ************************************* 12:38:41 PM ok: [192.168.3.19] 12:38:41 PM 12:38:41 PM TASK [Create simple self-signed certificate] *********************************** 12:38:43 PM ok: [192.168.3.19] 12:38:43 PM 12:38:43 PM TASK [Check if the private key exists] ***************************************** 12:38:43 PM ok: [192.168.3.19] 12:38:43 PM 12:38:43 PM TASK [Renew self-signed certificate] ******************************************* 12:38:44 PM ok: [192.168.3.19] 12:38:44 PM 12:38:44 PM TASK [Check if a new kernel is available] ************************************** 12:38:44 PM changed: [192.168.3.19] 12:38:44 PM 12:38:44 PM TASK [Restart the server if new kernel is available] *************************** 12:38:44 PM skipping: [192.168.3.19] 12:38:44 PM 12:38:44 PM TASK [Wait for the reboot and reconnect] *************************************** 12:38:55 PM ok: [192.168.3.19] 12:38:55 PM 12:38:55 PM TASK [Check the Uptime of the servers] ***************************************** 12:38:55 PM changed: [192.168.3.19] 12:38:55 PM 12:38:55 PM TASK [debug] ******************************************************************* 12:38:55 PM ok: [192.168.3.19] => { 12:38:55 PM "Uptime.stdout": " 12:38:55 up 19 min, 2 users, load average: 0,84, 0,29, 0,10" 12:38:55 PM } 12:38:55 PM 12:38:55 PM PLAY RECAP ********************************************************************* 12:38:55 PM 192.168.3.19 : ok=21 changed=2 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0 12:38:55 PM

-

-

-

-

-

Rest-Server

Verschoben Restic -

-

-