Images 0.7.x

-

wrote on 6 July 2018, 04:51, last edited by FrankM

Kamil is tinkering again.

Commits · 0.7.1 · ayufan-repos / rock64 / linux-build · GitLab

0.7.x

- 0.7.0: Introduces heavy refactor splitting all components into separate repos, and separate independent releases (u-boot, kernel, kernel-mainline, compatibility package),

- 0.7.0: Dry run everything,

I'm curious about the result. At the moment the script aborts with errors, but Kamil knows that all too well.

-

wrote on 6 July 2018, 17:41, last edited by

Nothing new yet, but here you can already get a glimpse of what he is doing.

- 0.7.3: Speed-up build process,

- 0.7.2: Pin packages,

- 0.7.1: Use GitLab CI for releasing all images,

- 0.7.0: Introduces heavy refactor splitting all components into separate repos, and separate independent releases (u-boot, kernel, kernel-mainline, compatibility package),

LATEST_UBOOT_VERSION=2017.09-rockchip-ayufan-1009-g501b20dc14

LATEST_KERNEL_VERSION=4.4.132-1042-rockchip-ayufan-g521c26bc8ee0

LATEST_PACKAGE_VERSION=0.7-5

Source: https://gitlab.com/ayufan-repos/rock64/linux-build/commit/b557556d38760cfd1f7e9a818e7a51c873520161
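The LATEST_* lines above are plain KEY=VALUE version pins. As a minimal sketch (not taken from Kamil's build scripts), the following Python reads such lines and extracts the abbreviated commit hash, assuming the trailing "-g<hex>" suffix follows the `git describe` convention. The names `parse_pins`, `commit_of` and `PINS` are hypothetical.

```python
from __future__ import annotations

import re

# Version pins copied verbatim from the post above.
PINS = """\
LATEST_UBOOT_VERSION=2017.09-rockchip-ayufan-1009-g501b20dc14
LATEST_KERNEL_VERSION=4.4.132-1042-rockchip-ayufan-g521c26bc8ee0
LATEST_PACKAGE_VERSION=0.7-5
"""

# Assumption: a trailing "-g<hex>" is a git-describe-style abbreviated commit hash.
GIT_SUFFIX = re.compile(r"-g([0-9a-f]{7,40})$")


def parse_pins(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines into a dict, skipping blank lines and comments."""
    pins: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # ignore lines without "="
            pins[key.strip()] = value.strip()
    return pins


def commit_of(version: str) -> str | None:
    """Return the hash from a '-g<hex>' suffix, or None if there is none."""
    match = GIT_SUFFIX.search(version)
    return match.group(1) if match else None


if __name__ == "__main__":
    for key, value in parse_pins(PINS).items():
        print(f"{key}: {value} (commit: {commit_of(value)})")
```

The package version 0.7-5 carries no hash, so `commit_of` returns None for it.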

-

wrote on 6 July 2018, 21:34, last edited by FrankM on 7 July 2018, 09:27

If I've followed this correctly, Kamil spent two whole days rebuilding the build process. Everything now apparently runs on GitLab, but the releases can still be found here. How it all fits together, and with what, I can't tell you; I'm certainly no expert in this area.

The good news: there is a new release, 0.7.2.

The bad news: it doesn't boot. Something seems to be wrong with u-boot.

Kamil wrote:

(23:24:08) ayufan1: I will fix it later

(23:24:15) ayufan1: I basically changed 80% of the build process

(23:24:21) ayufan1: so I expect something not to work

So let's wait...

And THANKS for the work, Kamil!!

-

wrote on 7 July 2018, 07:25, last edited by FrankM on 7 July 2018, 09:28

Late last night 0.7.3 arrived as well.

- 0.7.3: Fix generation of extlinux.conf (linux booting),

This fixes the boot problem, but the image is unstable. Kamil knows this, and I'm sure he'll keep working on it today.

A reminder of what currently works well:

- 0.6.58: install jenkins-linux-build-rock-64-271

- then upgrade to 4.18.0-rc3-1035-ayufan

By the way, the releases are still published on GitHub!

-

wrote on 7 July 2018, 08:47, last edited by

0.7.3 released

- 0.7.3: Fix generation of extlinux.conf (linux booting),

- 0.7.2: Pin packages,

- 0.7.2: Improve performance of build process,

- 0.7.1: Use GitLab CI for releasing all images,

- 0.7.0: Introduces heavy refactor splitting all components into separate repos, and separate independent releases (u-boot, kernel, kernel-mainline, compatibility package),

- 0.7.0: Dry run everything,

Kamil has rebuilt his entire build process. This 0.7.3 version boots (but only without a PCIe NVMe card). I also noticed instability over the LAN interface (freezes). Not recommended at the moment.

-

wrote on 9 July 2018, 10:59, last edited by FrankM

0.7.4 released

- 0.7.4: Fix resize_rootfs.sh script to respect boot flags (fixes second boot problem introduced by 0.7.0),

- 0.7.4: Add rock(pro)64_erase_spi_flash.sh,

- 0.7.4: Fix cursor on desktop for rockpro64,

Only boots without a PCIe NVMe card! Kamil wants to fix that.

-

wrote on 16 July 2018, 21:06, last edited by FrankM

0.7.5 released

- 0.7.5: Various stability fixes for kernel and u-boot,

- 0.7.5: Added memtest to kernels and extlinux,

- 0.7.5: Show early boot log when booting kernels,

Quick test: it now boots with a PCIe NVMe card.

-

wrote on 17 July 2018, 20:24, last edited by

0.7.6 released

- 0.7.6: Change OPP's for Rock64 and RockPro64: ayufan-rock64/linux-kernel@4.4.132-1059-rockchip-ayufan...ayufan-rock64:4.4.132-1062-rockchip-ayufan,

This is supposed to improve stability, but unfortunately it doesn't. It's slowly getting frustrating that no release moves things forward.

-

wrote on 19 July 2018, 17:15, last edited by FrankM

0.7.7 released

- 0.7.7: Fix memory corruptions caused by Mali/Display subsystem (4.4),

- 0.7.7: Enable SDR104 mode for SD cards (this requires u-boot upgrade if booting from SD),

It's getting there: you can now boot dozens of times without problems. The only issue I found in a quick test is that detection of the NVMe card is hit-or-miss and rarely succeeds. Oddly, this is not a problem on mainline!?

Kamil has fixed this for 4.4 -> https://github.com/ayufan-rock64/linux-kernel/commit/bfb0d6c371d14b0d1fc60326b9bc84985a26f848?diff=unified

The kernel can be downloaded here -> https://github.com/ayufan-rock64/linux-kernel/releases/tag/4.4.132-1070-rockchip-ayufan

-

wrote on 21 July 2018, 21:40, last edited by FrankM

0.7.8 released

- 0.7.8: Improve eMMC compatibility on RockPro64,

- 0.7.8: Disable sdio (no wifi/bt) to fix pcie/nvme support on 4.4 for RockPro64,

- 0.7.8: Fix OMV builds (missing initrd.img),

- 0.7.8: Make all packages virtual, conflicting and replacing making possible to do linux-rock64/rockpro64 to replace basesystem,

(22:32:51) ayufan1: pushed one final release before vacations

(22:32:59) ayufan1: consider this one to be the future release

(22:33:15) ayufan1: if people do confirm that they do work on rockpro64 and rock64 I will make it finally stable

(22:33:21) ayufan1: it is probably the best support so far

(22:33:48) ayufan1: not yet the most performant (for rockpro64), I had to limit in bunch of places freqs, disable stuff, but it should be stable and support base ops

(22:34:01) ayufan1: but, first stability, then we gonna add extra stuff

Kamil is going on vacation, so we get a bit of free time too.

-

wrote on 26 July 2018, 14:27, last edited by

0.7.9 released

- 0.7.9: Fix upgrade problem (u-boot-* packages),

Kamil is on vacation.

OK, there were apparently a few minor problems upgrading the boards. Kamil quickly fixed them and published the 0.7.9 image.

-

wrote on 7 Oct 2018, 17:34, last edited by

0.7.10 released

- 0.7.10: Rebased rockchip and mainline kernels,

- 0.7.10: Support USB gadgets for rock/pro64,

- 0.7.10: Disable TX checksumming for RockPro64,

- 0.7.10: Improve FAN for RockPro64,

- 0.7.10: Improve sdmmc0 stability for Rock64,

- 0.7.10: Enable binfmt-misc,

- 0.7.10: Improve stability of PCIE for RockPro64,

- 0.7.10: Fix eMMC stability on RockPro64 mainline kernel,

The container images are missing because Kamil had a problem with them. That is also why this release is marked as a pre-release.

-

wrote on 8 Oct 2018, 15:03, last edited by

The container images for 0.7.10 are now ready too!

Releases · ayufan-rock64/linux-build

-

wrote on 25 Oct 2018, 17:48, last edited by FrankM

0.7.11 released

- 0.7.11: Rebased mainline kernel,

- 0.7.11: Run rockchip kernel at 250Hz to increase performance,

- 0.7.11: Add support for usb gadgets for rockchip,

- 0.7.11: Introduce change-default-kernel.sh script to easily switch between kernels,

-

wrote on 25 Feb 2019, 20:39, last edited by

0.7.12 released

- 0.7.12: Rebased mainline kernel,

- 0.7.12: Rockchip kernel has patches for enabling sdio0 and pcie concurrently,

- 0.7.12: A bunch of dependencies updates,

-

wrote on 1 March 2019, 18:08, last edited by FrankM on 3 Jan 2019, 19:09

I can't recommend 0.7.12. While installing a system with a PCIe NVMe SSD on a ROCKPro64 v2.0 with 4 GB RAM, I got the following error:

0.7.12_with_pcie_nvme_ssd - Pastebin.com

Afterwards the partition on the SSD was gone.

I'm now back on 0.7.11 with the last kernel that works, 4.4.154-1128-rockchip-ayufan.

Remember: whatever I mark with this symbol works.

-

wrote on 3 March 2019, 21:49, last edited by FrankM on 3 March 2019, 22:49

0.7.13 released

- 0.7.13: Enable support for RockPro64 WiFi/BT module,

- 0.7.13: Fix LXDE build: updated libdrm,

PCIe NVMe SSD & WiFi = Crash!

In a quick test on stretch minimal I got WiFi alone working, but it was unstable! All in all, there is still a lot of work to do. Only suitable for people who enjoy testing.

More tomorrow.

-

wrote on 6 March 2019, 17:32, last edited by

0.7.14 released

- 0.7.14: Update rockchip kernel to 4.4.167,

- 0.7.14: Update mainline kernel to 5.0,

Not yet tested.

-

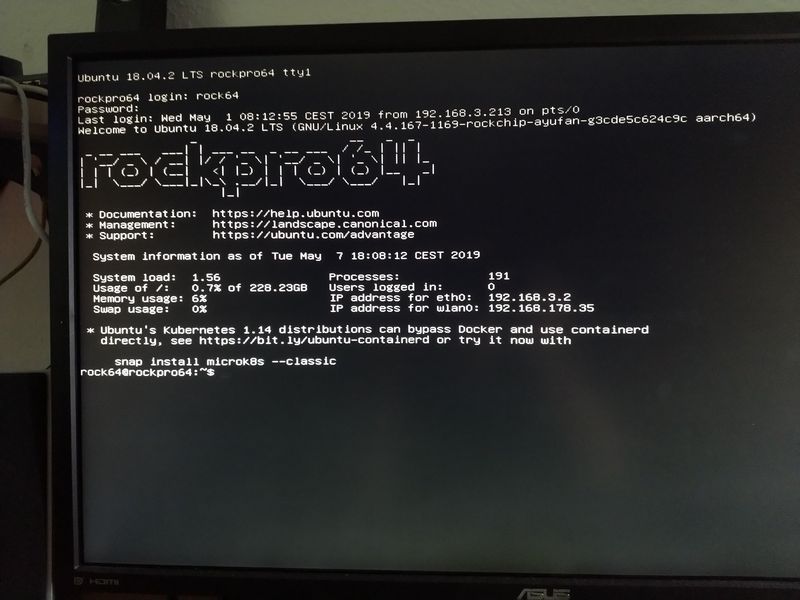

wrote on 7 March 2019, 19:31, last edited by FrankM on 3 Aug 2019, 15:25

0.7.14 is not recommended. (At least not for me!!)

What works?

- WiFi

What doesn't work?

- WiFi & PCIe NVMe SSD together: crash!

- PCIe NVMe SSD alone: crash!

To me, the supposed fix, which somehow works around the issue so that PCIe & WiFi run together, is not a solution. It currently causes more problems than before.

My idea would be two dts files: one with WiFi enabled for people who need it, and one with WiFi disabled. Hopefully someone finds a proper solution!!

I'm staying on 0.7.11: absolutely stable, PCIe stable, SATA works (with the right card), and personally I can do without WiFi!

I should mention, though, that there are apparently people for whom it works.

-

wrote on 8 March 2019, 14:24, last edited by

I flashed a 0.7.14 image and gave mainline a quick test. And now it gets confusing...

What works?

- PCIe (even with the WiFi module installed)

What doesn't work?

- WiFi