Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task

-

wrote on 17 June 2025, 23:01

So basically LLM is new eugenics, we turn people back into animals.

-

Same reason I don't use GPS. I want to actually learn, use my brain and grow as a human.

No one grows when the work is done for them.

wrote on 18 June 2025, 08:38

I use it to find a new route, then I try to drive back from memory. After that I should be able to find it on my own.

-

"ChatGPT, summarize this study for me"

wrote on 18 June 2025, 12:29

You suck at learning.

-

"EEG" on "AI"? I heard you like pseudo-science, so I put extra pseudo-science in your pseudo-science, dawg!!!

wrote on 18 June 2025, 12:35, last edited by monkdervierte@lemmy.zip

- ☐ you misunderstand EEG

- ☐ you confuse EEG with something else

-

wrote on 18 June 2025, 14:27

As an anecdote, I can attest to this personally. I stopped using AI assistance tools for my work because I noticed I'd stopped thinking about what I was doing.

-

Same reason I don't use GPS. I want to actually learn, use my brain and grow as a human.

No one grows when the work is done for them.

wrote on 18 June 2025, 14:48

Your brain doesn't have real-time traffic information.

-

"ChatGPT, summarize this study for me"

wrote on 18 June 2025, 15:41

ChatGPT is so hopelessly and blindly pro-science that it is incapable of addressing the study's shortcomings unless you actively rub its nose in them. And that would mean you know how the sausage is made well enough that you don't need a summary.

-

You would think the world right now is led by bright, clever, thoughtful individuals considering the AI panic lol

wrote on 18 June 2025, 15:44

We have the meanest dumbfucks instead; we're tired of playing on easy mode.

-

- ☐ you misunderstand EEG

- ☐ you confuse EEG with something else

wrote on 18 June 2025, 15:45

It's low-energy brain porn. NMR brain imaging, gray matter vs. white matter: all the same flavour of reading tea leaves.

-

As an anecdote I can attest to this personally. I stopped using AI assistance tools for my work bc I noticed I'd stopped thinking about what I was doing

wrote on 18 June 2025, 20:40

I can attest to the exact same thing.

-

It's low energy brain porn, nmr brain imaging, gray matter v white matter, all the same flavour of reading tea leaves.

wrote on 19 June 2025, 09:25

- ☒ you misunderstand EEG

-

With Gemini I have had several instances of the referenced article saying nothing like what the LLM summarized. I.e., the LLM tried to answer my question and threw up a website on the general topic with no bearing on the actual question.

wrote on 19 June 2025, 20:44

Same, especially when searching technical or niche topics. Since there aren't a ton of results specific to the topic, mostly semi-related results will appear in the first page or two of a regular (non-Gemini) Google search, just due to the higher popularity of those webpages compared to the relevant webpages. Even the relevant webpages will have lots of non-relevant or semi-relevant information surrounding the answer I'm looking for.

I don't know enough about it to be sure, but Gemini is probably just scraping a handful of websites on the first page, and since most of those are only semi-related, the resulting summary is a classic example of garbage in, garbage out. I also think there's probably something in the code that looks for information shared across multiple sources and prioritizes it over something that's only on one particular page (possibly the sole result with the information you need). Then it phrases the summary as a direct answer to your query, misrepresenting the actual information on the pages it scraped. At least Gemini gives sources, I guess.

The thing that gets on my nerves the most is how often I see people quote the summary as proof of something without checking the sources. It was bad before the rollout of Gemini, but at least back then Google was mostly scraping text and presenting it with little modification, along with a direct link to the webpage. Now, it's an LLM generating text phrased as a direct answer to a question (that was also AI-generated from your search query) using AI-summarized data points scraped from multiple webpages. It's obfuscating the source material further, but I also can't help but feel like it exposes a little of the behind-the-scenes fuckery Google has been doing for years before Gemini. How it bastardizes your query by interpreting it into a question, and then prioritizes homogeneous results that agree on the "answer" to your "question". For years they've been doing this to a certain extent, they just didn't share how they interpreted your query.

-

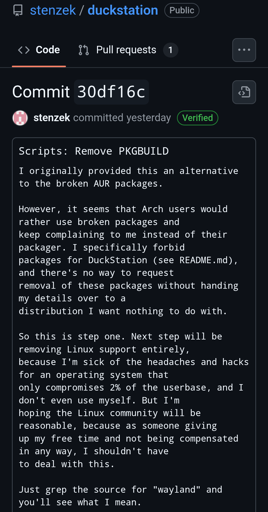

Duckstation(one of the most popular PS1 Emulators) dev plans on eventually dropping Linux support due to Linux users, especially Arch Linux users.

Technology37 vor 6 Tagenvor 12 Tagen 2

2

-

-

-

China’s Next-Gen TV Anchors Hustle for Jobs AI Already Does: The rise of AI in broadcasting is pushing China’s top journalism schools to rethink what skills still set human anchors apart.

Technology 8. Juli 2025, 09:36 1

1

-

-

-

Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task

Technology 16. Juni 2025, 11:21 1

1

-