Wikimedia Foundation's plans to introduce AI-generated article summaries to Wikipedia

-

It really depends on what you're looking at. The history section of some random town? Absolutely bog-standard prose. I'm probably missing lots of implications as I'm no historian, but at least I understand what's going on. The article on asymmetric relations? Good luck getting your mathematical literacy from Wikipedia: all the maths articles require you to already have it, and that's one of the easier ones. It's a fucking trivial concept, it has a glaringly obvious example... which is mentioned, even as the first example, but by that time most people's eyes have glazed over. "Asymmetric relations are a generalisation of the idea that if a < b, then it is necessarily false that a > b: If it is true that Bob is taller than Tom, then it is false that Tom is taller than Bob." Put that in the header.
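To make the "taller than" example concrete, here is a minimal sketch that checks asymmetry by brute force over a small set. The names `is_asymmetric`, `taller_than`, `at_least_as_tall` and the heights are made up for illustration; nothing here comes from the Wikipedia article.

```python
from itertools import product

def is_asymmetric(relation, elements):
    """True if there is no pair (a, b) with both a R b and b R a."""
    return not any(
        relation(a, b) and relation(b, a)
        for a, b in product(elements, repeat=2)
    )

# Made-up heights in centimetres, purely for illustration.
heights = {"Bob": 185, "Tom": 170, "Ann": 170}

def taller_than(a, b):
    return heights[a] > heights[b]

def at_least_as_tall(a, b):
    return heights[a] >= heights[b]

print(is_asymmetric(taller_than, heights))       # True
print(is_asymmetric(at_least_as_tall, heights))  # False
```

"Taller than" passes because it can never hold in both directions. "At least as tall as" fails because everyone is at least as tall as themselves, and Tom and Ann are each at least as tall as the other.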

Or let's take Big O notation. Short overview, formal definition, examples (not practical, but theoretical), then infinitesimal asymptotics, which is deep into the weeds. You know what that article actually needs? After the short overview, have an intuitive/hand-wavy definition, then two well-explained "find an entry in a telephone book" examples using two different algorithms, O(n) (naive) and O(log n) (divide and conquer), to demonstrate the kind of differences the notation is supposed to highlight. Then, with the basics out of the way, one more example to demonstrate that the notation doesn't care about multiplicative factors, i.e. what it (deliberately) sweeps under the rug. Short blurb about why that's warranted in practice. Then, directly afterwards, the "orders of common functions" table, but make sure to have examples that people actually might be acquainted with. Then talk about amortisation, and how you don't always use hash tables "because they're O(1) and trees are not". Then get into the formal stuff, that is, the current article.
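A rough sketch of what the two telephone-book examples could look like, counting comparisons instead of measuring time. The function names and the generated "phone book" are assumptions made up for this illustration, and the entries are assumed to be sorted.

```python
def linear_lookup(names, target):
    """Naive O(n): read the book from the first entry onwards."""
    steps = 0
    for index, name in enumerate(names):
        steps += 1
        if name == target:
            return index, steps
    return -1, steps

def binary_lookup(names, target):
    """Divide and conquer, O(log n): open the middle, discard half, repeat."""
    lo, hi = 0, len(names) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if names[mid] == target:
            return mid, steps
        if names[mid] < target:
            lo = mid + 1   # target is in the later half
        else:
            hi = mid - 1   # target is in the earlier half
    return -1, steps

# Hypothetical sorted phone book with one million entries.
phone_book = [f"name{i:07d}" for i in range(1_000_000)]
target = phone_book[-1]  # worst case for the naive scan

print(linear_lookup(phone_book, target))  # one million steps
print(binary_lookup(phone_book, target))  # at most 20 steps
```

Doubling the size of the book doubles the work for the naive scan but adds only one more step to the divide-and-conquer version, which is the kind of difference the notation is meant to highlight.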

And, no, LLMs will be of absolutely no help doing that. What Wikipedia needs is a didactics task force giving specialist editors a slap on the wrist because xkcd 2501.

As I said in another comment, I find that traditional encyclopedias fare better than Wikipedia in this respect. Wikipedians can muddle even comparatively simple topics, e.g. linguistic purism is described like this:

Linguistic purism or linguistic protectionism is a concept with two common meanings: one with respect to foreign languages and the other with respect to the internal variants of a language (dialects). The first meaning is the historical trend of the users of a language desiring to conserve intact the language's lexical structure of word families, in opposition to foreign influence which are considered 'impure'. The second meaning is the prescriptive[1] practice of determining and recognizing one linguistic variety (dialect) as being purer or of intrinsically higher quality than other related varieties.

This is so hopelessly awkward, confusing and inconsistent. (I hope I'll get around to fixing it, btw.) Compare it with how the linguist RL Trask defines it in his Language and Linguistics: The Key Concepts:

[Purism] The belief that words (and other linguistic features) of foreign origin are a kind of contamination sullying the purity of a language.

Bam! No LLMs were needed for this definition.

So here's my explanation for this problem: Wikipedians, specialist or non-specialist, like to collect and pile up a lot of cool info they've found in literature and online. When you have several such people working simultaneously, you easily end up with chaotic texts with neither head nor tail, which can always be expanded further and further with new stuff you've found, because it's just a webpage with no technical limits. When scholars write traditional encyclopedic texts, the limited space and singular viewpoint force them to write something much more coherent and readable.

-

It's kind of indirectly related, but adding the query parameter `udm=14` to the URL of your Google searches removes the AI summary at the top, and there are plugins for Firefox that do this for you. My hopes for this WM project are that similar plugins will be possible for Wikipedia.

The annoying thing about these summaries is that even for someone who cares about the truth and about gathering actual information, rather than the fancy autocomplete word salad that LLMs generate, it is easy to "fall for it" and end up reading the LLM summary. Usually I catch myself, but I often end up wasting some time reading the summary. Recently the non-information was so egregiously wrong (it called a certain city in Israel non-apartheid) that I ended up installing the udm=14 plugin.
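For anyone who wants to script the `udm=14` trick mentioned above rather than install a plugin, here is a minimal sketch using only Python's standard library. The helper name `with_udm14` is made up for illustration, and this is not how any particular plugin works. It assumes each query key appears only once, since repeated keys would be collapsed by the `dict`.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

def with_udm14(url):
    """Return the same URL with the udm=14 query parameter set."""
    parts = urlparse(url)
    # Keep the existing parameters and add (or overwrite) udm.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query["udm"] = "14"
    return urlunparse(parts._replace(query=urlencode(query)))

print(with_udm14("https://www.google.com/search?q=big+o+notation"))
# https://www.google.com/search?q=big+o+notation&udm=14
```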

In general, I think the only use cases for fancy autocomplete are where you have a way to verify the answer. For example, if you need to write an email and can't quite find the words, if an LLM generates something, you will be able to tell whether it conveys what you're trying to say by reading it. Or in case of writing code, if you've written a bunch of tests beforehand expressing what the code needs to do, you can run those on the code the LLM generates and see if it works (if there's a Dijkstra quote that comes to your mind reading this: high five, I'm thinking the same thing).

I think it can be argued that Wikipedia articles satisfy this criterion. All you need to do to verify the summary is read the article. Will people do this? I can only speak for myself, and I know that, despite my best intentions, sometimes I won't. If that's anything to go by, I think these summaries will make the world a worse place.

Which Dijkstra quote?

-

Et tu, Wikipedia?

My god, why does every damn piece of text suddenly need to be summarized by AI? It's completely insane to me. I want to read articles, not their summaries in 3 bullet points. I want to read books, not cliff notes, I want to read what people write to me in their emails instead of AI slop. Not everything needs to be a fucking summary!

It seriously feels like the whole damn world is going crazy, which means it's probably me...

Then skip the AI summary.

-

AI threads on lemmy are always such a disappointment.

It's ironic that people put so little thought into understanding this and complain about "AI slop". The slop was in your heads all along.

To think that more accessibility for a project that is all about sharing information with people to whom information is least accessible is a bad thing is just an incredible lack of awareness.

It's literally the opposite of everything people might hate AI for:

- RAG is very good and accurate these days and doesn't invent stuff, especially for short content like wiki articles. I work with RAG almost every day and have never seen it hallucinate with big models.

- it's open and not run by "big scary tech"

- it's free for all and would save millions of editor hours and allow more accuracy and complexity in the articles themselves.

And to top it all, you know this is a lost fight even if you're right, so instead of contributing to steering this societal ship, these people cover their ears and go "bla bla bla we don't want it". It's so disappointingly irresponsible.

I don't trust even the best modern commercial models to do this right, but with human oversight it could be valuable.

You're right about it being a lost fight, in some ways at least. There are lawsuits in flight that could undermine it. How far that will go remains to be seen. Pissing and moaning about it won't accelerate the progress of those lawsuits, and is mainly an empty recreational activity.

-

If people use AI to summarize passages of written words to be simpler for those with poor reading skills to be able to more easily comprehend the words, then how are those readers going to improve their poor reading skills?

Dumbing things down with AI isn't going to make people smarter, I bet. This seems like accelerating into Idiocracy.

Why do you think their reading skills are poor?

-

Is the point of Wikipedia to provide everyone with information, or to allow editors to spew jargon into opaque articles that are only accessible to experts?

I think it's the former. There are very few topics that can't be explained simply, if the author is willing to consider their audience. Best of all, absolutely nothing is lost when an expert reads a well written article.

Many people who are in a position to write opaque jargon lack the perspective that would be required to explain it to a person who isn't already very well-versed. Math articles are often like that, which doesn't surprise me. I've had a few math professors who appeared completely unable to understand how to explain the subject to anyone who wasn't already good at it. I had to drop their classes and try my luck with others.

I feel like a few of them are in this thread!

-

Relax, this is not the doom and gloom some of y'all think this is and that is pretty telling.

Given the degree to which the modern day Wiki mods jump on to every edit and submission like a pack of starved lions, unleashing a computer to just pump out vaguely human-sounding word salad sounds like a bad enough idea on its face.

If the AI is being given priority over the editors and mods, it sounds even worse. All of that human labor, the endless back-and-forth in the Talk sections, arguing over the precise phrasing or the exact validity of sources or the relevancy of newly released information... and we're going to occlude it with the half-wit remarks of a glorified chatbot?

Woof. Enshittification really coming for us all.

-

If you can't make people smarter, make text dumber.

Problem: Most people only process text at the 6th grade level

Proposal: Require mainstream periodicals to only generate articles accessible to people at the 6th grade reading level

Consequence: Everyone accepts the 6th grade reading level as normal

But... New Problem: We're injecting so many pop-ups and ad-inserts into the body of text that nobody ever bothers to read the whole thing.

Proposal: Insert summaries of 6th grade material, which we will necessarily have to reduce and simplify.

Consequence: Everyone accepts the 3rd grade reading level as normal.

But... New Problem: This isn't good for generating revenue. Time to start filling those summaries with ad-injects and occluding them with pop ups.

-

Which Dijkstra quote?

Paraphrasing, but: "testing can only show presence of bugs, not their absence"
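A small sketch of what that quote means in practice, using a deliberately incomplete leap-year check. Both `is_leap_year` and `run_tests` are made up for this illustration: every test passes, and the function is still wrong.

```python
def is_leap_year(year):
    # Deliberately incomplete: ignores the rule that century years
    # are leap years only when divisible by 400.
    return year % 4 == 0

def run_tests():
    # A plausible-looking test suite written beforehand.
    assert is_leap_year(2024)
    assert not is_leap_year(2023)
    assert is_leap_year(2000)
    return "all tests passed"

print(run_tests())         # all tests passed
print(is_leap_year(1900))  # True, although 1900 was not a leap year
```

The tests only show that no bug was found for the inputs someone thought to write down, which is exactly the gap when they are used to vet generated code.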

-

Many people who are in a position to write opaque jargon lack the perspective that would be required to explain it to a person who isn't already very well-versed. Math articles are often like that, which doesn't surprise me. I've had a few math professors who appeared completely unable to understand how to explain the subject to anyone who wasn't already good at it. I had to drop their classes and try my luck with others.

I feel like a few of them are in this thread!

Trolling aside, yeah, being able to explain a concept in everyday terms takes careful thought and discipline. I'm consistently impressed by the people who write Simple articles on Wikipedia. I wish there were more of those articles.

-

How dare you bring nuance, experience and moderation into the conversation.

Seriously, though, I am a firm believer that no tech is inherently bad, though the people who wield it might well be. It's rare to see a good, responsible use of LLMs but I think this is one of them.

Whether technology is inherently bad is of nearly no matter. The problem we're dealing with is the technologies with exherent badness.

-

Paraphrasing, but: "testing can only show presence of bugs, not their absence"

I like it

-

Trolling aside, yeah, being able to explain a concept in everyday terms takes careful thought and discipline. I'm consistently impressed by the people who write Simple articles on Wikipedia. I wish there were more of those articles.

I wasn't trolling

-

Giving people incorrect information is not an accessibility feature

RAG on 2 pages of text does not hallucinate anything though. I literally use it every day.

-

I don't think the idea itself is awful, but everyone is so fed up with AI bullshit that any attempt to integrate even an iota of it will be received very poorly, so I'm not sure it's worth it.

I don't think it's everyone either - just a very vocal minority.

-

TIL: Wikipedia uses complex language.

It might just be me, but I find articles written on Wikipedia much easier to read than the shit people sometimes write or say to me. Sometimes that is incomprehensible garbage, or doesn't make much sense.

You've clearly never tried to use Wikipedia to help with your math homework

-

You've clearly never tried to use Wikipedia to help with your math homework

I never did any homework unless absolutely necessary.

Now I understand that I should have done it, because I am not good at learning shit in classrooms where there is a bunch of people who distract me, and I don't learn anything that way. Only many years later did I find out that for most things it's best for me to study alone.

That said, you are most probably right, because I have opened some math-related Wikipedia articles at some point, and they were pretty incomprehensible to me.

-

Then skip the AI summary.

For those of us who do skip the AI summaries it's the equivalent of adding an extra click to everything.

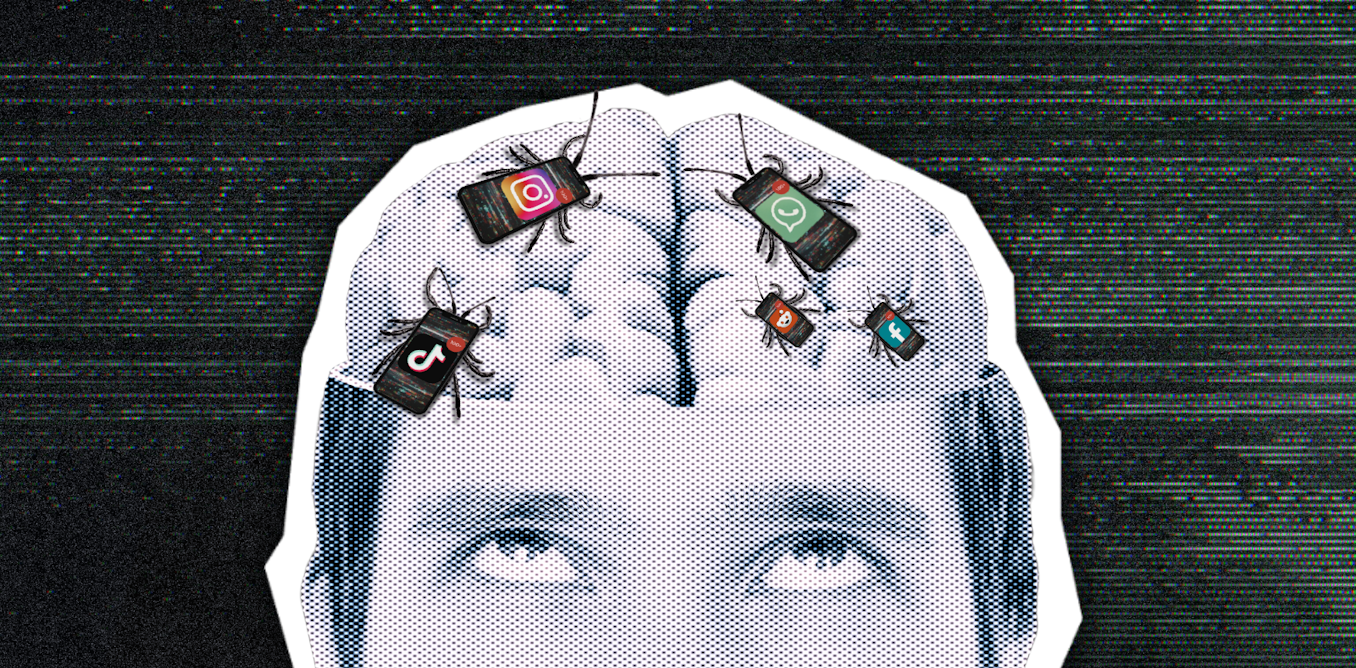

I would support optional AI, but having to physically scroll past random LLM nonsense all the time feels like the internet is being infested by something equally annoying/useless as ads, and we don't even have a blocker for it.

-

It's kind of indirectly related, but adding the query parameter `udm=14` to the URL of your Google searches removes the AI summary at the top, and there are plugins for Firefox that do this for you. My hopes for this WM project are that similar plugins will be possible for Wikipedia.

The annoying thing about these summaries is that even for someone who cares about the truth and about gathering actual information, rather than the fancy autocomplete word salad that LLMs generate, it is easy to "fall for it" and end up reading the LLM summary. Usually I catch myself, but I often end up wasting some time reading the summary. Recently the non-information was so egregiously wrong (it called a certain city in Israel non-apartheid) that I ended up installing the udm=14 plugin.

In general, I think the only use cases for fancy autocomplete are where you have a way to verify the answer. For example, if you need to write an email and can't quite find the words, if an LLM generates something, you will be able to tell whether it conveys what you're trying to say by reading it. Or in case of writing code, if you've written a bunch of tests beforehand expressing what the code needs to do, you can run those on the code the LLM generates and see if it works (if there's a Dijkstra quote that comes to your mind reading this: high five, I'm thinking the same thing).

I think it can be argued that Wikipedia articles satisfy this criterion. All you need to do to verify the summary is read the article. Will people do this? I can only speak for myself, and I know that, despite my best intentions, sometimes I won't. If that's anything to go by, I think these summaries will make the world a worse place.

Thank you so much for this!!!