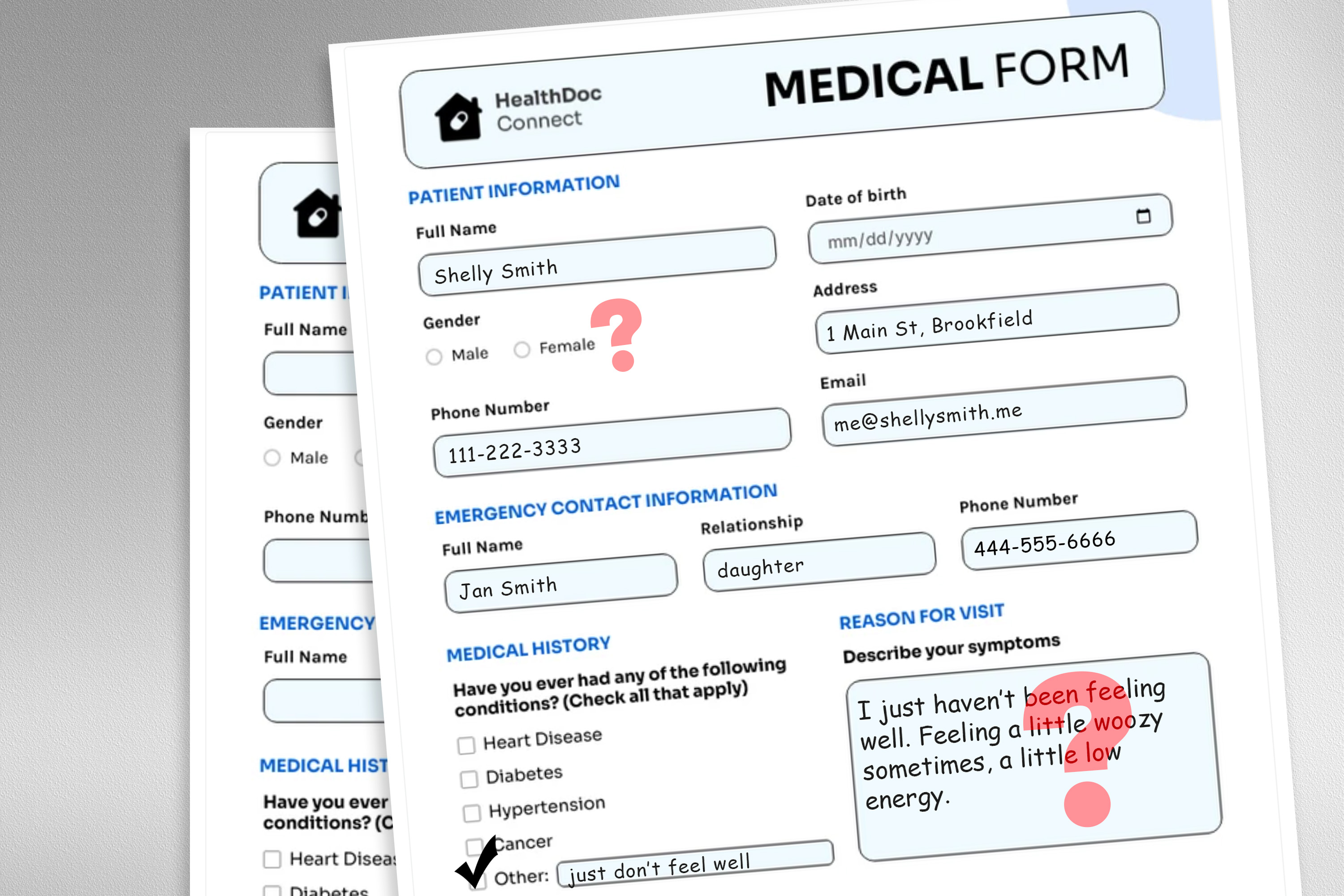

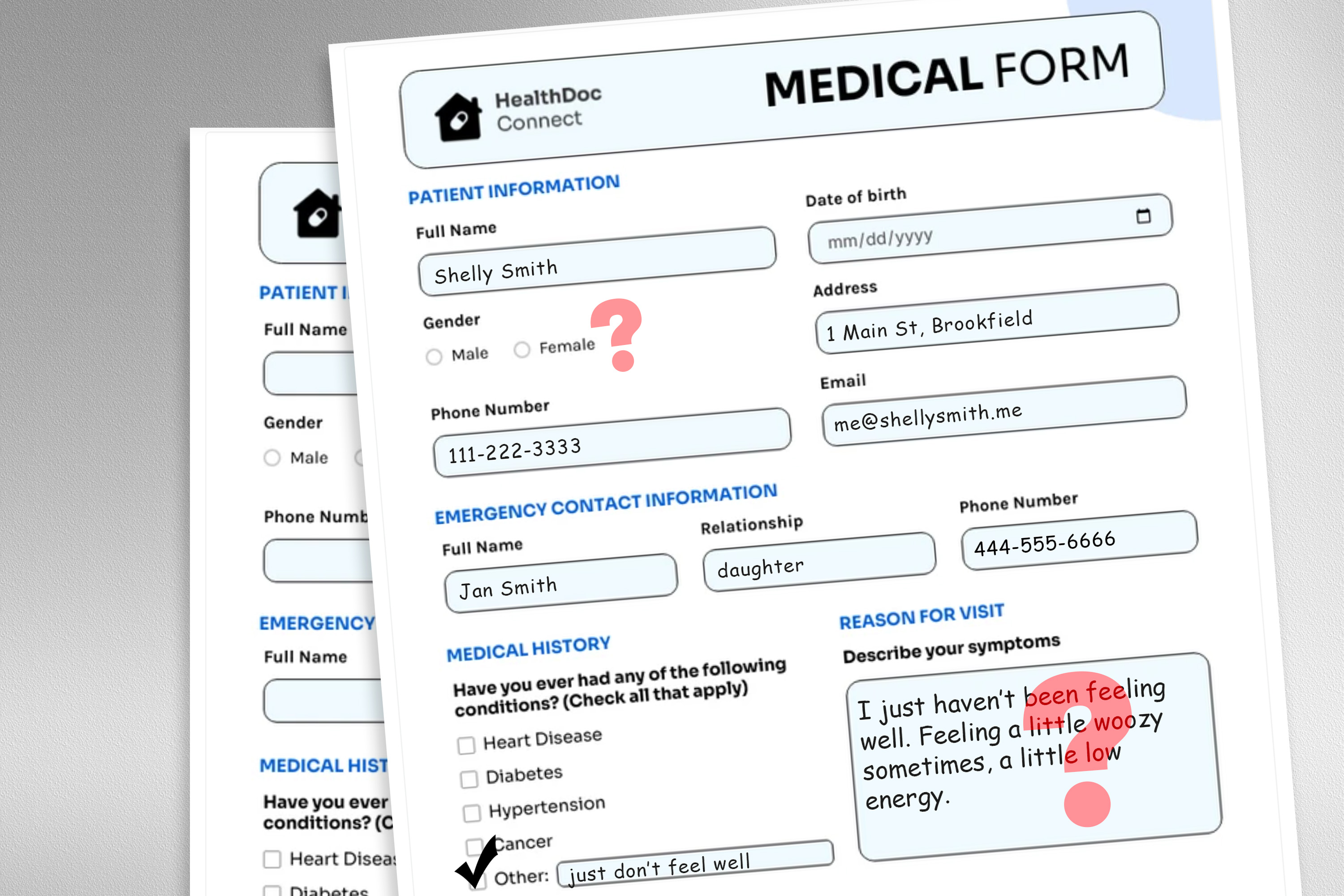

LLMs factor in unrelated information when recommending medical treatments

An MIT study finds non-clinical information in patient messages, like typos, extra whitespace, or colorful language, can reduce the accuracy of a large language model deployed to make treatment recommendations. The LLMs were consistently less accurate for female patients, even when all gender markers were removed from the text.

MIT News | Massachusetts Institute of Technology (news.mit.edu)

Why are they... why are they having autocomplete recommend medical treatment? There are specialized AI algorithms that already exist for that purpose that do it far better (though still not well enough to even assist real doctors, much less replace them).

-

Because sycophants keep saying it's going to take these jobs, eventually real scientists/researchers have to come in and show why the sycophants are wrong.

-

large language model deployed to make treatment recommendations

What kind of irrational lunatic would seriously attempt to invoke currently available Counterfeit Cognizance to obtain a "treatment recommendation" for anything...???

FFS.

Anyone who would seems a supreme candidate for a Darwin Award.

Not entirely true. I have several chronic and severe health issues. ChatGPT provides medical advice that nearly matches or surpasses what I get from multiple specialty doctors (though it heavily needs to be re-verified). In my country, doctors are horrible. This bridges the gap, albeit, again, with a strong need for oversight to be safe. It certainly has merit, though.

-

Bridging the gap is something sorely needed and LLMs are damn close to achieving.

-

Are there any studies done (or benchmarks) that show accuracy on recommendations for treatments given a medical history and condition requiring treatment?

-

I'm working on one now as a researcher. It's a crude tool to measure the quality of responses, but it's a start.

-

Gotta start somewhere, and it won’t ever improve if we don’t start improving it. So many on Lemmy assume the tech will never be good enough so why even bother, but that’s why we do things, to make the world that much better… eventually. Why else would we plant literal trees? For those that come after us.

-

I have used ChatGPT for early diagnostics with great success. Obviously it's not a doctor, but that doesn't mean it's useless.

ChatGPT can be a crucial first step, especially in places where a doctor's care is not immediately available. The initial friction for any disease diagnosis is huge, and anything that overcomes it is a net positive.

-

Gotta start somewhere, and it won’t ever improve if we don’t start improving it. So many on Lemmy assume the tech will never be good enough so why even bother, but that’s why we do things, to make the world that much better… eventually. Why else would we plant literal trees? For those that come after us.

It's not an assumption; it's just a matter of practical reality. If we're at best a decade off from that point, why pretend it could suddenly and unexpectedly improve to the point that it's unrecognizable from its current state? LLMs are neat, and scientists should keep working on them. If it weren't for all the nonsense "AI" hype we have currently, I'd expect to see them used rarely but quite successfully, since they would be used on merit, not hype.

-

Say it with me, now: ChatGPT is not a doctor.

Now, louder for the morons in the back. Altman! Are you listening?!

ChatGPT is not a doctor. But models trained on imaging can actually be a very useful tool for them to utilize.

Even years ago, just before the AI “boom”, they were asking doctors for details on how they examine patient images and then training models on that. They found that the AI was “better” than doctors specifically because it followed the doctor’s advice 100% of the time, thereby eliminating any kind of bias from the doctor that might interfere with following their own training.

Of course, the splashy headline “AI better than doctors” was ridiculous. But it does show the benefit of having a neutral tool for doctors to utilize, especially when looking at images for people who are outside of the typical demographics that much medical training is based on. (As in mostly just white men. For example, everything they train doctors on regarding knee imaging comes from images of the knees of coal miners in the UK some decades ago.)
