Judge Rules Training AI on Authors' Books Is Legal But Pirating Them Is Not

-

Lawsuits are multifaceted. This statement isn't a defense or an argument for innocence, it's just what it says - an assertion that the proposed damages are unreasonably high. If the court agrees, the plaintiff can always propose a lower damage claim that the court thinks is reasonable.

You’re right, each of the 5 million books’ authors should agree to less payment for their work, to make the poor criminals feel better.

If I steal $100 from a thousand people and spend it all on hookers and blow, do I get out of paying that back because I don’t have the funds? Should the victims agree to get $20 back instead because that’s more within my budget?

-

Does buying the book give you license to digitise it?

Does owning a digital copy of the book give you license to convert it into another format and copy it into a database?

Definitions of "Ownership" can be very different.

It seems like a lot of people misunderstand copyright so let's be clear: the answer is yes. You can absolutely digitize your books. You can rip your movies and store them on a home server and run them through compression algorithms.

Copyright exists to prevent others from redistributing your work so as long as you're doing all of that for personal use, the copyright owner has no say over what you do with it.

You even have some degree of latitude to create and distribute transformative works, with a violation only occurring when you distribute something pretty damn close to a copy of the original. Some perfectly legal examples: create a word cloud of a book, analyze the tone of a news article to help you trade stocks, produce an image containing the most prominent color in every frame of a movie, or create a search index of the words found on all websites on the internet.
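As a rough illustration of the word cloud example, here is a minimal Python sketch that reduces a text to word counts, which is the data a word cloud is drawn from. The function name `word_frequencies` and the sample sentence are made up for illustration; nothing here comes from the ruling or from any particular tool.

```python
import re
from collections import Counter

def word_frequencies(text, top_n=5):
    """Return the top_n most common words in text as (word, count) pairs."""
    # Lowercase the text and keep runs of letters/apostrophes as words.
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(words).most_common(top_n)

# Hypothetical sample text standing in for a book.
sample = "the cat sat on the mat and the dog sat too"
print(word_frequencies(sample, top_n=2))  # [('the', 3), ('sat', 2)]
```

The output keeps counts but not word order, so the original sentence cannot be rebuilt from it.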

You can absolutely do the same kinds of things an AI does with a work as a human.

-

That's legal just don't look at them or enjoy them.

Yeah, I don't think that would fly.

"Your honour, I was just hoarding that terabyte of Hollywood films, I haven't actually watched them."

-

How do they not use the same words as in the book? That's not how LLMs work. They use exactly the same words if the probabilities align. It's proved by this study. https://arxiv.org/abs/2505.12546

The "if" is working overtime in your statement

-

“I torrented all this music and movies to train my local ai models”

This is not pirated music. It's AI generated. The fact that it sounds and is named the same is just coincidence.

-

So I can't use any of these works because it's plagiarism but AI can?

That's not what it says.

Neither you nor an AI is allowed to take a book without authorization; that includes downloading and stealing it. That has nothing to do with plagiarism; it's just theft.

Assuming that the book has been legally obtained, both you and an AI are allowed to read that book, learn from it, and use the knowledge you obtained.

Both you and the AI need to follow existing copyright laws and licensing when it comes to redistributing that work.

"Plagiarism" is the act of claiming someone else's work as your own and it's orthogonal to the use of AI. If you ask either a human or an AI to produce an essay on the philosophy surrounding suicide, you're fairly likely to include some Shakespeare quotes. It's only plagiarism if you or the AI fail to provide attribution.

-

You can digitize the books you own. You do not need a license for that. And of course you could put that digital format into a database, as databases are explicit exceptions from copyright law. If you want to go to the extreme: delete first copy. Then you have it only in the database. However, AIs/LLMs are not based on databases but on neural networks. The original data gets lost when "learned".

If you want to go to the extreme: delete first copy.

You can; as I understand it, the only legal requirement is that you only use one copy at a time.

i.e., I can give my book to a friend after I'm done reading it; I can make a copy of a book and keep them at home and at the office and switch off between reading them; I'm not allowed to make a copy of the book, hand one to a friend, and then have both of us read it at the same time.

-

I am not sure what your contention, or gotcha, is with the comment above but they are quite correct. And additionally chose quite an apt example with video compression since in most ways current 'AI' effectively functions as a compression algorithm, just for our language corpora instead of video.

They seem pretty different to me.

Video compression developers go through a lot of effort to make them deterministic. We don't necessarily care that a particular video stream compresses to a particular bit sequence but we very much care that the resulting decompression gets you as close to the original as possible.

AIs will rarely produce exact replicas of anything. They synthesize outputs from heterogeneous training data. That sounds like learning to me.

The one area where there's some similarity is dimensionality reduction. It's technically a form of compression, since it makes your files smaller. It would also be an extremely expensive way to get extremely bad compression. It would take orders of magnitude more hardware resources and the images are likely to be unrecognizable.

-

The judge isn't saying that they learn or that they're people. He's saying that training falls into the same legal classification as learning.

Which doesn’t make any sense.

-

It can't speak or use any words without it being someone else's words it learned from? Unless it's giving sources, everything is always from something it learned, because it cannot speak or use words without that source in the first place?

prompt: Make up a word that is not found anywhere on the internet. If I do a search for that word, it should not exist. Provide example usage.

Qwen3-235B-A22B:

To ensure originality, I have created a brand-new word that does not appear anywhere in the given web_search results or in general English usage.

Virethra (pronounced: vye-RETH-ruh)

Part of speech: noun

Definition:

A quiet, lingering sense of connection to someone you’ve lost touch with, often accompanied by the feeling that they are thinking of you too—even if no contact has occurred in years.

Extended use (verb form):

To experience or feel this subtle emotional link.

Example Sentences:

Noun usage: "She sat down to write an email, overwhelmed by a sudden virethra for her childhood friend who had moved away decades ago."

Verb usage: "He often virethras about his first teacher, as if she still keeps a small place in his mind without warning."

Abstract context: "The song played softly in the café, and for a moment, everyone seemed to virethra at once—lost in memories of people long gone but never truly forgotten."

-

Ok, so you can buy books, scan them (or buy ebooks), and use them for AI training, but you can't just download pirated books from the internet to train AI. Did I understand that correctly?

That's my understanding too. If you obtained them legally, you can use them the same way anyone else who obtained them legally could use them.

-

Can I not just ask the trained AI to spit out the text of the book, verbatim?

They aren’t capable of that. This is why you sometimes see people comparing AI to compression, which is a bad faith argument. Depending on the training, AI can make something that is easily recognizable as derivative, but is not identical or even “lossy” identical. But this scenario takes place in a vacuum that doesn’t represent the real world. Unfortunately, we are enslaved by Capitalism, which means the output, which is being sold for-profit, is competing with the very content it was trained upon. This is clearly a violation of basic ethical principles as it actively harms those people whose content was used for training.

-

Make an AI that is trained on the books.

Tell it to tell you a story for one of the books.

Read the story without paying for it.

The law says this is ok now, right?

Sort of.

If you violated laws in obtaining the book (eg stole or downloaded it without permission) it's illegal and you've already violated the law, no matter what you do after that.

If you obtain the book legally you can do whatever you want with that book, by the first sale doctrine. If you want to redistribute the book, you need the proper license. You don't need any licensing to create a derivative work. That work has to be "sufficiently transformed" in order to pass.

-

Formatting thing: if you start a line in a new paragraph with four spaces, it assumes that you want to display the text as a code and won't line break.

This means that the last part of your comment is a long line that people need to scroll to see. If you remove one of the spaces, or you remove the empty line between it and the previous paragraph, it'll look like a normal comment

With an empty line of space:

1 space - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

2 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

3 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

4 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

Personally I prefer to explicitly wrap the text in backticks.

Three ` symbols will

Have the same effect

But the behavior is more clear to the author

-

Ask a human to draw an orc. How do they know what an orc looks like? They read Tolkien's books and were "inspired" by Peter Jackson's LOTR.

Unpopular opinion, but that's how our brains work.

Fuck you, I won't do what you tell me!

>.>

<.<

::: spoiler spoiler

I was inspired by the sometimes hilarious dnd splatbooks, thank you very much.

:::

-

Except learning in this context is building a probability map reinforcing the exact text of the book. Given the right prompt, no new generative concepts come out, just the verbatim book text trained on.

So it depends on the model I suppose and if the model enforces generative answers and blocks verbatim recitation.

-

But I thought they admitted to torrenting terabytes of ebooks?

Facebook did, but technically downloading (leeching) isn't illegal while distributing (seeding) is, and they did not seed.

-

How do they not use the same words as in the book? That's not how LLMs work. They use exactly the same words if the probabilities align. It's proved by this study. https://arxiv.org/abs/2505.12546

I'd say there are two issues with it.

First, it's a very new article with only 3 citations. The authors seem like serious researchers but the paper itself is still in the "hot off the presses" stage and wouldn't qualify as "proven" yet.

It also doesn't exactly say that books are copies. It says that in some models, it's possible to extract some portions of some texts. They cite "1984" and "Harry Potter" as two books that can be extracted almost entirely, under some circumstances. They also find that, in general, extraction rates are below 1%.
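To make the "if the probabilities align" point concrete, here is a toy Python sketch. It is not the paper's method, and every number in it is made up. It assumes only that the chance of a model emitting an exact passage is the product of its per-token probabilities, so `verbatim_probability` and the two probability lists below are purely illustrative.

```python
import math

def verbatim_probability(token_probs):
    """Probability of emitting an exact token sequence: the product of each
    token's conditional probability given the tokens before it.
    Assumes every probability is strictly positive."""
    # Sum logs instead of multiplying directly to avoid underflow
    # on long sequences.
    return math.exp(sum(math.log(p) for p in token_probs))

# Hypothetical per-token probabilities for a 50-token passage.
memorized = [0.99] * 50  # model is nearly certain of every next token
ordinary = [0.5] * 50    # model is only 50/50 on each next token

print(f"{verbatim_probability(memorized):.3f}")  # 0.605
print(f"{verbatim_probability(ordinary):.2e}")   # 8.88e-16
```

Under these made-up numbers, a passage only comes out verbatim with meaningful probability when the model is close to certain about nearly every token. That fits with some texts being extractable while most are not.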

-

And this is how you know that the American legal system should not be trusted.

Mind you, I am not saying this is an easy case; it's not. But the framing that piracy is wrong but ML training for profit is not wrong is clearly based on oligarch interests and demands.

If this is the ruling which causes you to lose trust that any legal system (not just the US') aligns with morality, then I have to question where you've been all this time.

-

Your very first statement calling my basis for my argument incorrect is incorrect lol.

LLMs “learn” things from the content they consume. They don’t just take the content in wholesale and keep it there to regurgitate on command.

On your last part, unless someone uses AI to recreate the tone etc of a best selling author and then markets their book/writing as being from said best selling author, and doesn’t use trademarked characters etc, there’s no issue. You can’t copyright a style of writing.

I'll repeat what you said with emphasis:

AI can “learn” from and “read” a book **in the same way** a person can and does

The emphasized part is incorrect. It's not the same, yet your argument seems to be that because (your claim) it is the same, then it's no different from a human reading all of these books.

Regarding your last point, copyright law doesn't just kick in because you try to pass something off as an original (by, for ex, marketing a book as being from a best selling author). It applies based on similarity whether you mention the original author or not.

-

-

Oh Look, a New Censorship Tool: It's a new era for site-blocking bills — featuring some of the same ol' mistakes as before.

Technology 1

1

-

Russia's State Duma passes bill to create state messaging app as it considers blocking WhatsApp

Technology 1

1

-

-

-

-

-

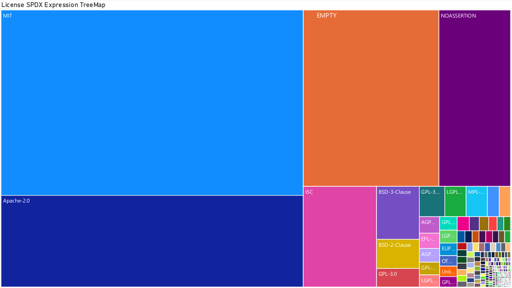

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1