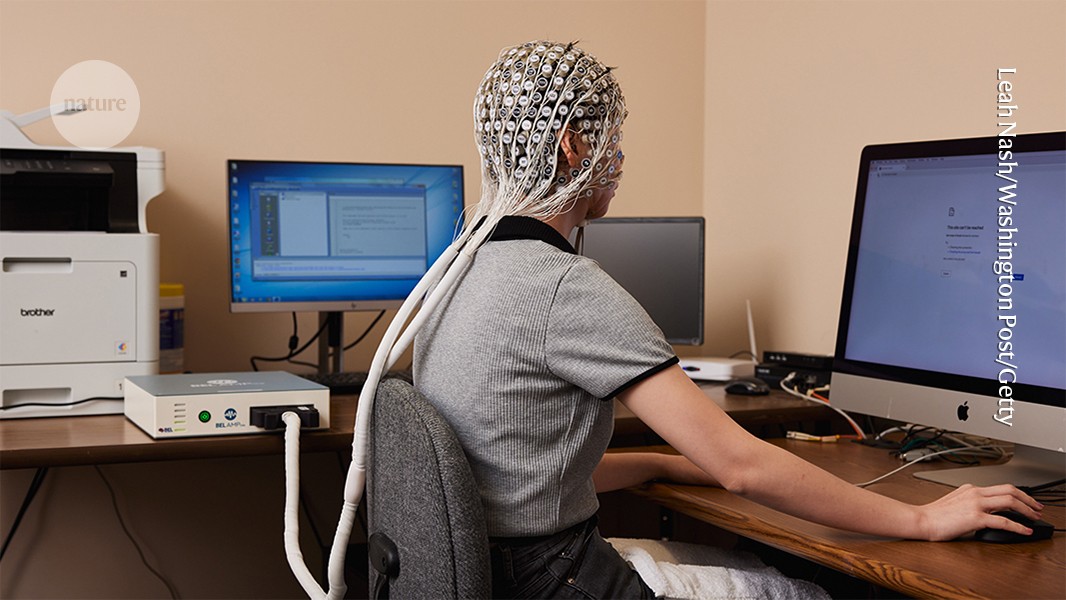

Schools are using AI to spy on students and some are getting arrested for misinterpreted jokes and private conversations

-

no paywall https://archive.md/1lSRA

Much the same thing is happening at my school; a system like that was implemented last year. I'm not sure whether it's AI (let's be real, it probably is), let alone whether it's effective, but I feel like students using the Internet for social media or chatting with peers are mostly just goofing off, not conducting any kind of serious crime.

While I don't doubt that some students get up to illegal activity (90% of which, I feel, is petty shit that doesn't affect anyone), it's such a waste of time and money to watch EVERYTHING they do on the Internet just to catch them saying a fucked-up joke among their friends. Instead of being so disrespectful of privacy and treating these students like mini Pablo Escobars, I'd suggest that schools, for lack of a better word, implement mental health courses, provide therapists, and generally try to improve student mental health, free of charge or at low cost. Because if you're so concerned about the next Columbine happening, or about cyberbullying potentially taking place, then being concerned about students' mental health and general well-being should be just as important. What about the students who have horrible, abusive parents at home, or who have trauma or other issues? They're sadly being deprioritised in favour of whatever this is.

I'm so tired of schools using the "Watch What Everyone Is Doing At All Times Or Restrict What They're Allowed To Do To Make Sure They're In Line" move to address security and safety issues. It's lazy, and it's a waste of money when those funds could be put to much better use on more community-based, less authoritarian measures. And I haven't even gotten to the AI part, which just goes to show how flawed this whole ordeal is in the first place.

-

Knowing that Europe literally has a problem with its soccer audiences making monkey noises at black athletes makes this particular bit of condescension all the more ridiculous.

Is this better, worse, or the same as throwing dildos at female WNBA athletes?

-

I didn’t realize the schools were using Run, Hide, Fight. That is the same policy for hospital staff in the event of an active shooter. Maddening.

Having worked in quite a few fields in the last 15 years or so, it's the same active shooter training they give everyone. Even in stores that sell guns.

I'll let the reader decide how fucked up it is that there's basically a countrywide, accepted "standard response".

-

I didn’t know Reddit admins were also school admins

-

My sense of humor is dry, dark, and absurdist. I'd go to jail every week for the sorts of things I joke about if I were a kid today. This is complete lunacy.

Example of an average joke on my part: speed up and run over that old lady crossing the street!

It makes my partner laugh. I laugh. We both know I don’t mean it. But a crappy AI tool wouldn’t understand that.

Yeah, especially around middle school: the "darker" the "joke," the funnier it was.

-

It is not the tool; it's the lazy, stupid person who created the implementation. The same stupidity applies to people who run word filtering in conventional code. AI is just an extra set of eyes. It is not absolute, and giving it any kind of unchecked authority is insane. The administrators who implemented this are who everyone should be upset at.

The insane rhetoric around AI is a political and commercial campaign by Altman and proprietary AI companies looking to become monopolies. It is a Kremlin-scale misinformation campaign that has been extremely successful at roping in the dopes. Don't be a dope.

This situation with AI tools is exactly 100% the same as with every past scapegoated tool. I can create undetectable deepfakes in GIMP or Photoshop. If I do so with intent to harm, or out of grossly irresponsible stupidity, that is my fault, not the tool's. The accessibility of the tool is irrelevant. Those dumb enough to blame the tool are the convenient idiot pawns of the worst humans alive right now. Blame the idiots in leadership positions who use these tools with no morals or ethics, and don't listen to those same types of people pushing a spurious dichotomy to create a monopoly. They prey on conservative ignorance rooted in tribalism and dogma, which naturally rejects all unfamiliar new things in life. This is evolutionary behavior, a required mechanism for survival in the natural world. Some people will always scatter across the spectrum of possibilities, but the center majority is stupid and easily influenced in ways that enable tyrannical hegemony.

AI is not some panacea. It is a new, useful tool. Absent-minded stupidity is leading to the same kind of dystopian indifference that led to the "free internet," which has destroyed democracy and is the direct cause of most political and social issues in the present world, because it normalized digital slavery: ownership over a part of your person, for sale, exploitation, and manipulation without your knowledge or consent.

I only say this because I care about you, digital neighbor. I know it is useless to argue against dogma, but this is the fulcrum of a dark, dystopian future that populist dogma is welcoming with the open arms of ignorance, just like those who said the digital world was a meaningless novelty 30 years ago.

You seem to be handwaving all concerns about the actual tech, but I think the fact that "training" is literally just plagiarism, and the absolutely bonkers energy costs for doing so, do squarely position LLMs as doing more harm than good in most cases.

The innocent tech here is the concept of the neural net itself, but unless they're being trained on a constrained corpus of data and then used to analyze that or analogous data in a responsible and limited fashion then I think it's somewhere on a spectrum between "irresponsible" and "actually evil".

-

With the help of artificial intelligence, technology can dip into online conversations and immediately notify both school officials and law enforcement.

Not sure what's worse here: how the police overreacted or that the software immediately contacts law enforcement, without letting teachers (n.b.: they are the experts here, not the police) go through the positives first.

But oh, that would mean having to pay somebody, at least some extra hours, in addition to the no doubt expensive software. JFC.

I hate how fully leapfrogged the conversation about surveillance was. It's so disgusting that it's just assumed that all of your communications should be read by your teachers, parents, and school administration just because you're a minor. Kids deserve privacy too.

-

In such a world, hoping for a different outcome is just a dream. People always look for the easy way out, and in the end, yes, we will live under digital surveillance like animals in a zoo. The question is how to endure it without breaking down, especially in the event of collapse and poverty. It's better to expect the worst and be prepared than to look for a way out, try to rebel, and then get trapped.

-

If the world is ruled by psychopaths who seek absolute power for the sake of even more power, then the very existence of such technologies will lead to very sad consequences, quite possibly even to slavery. Have you heard of technofeudalism?

-

Proper gun control?

Nah, let's spy on kids

No, rather, to monitor future slaves so that they stay obedient.

-

And strip-searched!

Without notifying parents

-

It's for the children!!

/s

To them, these aren't children but wolves that might snap, so they try to turn them into obedient dogs.

-

I think that's illegal now too. Can't have anything interfering with the glorious vision of a relentlessly productive citizenry who ideally slave away for the benefit of their owners until they die in the office chair at age 74, right before qualifying for a pension.

Well, except for the health "care" system. That's an exception, but only because the only thing better than ruthless exploitation is diversified ruthless exploitation. Gotta keep the peons on their toes, lest they get uppity.

I think some rich man once said: "I don't need a nation of thinkers, I need a nation of slaves." Unless I'm mistaken, of course.

It's like saying predators have learned not to chase their prey but to raise it, giving it the illusion of freedom while actually leading it to slaughter like cattle. I like this cattle idea, I couldn't resist lol. :3

-

Idiots and assholes exist everywhere. At least ours don't have guns.

Yeah, they use knives instead.

-

It seems that Big Brother is watching you... except now it's already reality. Oh, and what will happen if someone commits a thoughtcrime?

-

Or we could have legislation that punishes the companies that run these bullshit systems AND the authorities that allow and use them when they flop, like in this case.

Hey, dreaming is still free (don't know how much longer though).

How should I put this: if you only dream while sitting on the couch, then alas, everything will end sadly. And if you get a neurochip implanted in your brain, you won't even be able to dream lol. :3

-

Okay, sure, but in many cases the tech in question is actually useful for lots of other stuff besides repression. I don't think that's the case with LLMs. They have a tiny bit of actual usefulness that's completely overshadowed by the insane skyscrapers of hype and lies that have been built up around their "capabilities".

With "AI" I don't see any reason to go through such gymnastics separating bad actors from neutral tech. The value in the tech is non-existent for anyone who isn't either a researcher dealing with impractically large and unwieldy datasets, or of course a grifter looking to profit off of bigger idiots than themselves. It has never and will never be a useful tool for the average person, so why defend it?

-

There's nothing to defend. Tell me, would you defend someone who threatens you and deprives you of the ability to create, making art unnecessary? No, you would go and kill him before the bastard grows up. So what's the point of defending a bullet that will kill you? Are you crazy?

-

They’re not residents; you’re thinking of nursing homes. Roughly a third of hospital patients can walk without assistance, but yes. The rationale is that staff shouldn't turn themselves into bullet sponges, because then who is left to remove the bullets once the shooter is dead? Either way, what can unarmed people, untrained to fight and wearing the body-armor equivalent of pajamas, do to stop bullets?

The patient room doors don’t lock. Sometimes those doors are made of glass. But herding the patients who can walk into the halls is likely an opportunity for an active shooter to hit more targets. As such, everyone hunkers down, and the police take care of it. In theory, per the training modules. Police sometimes run drills with the hospital, depending on locale and interagency dealings.

Shutting all the fire doors is likely the only defense. Those nurses can be crafty on the fly, but there are limitations.

I’d bet a secondary purpose of this policy is hospitals avoiding liability for workplace injury/death lawsuits.

I just hadn’t known until now that in grasping for solutions schools found the standardized hospital policy and are running with it.

I guess that the hospital is one of the better places to get shot.

-

This is exactly what is going to happen if the EU's fucking Chat Control actually gets enforced, but for an entire continent. Fuck this shit. Privacy is a human right.
