960 EVO M.2 vs. 970 PRO M.2

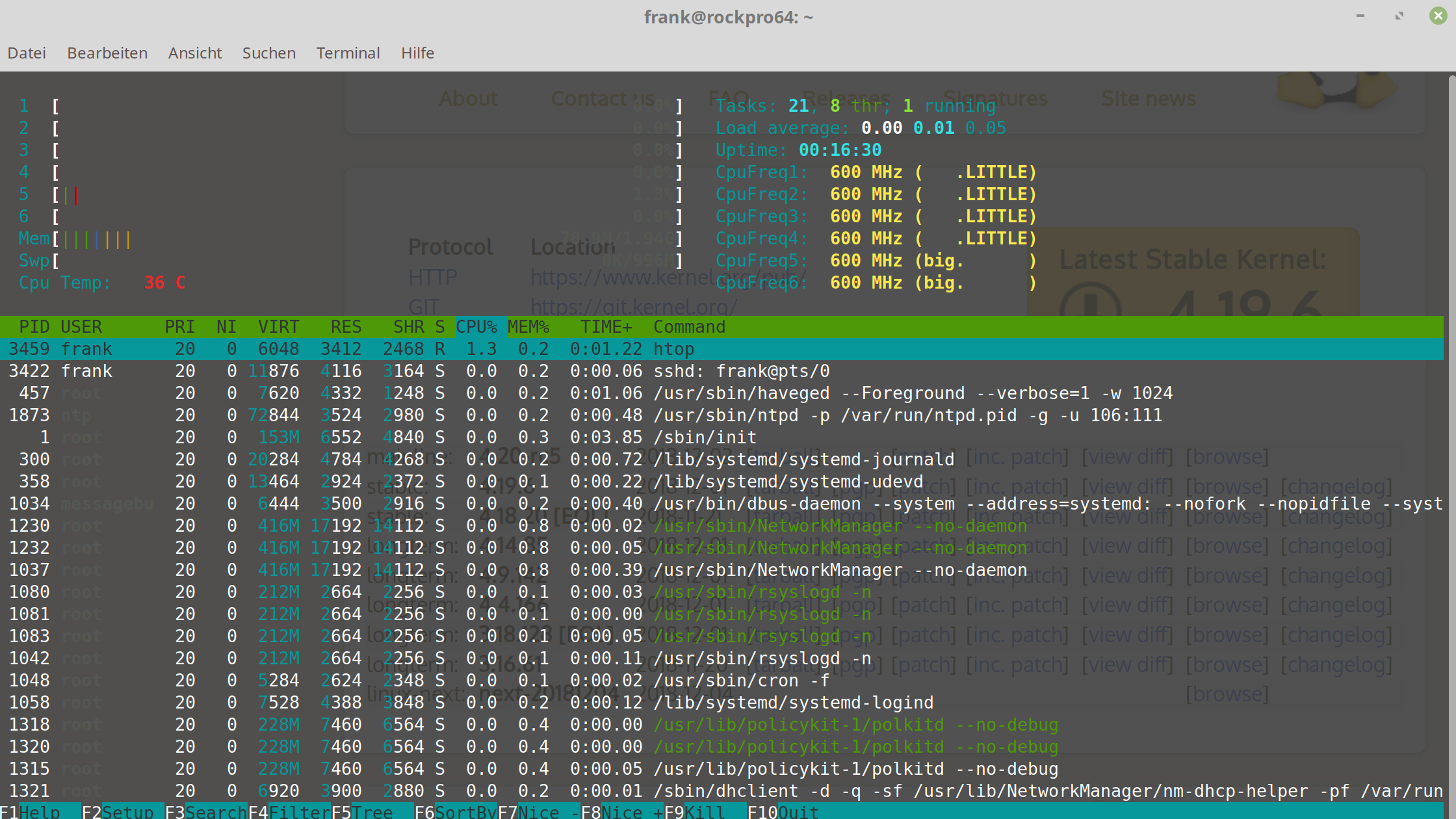

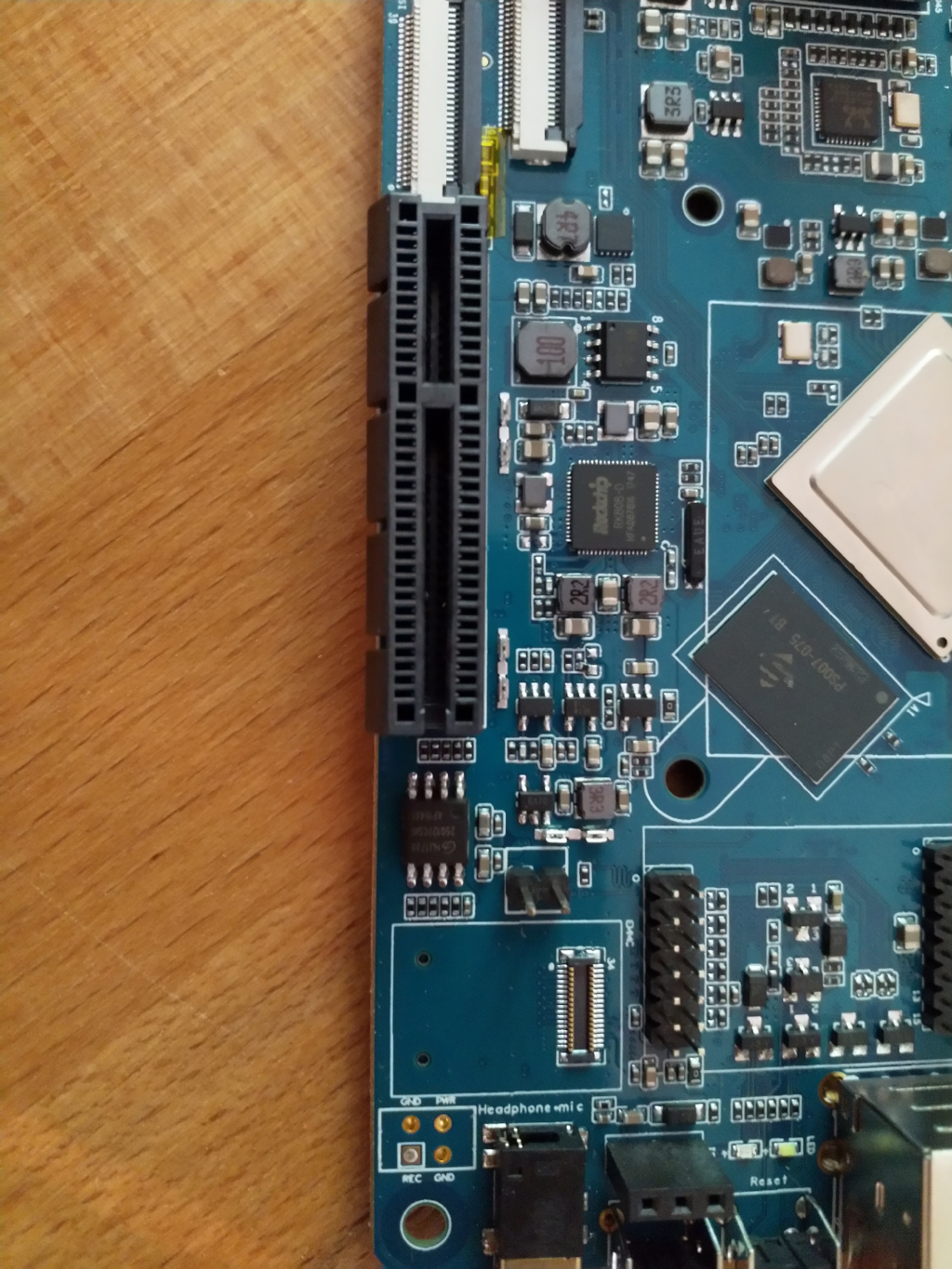


Hardware

- 960 EVO NVMe M.2 250GB

- 970 PRO NVMe M.2 500GB

Software: Linux 4.18

```
rock64@rockpro64v2_0:/mnt$ uname -a
Linux rockpro64v2_0 4.18.0-rc5-1050-ayufan-ge70bd2ab8802 #1 SMP PREEMPT Thu Jul 26 08:33:14 UTC 2018 aarch64 aarch64 aarch64 GNU/Linux
```

EVO

iozone

```
rock64@rockpro64v2_0:/mnt$ sudo iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Iozone: Performance Test of File I/O
            Version $Revision: 3.429 $
        Compiled for 64 bit mode.
        Build: linux

    Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins
                 Al Slater, Scott Rhine, Mike Wisner, Ken Goss
                 Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,
                 Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,
                 Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,
                 Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,
                 Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,
                 Vangel Bojaxhi, Ben England, Vikentsi Lapa.

    Run began: Sat Jul 28 11:59:54 2018

    Include fsync in write timing
    O_DIRECT feature enabled
    Auto Mode
    File size set to 102400 kB
    Record Size 4 kB
    Record Size 16 kB
    Record Size 512 kB
    Record Size 1024 kB
    Record Size 16384 kB
    Command line used: iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Output is in kBytes/sec
    Time Resolution = 0.000001 seconds.
    Processor cache size set to 1024 kBytes.
    Processor cache line size set to 32 bytes.
    File stride size set to 17 * record size.
                                                               random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
          102400       4    78392   146717   161310   163664    54188   142760
          102400      16   272030   416470   446603   451929   198784   410356
          102400     512  1032819  1054756  1010756  1039591   839020  1054094
          102400    1024  1075290  1124016  1026463  1056224   942848  1126785
          102400   16384   911810  1391243  1419922  1476347  1459080  1375922

iozone test complete.
```

dd

```
rock64@rockpro64v2_0:/mnt$ sudo dd if=/dev/zero of=tempfile bs=1M count=1024 conv=fdatasync,notrunc
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 2.78991 s, 385 MB/s
rock64@rockpro64v2_0:/mnt$ sudo echo 3 | sudo tee /proc/sys/vm/drop_caches
3
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 1.29534 s, 829 MB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.911316 s, 1.2 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.532248 s, 2.0 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.714217 s, 1.5 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.528779 s, 2.0 GB/s
rock64@rockpro64v2_0:/mnt$
```

PRO

iozone

```
rock64@rockpro64v2_0:/mnt$ sudo iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Iozone: Performance Test of File I/O
            Version $Revision: 3.429 $
        Compiled for 64 bit mode.
        Build: linux

    Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins
                 Al Slater, Scott Rhine, Mike Wisner, Ken Goss
                 Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,
                 Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,
                 Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,
                 Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,
                 Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,
                 Vangel Bojaxhi, Ben England, Vikentsi Lapa.

    Run began: Sat Jul 28 12:08:50 2018

    Include fsync in write timing
    O_DIRECT feature enabled
    Auto Mode
    File size set to 102400 kB
    Record Size 4 kB
    Record Size 16 kB
    Record Size 512 kB
    Record Size 1024 kB
    Record Size 16384 kB
    Command line used: iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Output is in kBytes/sec
    Time Resolution = 0.000001 seconds.
    Processor cache size set to 1024 kBytes.
    Processor cache line size set to 32 bytes.
    File stride size set to 17 * record size.
                                                               random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
          102400       4    83920   146526   171217   172733    56965   145921
          102400      16   271229   414900   454454   460018   193626   413496
          102400     512  1021580  1033256  1007794  1057973   990788  1075201
          102400    1024  1066333  1107758  1038792  1079089  1048932  1116344
          102400   16384   918513  1418530  1433672  1529740  1523500  1389826

iozone test complete.
```

dd

```
rock64@rockpro64v2_0:/mnt$ sudo dd if=/dev/zero of=tempfile bs=1M count=1024 conv=fdatasync,notrunc
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 1.76911 s, 607 MB/s
rock64@rockpro64v2_0:/mnt$ echo 3 | sudo tee /proc/sys/vm/drop_caches
3
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 1.71439 s, 626 MB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.574552 s, 1.9 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.724723 s, 1.5 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.70586 s, 1.5 GB/s
rock64@rockpro64v2_0:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.512834 s, 2.1 GB/s
rock64@rockpro64v2_0:/mnt$
```
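GNU dd reports its rate in decimal units (1 MB = 1,000,000 bytes), which the output itself shows: 1073741824 bytes in 2.78991 s gives the printed 385 MB/s. iozone, by contrast, prints kBytes/sec. The minimal sketch below recomputes dd's figure from the byte count and elapsed time and also prints MiB/s, assuming that makes it easier to line up with iozone's kB-based numbers. The function name `dd_mbps` is hypothetical. Note also that only the first read follows `drop_caches`; the repeated reads of the same 1 GiB file are likely served at least partly from the page cache.

```shell
#!/bin/sh
# Hypothetical helper: recompute dd's throughput from its summary line.
# GNU dd prints rates in SI units (1 MB = 1,000,000 bytes); MiB/s
# (1 MiB = 1,048,576 bytes) is printed alongside for comparison.
dd_mbps() {
  # $1: bytes copied, $2: elapsed seconds (both from dd's last output line)
  awk -v b="$1" -v s="$2" \
    'BEGIN { printf "%.0f MB/s (%.0f MiB/s)\n", b / s / 1000000, b / s / 1048576 }'
}

dd_mbps 1073741824 2.78991   # 960 EVO write, Linux 4.18
dd_mbps 1073741824 1.76911   # 970 PRO write, Linux 4.18
```

The first call prints `385 MB/s (367 MiB/s)`, matching dd's own 385 MB/s for the 960 EVO write.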

Software: Linux 4.4.132

```
rock64@rockpro64v2_1:/mnt$ uname -a
Linux rockpro64v2_1 4.4.132-1075-rockchip-ayufan-ga83beded8524 #1 SMP Thu Jul 26 08:22:22 UTC 2018 aarch64 aarch64 aarch64 GNU/Linux
```

EVO

iozone

```
rock64@rockpro64v2_1:/mnt$ sudo iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Iozone: Performance Test of File I/O
            Version $Revision: 3.429 $
        Compiled for 64 bit mode.
        Build: linux

    Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins
                 Al Slater, Scott Rhine, Mike Wisner, Ken Goss
                 Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,
                 Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,
                 Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,
                 Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,
                 Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,
                 Vangel Bojaxhi, Ben England, Vikentsi Lapa.

    Run began: Sat Jul 28 12:35:25 2018

    Include fsync in write timing
    O_DIRECT feature enabled
    Auto Mode
    File size set to 102400 kB
    Record Size 4 kB
    Record Size 16 kB
    Record Size 512 kB
    Record Size 1024 kB
    Record Size 16384 kB
    Command line used: iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Output is in kBytes/sec
    Time Resolution = 0.000001 seconds.
    Processor cache size set to 1024 kBytes.
    Processor cache line size set to 32 bytes.
    File stride size set to 17 * record size.
                                                               random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
          102400       4    39260    84776   108205   107834    32124    72701
          102400      16   120563   233999   269123   273692   117401   207395
          102400     512   643522   575756   455850   462362   416099   548623
          102400    1024   522939   613743   484305   491560   463470   617078
          102400   16384  1085393  1168020  1064472  1089797  1088203  1123589

iozone test complete.
```

dd

```
rock64@rockpro64v2_1:/mnt$ sudo dd if=/dev/zero of=tempfile bs=1M count=1024 conv=fdatasync,notrunc
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 2.26845 s, 473 MB/s
rock64@rockpro64v2_1:/mnt$ sudo echo 3 | sudo tee /proc/sys/vm/drop_caches
3
rock64@rockpro64v2_1:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 1.53688 s, 699 MB/s
rock64@rockpro64v2_1:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.743431 s, 1.4 GB/s
rock64@rockpro64v2_1:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.686147 s, 1.6 GB/s
rock64@rockpro64v2_1:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.638274 s, 1.7 GB/s
rock64@rockpro64v2_1:/mnt$ sudo dd if=tempfile of=/dev/null bs=1M count=1024
1024+0 records in
1024+0 records out
1073741824 bytes (1.1 GB, 1.0 GiB) copied, 0.672767 s, 1.6 GB/s
rock64@rockpro64v2_1:/mnt$
```

The iozone results on 4.4 already look considerably worse than on 4.18, so I skipped the remaining tests on this kernel.
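To put a number on "considerably worse", one option is to divide each 4.4 iozone figure by the matching 4.18 figure for the same record size. The sketch below does that for one row pair with awk; the function name `iozone_ratio` is hypothetical, and it assumes rows in the layout above (kB, reclen, then write, rewrite, read, reread, random read, random write).

```shell
#!/bin/sh
# Hypothetical helper: print each column of iozone row B as a percentage
# of the same column in baseline row A (rows must share the same reclen).
# Expected row layout: kB reclen write rewrite read reread rand-read rand-write
iozone_ratio() {
  # $1: baseline row (e.g. Linux 4.18), $2: row to compare (e.g. Linux 4.4)
  printf '%s\n%s\n' "$1" "$2" | awk '
    NR == 1 { for (i = 3; i <= NF; i++) base[i] = $i; next }
    {
      printf "reclen %s:", $2
      for (i = 3; i <= NF; i++) printf " %.0f%%", 100 * $i / base[i]
      printf "\n"
    }'
}

# 960 EVO, 512 kB records: Linux 4.18 (baseline) vs. Linux 4.4.132
iozone_ratio "102400 512 1032819 1054756 1010756 1039591 839020 1054094" \
             "102400 512 643522 575756 455850 462362 416099 548623"
```

For the 512 kB rows this prints `reclen 512: 62% 55% 45% 44% 50% 52%`, i.e. 4.4 reaches roughly half the 4.18 throughput at that record size.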

-

The 970 is now in my main PC, which runs a current Linux Mint 19 Cinnamon. For comparison:

100M

```
frank@frank-MS-7A34:~$ sudo iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
[sudo] Passwort für frank:
    Iozone: Performance Test of File I/O
            Version $Revision: 3.429 $
        Compiled for 64 bit mode.
        Build: linux-AMD64

    Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins
                 Al Slater, Scott Rhine, Mike Wisner, Ken Goss
                 Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,
                 Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,
                 Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,
                 Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,
                 Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,
                 Vangel Bojaxhi, Ben England, Vikentsi Lapa.

    Run began: Sun Aug 19 16:52:19 2018

    Include fsync in write timing
    O_DIRECT feature enabled
    Auto Mode
    File size set to 102400 kB
    Record Size 4 kB
    Record Size 16 kB
    Record Size 512 kB
    Record Size 1024 kB
    Record Size 16384 kB
    Command line used: iozone -e -I -a -s 100M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Output is in kBytes/sec
    Time Resolution = 0.000001 seconds.
    Processor cache size set to 1024 kBytes.
    Processor cache line size set to 32 bytes.
    File stride size set to 17 * record size.
                                                               random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
          102400       4    92640   121912   131074   139525    45719   116653
          102400      16   254286   285267   285539   320370   108049   314486
          102400     512   537947   581765   606103   598137   537701   588214
          102400    1024   566892   547921   567369   597286   518014   558686
          102400   16384  1407884  1642148  1941120  2115608  2006947  1668118

iozone test complete.
```

1000M

```
frank@frank-MS-7A34:~$ sudo iozone -e -I -a -s 1000M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Iozone: Performance Test of File I/O
            Version $Revision: 3.429 $
        Compiled for 64 bit mode.
        Build: linux-AMD64

    Contributors:William Norcott, Don Capps, Isom Crawford, Kirby Collins
                 Al Slater, Scott Rhine, Mike Wisner, Ken Goss
                 Steve Landherr, Brad Smith, Mark Kelly, Dr. Alain CYR,
                 Randy Dunlap, Mark Montague, Dan Million, Gavin Brebner,
                 Jean-Marc Zucconi, Jeff Blomberg, Benny Halevy, Dave Boone,
                 Erik Habbinga, Kris Strecker, Walter Wong, Joshua Root,
                 Fabrice Bacchella, Zhenghua Xue, Qin Li, Darren Sawyer,
                 Vangel Bojaxhi, Ben England, Vikentsi Lapa.

    Run began: Sun Aug 19 15:28:38 2018

    Include fsync in write timing
    O_DIRECT feature enabled
    Auto Mode
    File size set to 1024000 kB
    Record Size 4 kB
    Record Size 16 kB
    Record Size 512 kB
    Record Size 1024 kB
    Record Size 16384 kB
    Command line used: iozone -e -I -a -s 1000M -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2
    Output is in kBytes/sec
    Time Resolution = 0.000001 seconds.
    Processor cache size set to 1024 kBytes.
    Processor cache line size set to 32 bytes.
    File stride size set to 17 * record size.
                                                               random   random     bkwd   record   stride
              kB  reclen    write  rewrite     read   reread     read    write     read  rewrite     read   fwrite frewrite    fread  freread
         1024000       4    95635   121379   108328   108265    45369   123356
         1024000      16   239238   314359   245937   241877   105865   297193
         1024000     512   596812   620661   442100   382367   351948   613525
         1024000    1024   608903   611898   434687   417192   412018   646465
         1024000   16384  1898738  2004622  2143647  2188062  2099674  1983240

iozone test complete.
```

At the 16384 kB record size the PC reads at roughly 1.9 to 2.2 million kB/s, versus about 1.4 to 1.5 million kB/s for the same 970 PRO in the ROCKPro64 under 4.18, so there still seems to be some room for improvement on the ROCKPro64.
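All iozone runs in this post use the same arguments; only the test file size (`-s`) changes, from 100M to 1000M on the PC. A minimal sketch of a hypothetical helper, `iozone_args`, that prints this argument list so the comparison can be repeated on another machine. The flag descriptions in the comments summarize the iozone manual; the helper itself is an illustration, not part of iozone.

```shell
#!/bin/sh
# Hypothetical helper: print the iozone arguments used throughout this post,
# with the test file size (-s) as the only parameter (default: 100M).
#   -e        include fsync/fflush in the write timing
#   -I        open files with O_DIRECT (bypass the page cache)
#   -a        automatic mode
#   -s SIZE   size of the test file
#   -r SIZE   record size (one -r per size to test)
#   -i 0/1/2  write/rewrite, read/reread, random read/write
iozone_args() {
  printf '%s\n' "-e -I -a -s ${1:-100M} -r 4k -r 16k -r 512k -r 1024k -r 16384k -i 0 -i 1 -i 2"
}

# Print the argument list for the 1000M run. To actually run it, use
#   sudo iozone $(iozone_args 1000M)
# from a directory on the SSD under test (left unquoted so the shell splits it).
iozone_args 1000M
```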
