AI slows down some experienced software developers, study finds

-

Explain this to me, AI. Reads back exactly what's on the screen, including comments, somehow with more words but less information.

Ok....Ok, this is tricky. AI, can you do this refactoring so I don't have to keep track of everything? No... That's all wrong... Yeah, I know it's complicated, that's why I wanted it refactored. No, you can't do that... fuck, now I can either toss all your changes and do it myself or spend the next 3 hours rewriting it.

Yeah, I struggle to see how anyone finds this garbage useful.

This was the case a year or two ago but now if you have an MCP server for docs and your project and goals outlined properly it's pretty good.

-

Same. I also like it for basic research and helping with syntax for obscure SQL queries, but coding hasn't worked very well. One of my less technical coworkers tried to vibe code something and it didn't work well. Maybe it would do okay on something routine, but generally speaking it would probably be better to use a library for that anyway.

I actively hate the term "vibe coding." The fact is, while using an LLM for certain tasks is helpful, trying to build out an entire, production-ready application just by prompts is a huge waste of time and is guaranteed to produce garbage code.

At some point, people like your coworker are going to have to look at the code and work on it, and if they don't know what they're doing, they'll fail.

I commend them for giving it a shot, but I also commend them for recognizing it wasn't working.

-

I like the saying that LLMs are “good” at stuff you don’t know. That’s about it.

When you know the subject, it stops being very useful, because you already know the obvious stuff the LLM could help you with.

FreedomAdvocate is right. IMO the best use case for AI is things you have an understanding of but need some assistance with. You need to understand enough to catch at least the impactful errors made by the LLM.

-

Fun how the article concludes that AI tools are still good anyway, actually.

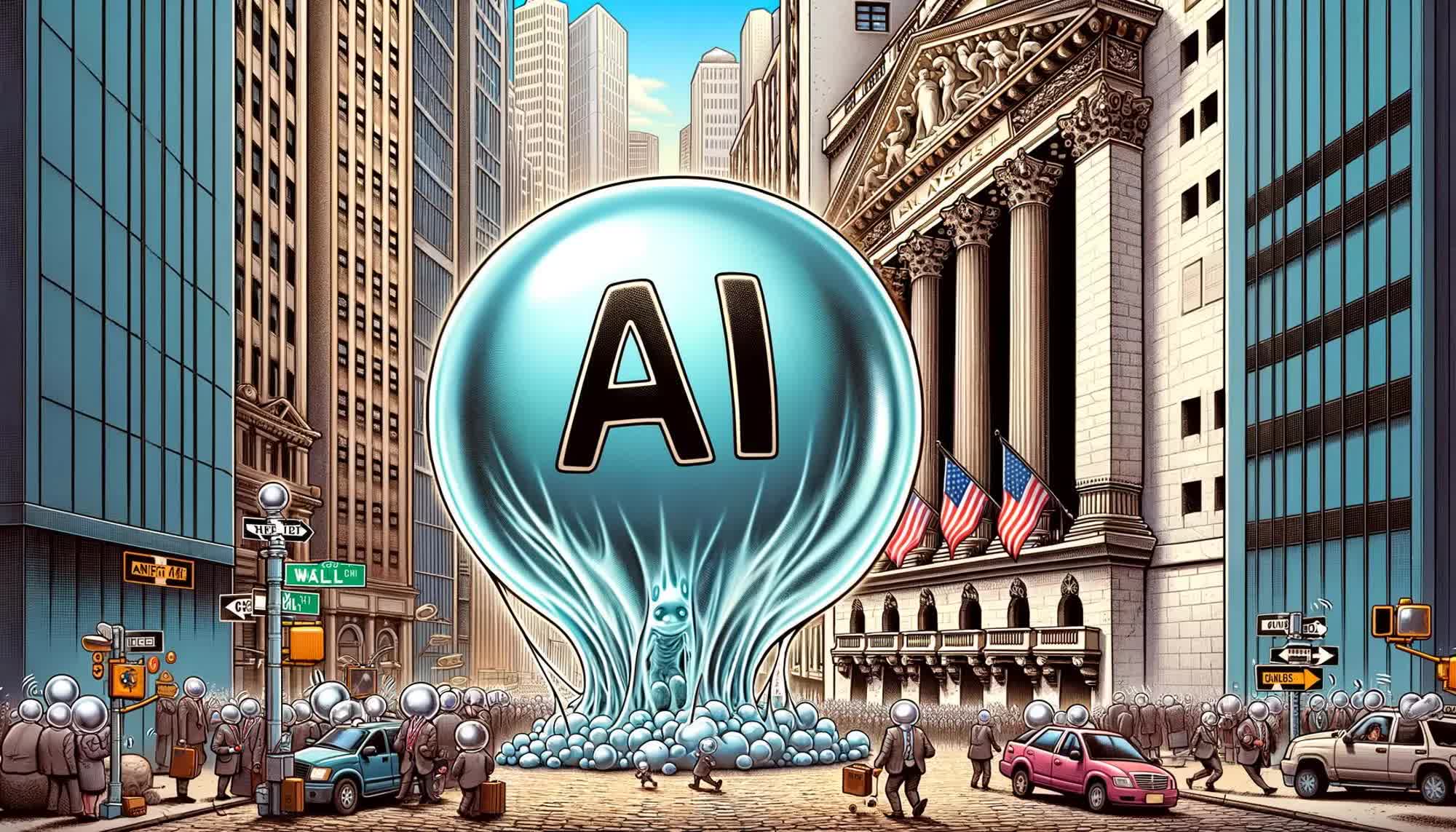

This AI hype is a sickness

LLMs are very good in the correct context; forcing people to use them for things they are already great at is not the correct context.

-

That's still not actually knowing anything. It's just temporarily adding more context to its model.

And it's always very temporary. I have a yarn project I'm working on right now, and I used Copilot in VS Code in agent mode to scaffold it as an experiment. One of the refinements I included in the prompt file to build it is reminders throughout for things it wouldn't need reminding of if it actually "knew" the repo.

- I had to constantly remind it that it's a yarn project, otherwise it would inevitably start trying to use NPM as it progressed through the prompt.

- For some reason, when it's in agent mode and it makes a mistake, it wants to delete files it has fucked up, which always requires human intervention, so I peppered the prompt with reminders not to do that, but to blank the file out and start over in it.

- The frontend of the project uses TailwindCSS. It could not remember not to keep trying to downgrade its configuration to an earlier version instead of using the current one, so I wrote the entire configuration for it by hand and inserted it into the prompt file. If I let it try to build the configuration itself, it would inevitably fuck it up and then say something completely false, like, "The version of TailwindCSS we're using is still in beta, let me try downgrading to the previous version."

I'm not saying it wasn't helpful. It probably cut 20% off the time it would have taken me to scaffold out the app myself, which is significant. But it certainly couldn't keep track of the context provided by the repo, even though it was creating that context itself.

Working with Copilot is like working with a very talented and fast junior developer whose methamphetamine addiction has been getting the better of them lately, and who has early onset dementia or a brain injury that destroyed their short-term memory.

From the article: "Even after completing the tasks with AI, the developers believed that they had decreased task times by 20%. But the study found that using AI did the opposite: it increased task completion time by 19%."

I'm not saying you didn't save time, but it's remarkable that the research shows that this perception can be false.
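To put rough numbers on that gap, here's a quick sketch. The 100-minute baseline is made up for illustration; only the 20% and 19% figures come from the article quote above.

```python
# Hypothetical baseline: minutes the task would take without AI.
# This number is an assumption for illustration, not from the study.
baseline_minutes = 100.0

# Figures quoted from the article.
perceived_speedup = 0.20   # developers believed AI cut task time by 20%
measured_slowdown = 0.19   # the study measured a 19% increase instead

# Time the developer thinks the task took vs. time it actually took.
perceived_minutes = baseline_minutes * (1 - perceived_speedup)
actual_minutes = baseline_minutes * (1 + measured_slowdown)

# How much longer the task really took than the developer believed.
gap_minutes = actual_minutes - perceived_minutes
gap_ratio = actual_minutes / perceived_minutes

print(f"perceived: {perceived_minutes:.0f} min, actual: {actual_minutes:.0f} min")
print(f"gap: {gap_minutes:.0f} min ({gap_ratio:.2f}x the perceived time)")
```

So on a task like that, the developer would walk away thinking it took about 80 minutes when it really took about 119, which is a much bigger miss than either percentage suggests on its own.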

-

Most IDEs have done the boring stuff with templates and code generation for like a decade, so that's not so helpful to me either, but if it works for you.

Yeah but I find code generation stuff I've used in the past takes a significant amount of configuration, and will often generate a bunch of code I don't want it to, and not in the way I want it. Many times it's more trouble than it's worth. Having an LLM do it means I don't have to deal with configuring anything and it's generating code for the specific thing I want it to so I can quickly validate it did things right and make any additions I want because it's only generating the thing I'm working on that moment. Also it's the same tool for the various languages I'm using so that adds more convenience.

Yeah, if you have your IDE set up with tools to analyze the datasource and it does what you want it to do, that may work better for you. But with the number of DBs I deal with, I'd be spending more time setting up code generation than actually writing code.

-

This was the case a year or two ago but now if you have an MCP server for docs and your project and goals outlined properly it's pretty good.

Not to sound like one of the ads or articles, but I vibe coded an iOS app in like 6 hours. It's not so complex that I don't understand it, it's multifeatured, I learned a LOT, and I got a useful thing instead of doing a tutorial with a sample project. I don't regret having that tool. I do regret the lack of any control and oversight and public ownership of this technology, but that's the timeline we're on. Let's not pretend it's gay space communism (sigh), but since AI is probably driving my medical care decisions at the insurance company level, I might as well get something to play with.

-

They're also bad at that though, because if you don't know that stuff then you don't know if what it's telling you is right or wrong.

I...think that's their point. The only reason it seems good is because you're bad and can't spot that it is bad, too.

-

I have limited AI experience, but so far that's what it means to me as well: helpful in very limited circumstances.

Mostly, I find it useful for "speaking new languages" - if I try to use AI to "help" with the stuff I have been doing daily for the past 20 years? Yeah, it's just slowing me down.

And the only reason it's not slowing you down on other things is that you don't know enough about those other things to recognize all the stuff you need to fix.

-

I actively hate the term "vibe coding." The fact is, while using an LLM for certain tasks is helpful, trying to build out an entire, production-ready application just by prompts is a huge waste of time and is guaranteed to produce garbage code.

At some point, people like your coworker are going to have to look at the code and work on it, and if they don't know what they're doing, they'll fail.

I commend them for giving it a shot, but I also commend them for recognizing it wasn't working.

I think the term pretty accurately describes what is going on: they don't know how to code, but they do know what correct output for a given input looks like, so they iterate with the LLM until they get what they want. The coding here is based on vibes (does the output feel correct?) instead of logic.

I don't think there's any problem with the term, the problem is with what's going on.

-

Yeah... It's useful for summarizing searches but I'm tempted to disable it in VSCode because it's been getting in the way more than helping lately.

-

Interesting idea… we actually have a plan to go public in a couple years and I’m holding a few options, but the economy is hitting us like everyone else. I’m no longer optimistic we can reach the numbers for those options to activate

Always keep an open mind. I stuck around in my first job until the sad and pathetic end for everyone, and when I finally did start looking the economy was worse than it had been when the writing was first on the wall.

-

I...think that's their point. The only reason it seems good is because you're bad and can't spot that it is bad, too.

To be fair, when you're in Gambukistan and you don't even know what languages are spoken, a smartphone can bail you out and get you communicating basic needs much faster and better than waving your hands and speaking English LOUDLY AND S L O W L Y . A good human translator you can trust should be better, depending on their grasp of English, but there's another point... who do you choose to pick your hotel for you? Google, or a local kid who spotted you from across the street and ran over to "help you out"? That's a tossup: both are out to make a profit out of you, but which one is likely to hurt you more?

-

I work for an adtech company and I'm pretty much the only developer for the JavaScript library that runs on client sites and shows our ads. I don't use AI at all because it keeps generating crap.

I have to use it for work by mandate, and overall I hate it. Sometimes it can speed up certain aspects of development, especially if the domain is new or the project is small, but these gains are temporary. They steal time from the learning that I would be doing during development and push that back to later in the process, and they are nowhere near good enough to make it so that I never have to do the learning at all.

-

I think the term pretty accurately describes what is going on: they don't know how to code, but they do know what correct output for a given input looks like, so they iterate with the LLM until they get what they want. The coding here is based on vibes (does the output feel correct?) instead of logic.

I don't think there's any problem with the term, the problem is with what's going on.

That's fair. I guess what I hate is what the term represents, rather than the term itself.

-

For some of us that’s more useful. I’m currently playing a DevSecOps role and one of the defining characteristics is I need to know all the tools. On Friday, I was writing some Java modules, then some Groovy glue, then spent the afternoon writing a Python utility. While I’m reasonably good about jumping among languages and tools, those context switches are expensive. I definitely want AI help with that.

That being said, AI is just a step up from search or autocomplete; it’s not magical. I’ve had the most luck with it generating unit tests, since they tend to be simple and repetitive (also a major place for the juniors to screw up: AI doesn’t know whether the slop it’s pumping out is useful. You do need to guide it and understand it, and you really need to cull the dreck).

I think about how much the planet is heating up because people like me are a little too lazy to be competent. I am glad my nieces and nephews get to pay the price we are raising every day on their behalf, to improve their world supposedly with our extra productivity, right?

-

That's kind of outside the software development discussion but glad you're enjoying it.

Ah. True.

I realise it now.

-

Did you not read what I wrote?

Inflation went up due to the knock-on effects of the sanctions. Specifically prices for oil and gas skyrocketed.

And since everything runs on oil and gas, all prices skyrocketed.

Covid stimulus packages had nothing to do with that, especially in 2023, 2024 and 2025, when there were no COVID stimulus packages, yet the inflation was much higher than at any time during COVID.

Surely it is not too much to ask that people remember what year stuff happened in, especially if we are talking about things that happened just 2 years ago.

Yes I read what you wrote - most of it makes sense. I guess I never associated the interest rates going up with Ukraine, I always thought they were a response to the economy slowly getting better and worries of inflation caused by the 2020-2021 stimulus packages. Aka Biden was trying to prevent excessive inflation as the stimulus packages bore fruit (which obviously didn't work). But I do remember the interest rates being one of the big drivers of the layoffs once the tech companies no longer had near-infinite near-interest-free capital.

-
