OpenAI is storing deleted ChatGPT conversations as part of its NYT lawsuit

-

The only thing I can tell is that they were already saving the chats of personal accounts but their SLAs prevent them from doing so with some corporate accounts. Apparently there is some concern that proprietary information will now be made part of a public case. Personally I feel like that’s the price of being an early adopter of something most people said was a bad idea but what do I know?

Well, if classified information from government agencies comes to light in this case, there will be problems. The same goes for important companies.

-

Well, if classified information from government agencies comes to light in this case, there will be problems. The same goes for important companies.

If that happened, wouldn't the judge just dismiss/banhammer the evidence from the case somehow? (IDK, IANAL)

-

From what I gather, a company is being asked to retain potential evidence during a lawsuit involving said data. Am I missing something? What’s outside the norm here?

We specifically have an enterprise contract (in the EU), checked by our lawyers, that says they can’t store our data or use it for training.

This decision goes against that contract.

-

We specifically have an enterprise contract (in the EU), checked by our lawyers, that says they can’t store our data or use it for training.

This decision goes against that contract.

so they never should have persisted that data to begin with, right? and if they didn't persist it, they wouldn't need to retain it

-

so they never should have persisted that data to begin with, right? and if they didn't persist it, they wouldn't need to retain it

I mean, it's more complicated than that.

Of course, data is persisted somewhere, in a transient fashion, for the purpose of computation. Especially when using event based or asynchronous architectures.

And then promptly deleted or otherwise garbage collected in some manner (either actively or passively, usually passively). It could be in transitory memory, or it could be on high speed SSDs during any number of steps.

It's also extremely common for data to be stored at a caching layer without violating requirements that data not be retained, since those caches are transient. Not to mention the reduced-rate "bulk" asynchronous APIs, which use idle, cheap compute to do work in a non-guaranteed amount of time and so require some level of storage until the data can be processed.

A court order forcing them to start storing this data is a problem. It doesn't mean they already had it stored in an archival format somewhere, it means they now have to store it somewhere for long term retention.
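To make the distinction concrete, here is a toy sketch in Python. Everything in it is hypothetical (the `TransientStore` class, the `legal_hold` flag, the TTL value); it says nothing about how OpenAI's systems actually work. It only illustrates the difference between data that sits in a cache until a sweep removes it and data that must be kept because a hold switches the sweep off.

```python
import time


class TransientStore:
    """Toy in-memory store: entries expire after a TTL unless a hold is active."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so the behaviour can be tested
        self.legal_hold = False     # hypothetical flag standing in for a court order
        self._entries = {}          # key -> (stored_at, value)

    def put(self, key, value):
        self._entries[key] = (self.clock(), value)

    def sweep(self):
        """Drop expired entries and return how many were removed."""
        if self.legal_hold:
            # Under a hold nothing is deleted, so "transient" data accumulates.
            return 0
        now = self.clock()
        expired = [k for k, (stored_at, _) in self._entries.items()
                   if now - stored_at >= self.ttl]
        for key in expired:
            del self._entries[key]
        return len(expired)

    def __len__(self):
        return len(self._entries)
```

In normal operation `sweep()` keeps the store small. Once `legal_hold` is set, the same store turns into long-term retention, and that is the part that needs new storage and new process.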

-

I mean, it's more complicated than that.

Of course, data is persisted somewhere, in a transient fashion, for the purpose of computation. Especially when using event based or asynchronous architectures.

And then promptly deleted or otherwise garbage collected in some manner (either actively or passively, usually passively). It could be in transitory memory, or it could be on high speed SSDs during any number of steps.

It's also extremely common for data to be stored at a caching layer without violating requirements that data not be retained, since those caches are transient. Not to mention the reduced-rate "bulk" asynchronous APIs, which use idle, cheap compute to do work in a non-guaranteed amount of time and so require some level of storage until the data can be processed.

A court order forcing them to start storing this data is a problem. It doesn't mean they already had it stored in an archival format somewhere, it means they now have to store it somewhere for long term retention.

I think it's debatable whether storing in volatile memory is persisting, but ok. And by debatable I mean depends on what is happening exactly.

A court order forcing them to no longer garbage collect or delete data used for processing is a problem.

what, are they going to do memory dumps before every free() call?

-

If that happened, wouldn't the judge just dismiss/banhammer the evidence from the case somehow? (IDK, IANAL)

The judge can declare certain evidence confidential, keep it out of the public record, or restrict it to attorneys' eyes only. But if the material requires a high-level security clearance, the judge may not even be able to see it. So who knows!

-

I think it's debatable whether storing in volatile memory is persisting, but ok. And by debatable I mean depends on what is happening exactly.

A court order forcing them to no longer garbage collect or delete data used for processing is a problem.

what, are they going to do memory dumps before every free() call?

I mean, at this point you're just being intentionally obtuse, no? You are correct, of course: from a system point of view, volatile memory would be pretty asinine to try and store.

However, we're not really looking at this from a system point of view, are we? Clearly you ignored all the other examples I provided just to latch on to the memory argument. There are many other ways that this data could be stored in a transient fashion.

-

The only thing I can tell is that they were already saving the chats of personal accounts but their SLAs prevent them from doing so with some corporate accounts. Apparently there is some concern that proprietary information will now be made part of a public case. Personally I feel like that’s the price of being an early adopter of something most people said was a bad idea but what do I know?

I mean, ChatGPT will straight-up insist this won’t happen. So no, it’s not the price of being an early adopter.

-

I mean, ChatGPT will straight-up insist this won’t happen. So no, it’s not the price of being an early adopter.

If you are taking business advice from ChatGPT that includes purchasing a ChatGPT subscription, or you can’t be bothered to look up how it works beforehand, then your business is probably going to fail.

-

It Took Many Years And Billions Of Dollars, But Microsoft Finally Invented A Calculator That Is Wrong Sometimes

Technology 1

1

-

-

-

-

-

-

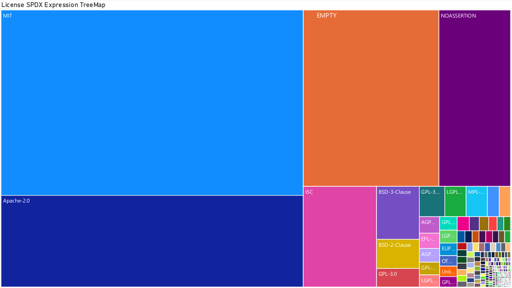

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1

-

Apple business executives ban Fortnite from iOS. People around the world - including in Europe - say their iPhone is preventing them from playing the videogame.

Technology1