An earnest question about the AI/LLM hate

-

Hello, recent Reddit convert here and I'm loving it. You even inspired me to figure out how to fully dump Windows and install LineageOS.

One thing I can't understand is the level of acrimony toward LLMs. I see things like "stochastic parrot", "glorified autocomplete", etc. If you need an example, the comments section for the post on Apple saying LLMs don't reason is a doozy of angry people: https://infosec.pub/post/29574988

While I didn't expect a community of vibecoders, I am genuinely curious about why LLMs provoke such an emotional response from this crowd. It's a tool that has gone from interesting (GPT-3) to terrifying (Veo 3) in a few years, and I am personally concerned about many of the future safety/control issues.

So I ask: what is the real reason this is such an emotional topic for you in particular? My personal guess is that the claims about replacing software engineers are the biggest issue, but help me understand.

I think a lot of it is anxiety: being replaced by AI, the continued enshittification of the services I loved, and the ever-present notion that AI is "the answer." After a while it gets old, and that anxiety mixes with annoyance -- a perfect cocktail of animosity.

And AI stole em dashes from me, but that's a me-problem.

-

It's a hugely disruptive technology that is harmful to the environment, and it's being taken up and given center stage by a host of folk who don't understand it.

Like the industrial revolution, it has the chance to change the world in a massive way, but in doing so it's going to fuck over a lot of people and notch up greenhouse gas output. In a decade or two we probably won't remember what life was like without it, but lots of people are going to lose their jobs and have their income streams cut off, with no alternatives available to them whilst that happens.

And whilst all of that is going on, we're being told that it's the best, most amazing thing that we all need, and it's being stuck into everything, including things that don't benefit from the presence of an LLM and, sometimes, places where the presence of an LLM can be actively harmful.

-

I know there's people who could articulate it better than I can, but my logic goes like this:

- Loss of critical thinking skills: This doesn't just apply to someone working on a software project they don't really care about. Lots of coders start in their bedroom with Notepad and some curiosity. If Copilot interrupts you with mediocre but working code, you never get the chance to learn how to solve a problem for yourself.

- Style: code spat out by AI has a very specific style, and no amount of prompt modifiers will come up with the kind of code someone designing for speed or low memory usage would produce: nearly impossible to read, but solving for a very specific case.

- If everyone is a coder, no one is a coder: If everyone can claim to be a coder on paper, it will be harder to find good coders. Sure, you can make every applicant do FizzBuzz or a basic sort, but that does not give them a good opportunity to show they can actually solve a problem. It will also discourage people from becoming coders in the first place. A lot of companies can actually get by with vibe coders (at least for a while), and that dries up the market for the junior positions people need to get better and be promoted.

- When the code breaks, it takes a lot longer to understand and fix if you don't know how any of it works. It's worse still when you don't even bother designing or completing a test plan because Cursor developed one, its tests all came back green, it pushed the change during a convenient downtime, and it archived all the old versions in its own internal logical structure that can't easily be undone.

Edits: Minor clarification and grammar.

-

Hello, recent Reddit convert here and I'm loving it. You even inspired me to figure out how to fully dump Windows and install LineageOS.

I am truly impressed that you managed to replace a desktop operating system with a mobile OS that doesn't even come in an x86 variant (Lineage, that is; I'm aware Android has been ported).

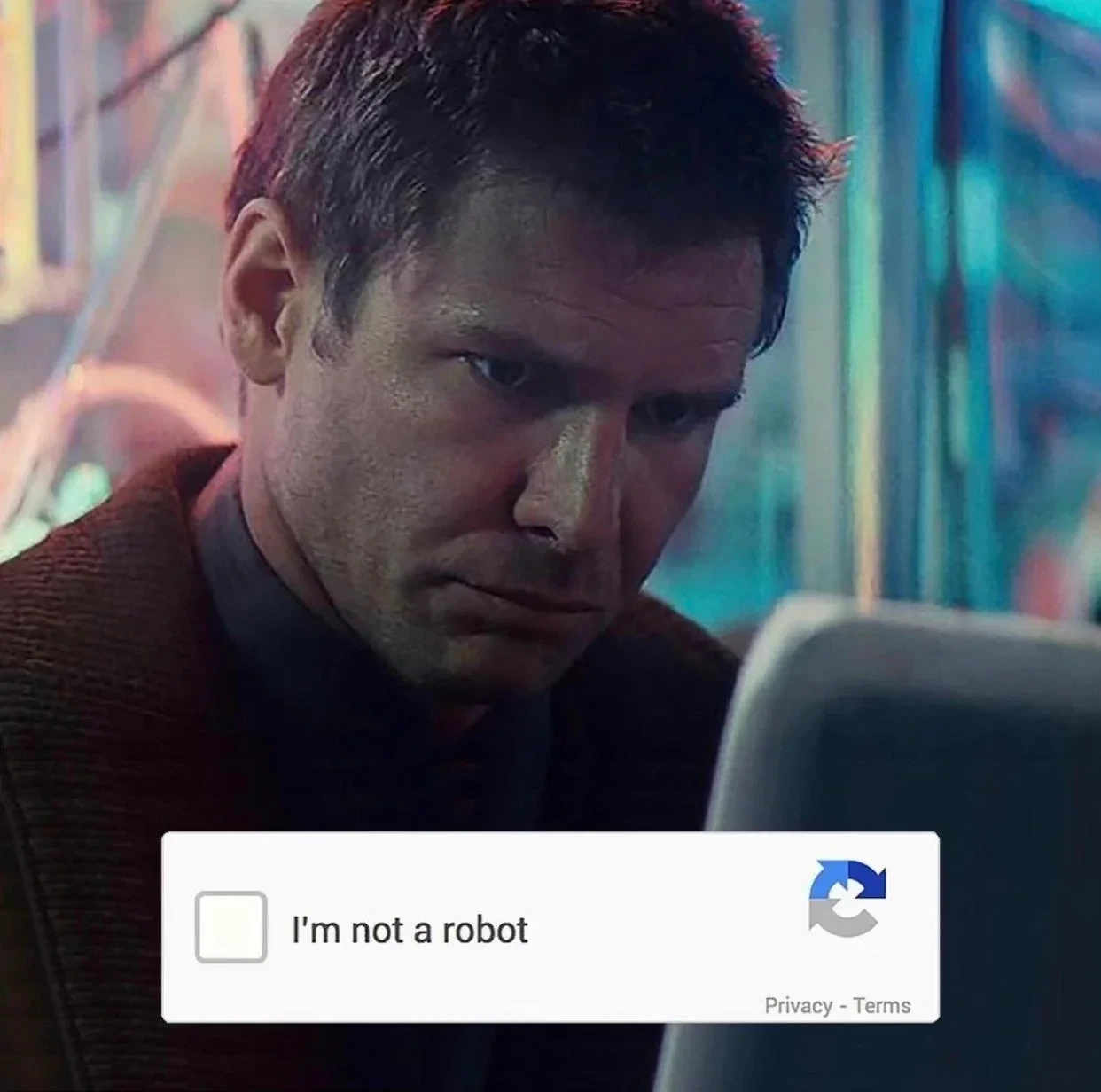

I smell bovine faeces. Or are you, in fact, an LLM?

-

I’m not part of the hate crowd but I do believe I understand at least some of it.

A fairly big issue I see with it is that people just don’t understand what it is. Too many people see it as some magical being that knows everything…

I’ve played with LLMs a lot, hosting them locally, etc., and I can’t say I find them terribly useful, but I wouldn’t hate them for what they are. There are more than enough real issues, of course, both societal and environmental.

One thing I do hate is using LLMs to generate tons of online content, though, be it comments or entire websites. That’s just not what I’m on the internet for.

-

To me, it's not the tech itself, it's the fact that it's being pushed as something it most definitely isn't. They're grifting hard to stuff an incomplete feature down everyone's throats, while using it to datamine the everloving spit out of us.

Truth be told, I'm genuinely excited about the concept of AGI and the potential of what we're seeing now. I also believe AGI will ultimately be a kind of progeny and should be treated as such, as a being in itself. While we aren't capable of creating that yet, we should still keep it in mind and mould our R&D around that principle. So, in addition to being disgusted by the current-day grift, I'm also deeply disappointed to see these people behaving like madmen and cultists. And as a further note, looking at our species' approach toward anything it sees as Other doesn't make me think humanity, as we are now, would be adequate parents for any type of AGI either.

The people who own/drive the development of AI/LLM/what-have-you (the main ones, at least) are the kind of people who would cause the AI apocalypse. That's my problem.

-

For me personally, the problem is not so much LLMs and/or ML solutions (both of which I actively use), but the fact that this industry is largely led by American tech oligarchs. Not only are they profoundly corrupt and almost comically dishonest, but they are also true degenerates.

-

I think a lot of it is anxiety: being replaced by AI, the continued enshittification of the services I loved, and the ever-present notion that AI is "the answer." After a while it gets old, and that anxiety mixes with annoyance -- a perfect cocktail of animosity.

And AI stole em dashes from me, but that's a me-problem.

Yeah, fuck this thing with em dashes… I used them constantly, but now, it’s a sign something was written by an LLM!??!?

Bunshit.

-

Hello, recent Reddit convert here and I'm loving it. You even inspired me to figure out how to fully dump Windows and install LineageOS.

I am truly impressed that you managed to replace a desktop operating system with a mobile OS that doesn't even come in an x86 variant (Lineage, that is; I'm aware Android has been ported).

I smell bovine faeces. Or are you, in fact, an LLM?

Lineage sounds a lot like "Linux." Take it easy on the lad.

-

I personally just find it annoying how it's shoehorned into everything, whether or not it makes sense for it to be there, without the option to turn it off.

I also don't find it helpful for most things I do.

-

My hypothesis from the start is that people were on a roll with the crypto hate (which was a lot less ambiguous, since there were fewer legitimate applications there).

Then the AI gold rush hit, and both investors and haters smoothly rolled onto that and carried over a lot of the same discourse. It helps that AIbros overhyped the crap out of the tech, but the carryover hate was also entirely unwilling to acknowledge any kind of nuance from the get-go.

So now you have a bunch of people with significant emotional capital baked into the idea that genAI is fundamentally a scam and/or a world-destroying misstep, and who have a LOT of face to lose by conceding even a sliver of usefulness or legitimacy to the thing. They are not entirely right... but not entirely wrong, either, so there you go: the perfect recipe for an eternal culture war.

Welcome to discourse and public opinion in the online age. It kinda sucks.

-

To me, it's not the tech itself, it's the fact that it's being pushed as something it most definitely isn't. They're grifting hard to stuff an incomplete feature down everyone's throats, while using it to datamine the everloving spit out of us.

Truth be told, I'm genuinely excited about the concept of AGI and the potential of what we're seeing now. I also believe AGI will ultimately be a kind of progeny and should be treated as such, as a being in itself. While we aren't capable of creating that yet, we should still keep it in mind and mould our R&D around that principle. So, in addition to being disgusted by the current-day grift, I'm also deeply disappointed to see these people behaving like madmen and cultists. And as a further note, looking at our species' approach toward anything it sees as Other doesn't make me think humanity, as we are now, would be adequate parents for any type of AGI either.

The people who own/drive the development of AI/LLM/what-have-you (the main ones, at least) are the kind of people who would cause the AI apocalypse. That's my problem.

Agreed. The last people in the world who should be making AGI are the ones making it: rabid techbro nazi capitalist fucktards who feel slighted that they missed out on (absolute, not wage) slaves and want to make some. Do you want Terminators? Because that's how you get Terminators. Something with so much positive potential that is also an existential threat needs to be treated with far more respect.

-

Emotional? No. Rational.

The use of AI is proving to be a bad idea for many reasons that have been raised by people who study this kind of thing. Nothing I can tell you has any more validity than the experts' opinions. Go see.

-

Hello, recent Reddit convert here and I'm loving it. You even inspired me to figure out how to fully dump Windows and install LineageOS.

I am truly impressed that you managed to replace a desktop operating system with a mobile OS that doesn't even come in an x86 variant (Lineage, that is; I'm aware Android has been ported).

I smell bovine faeces. Or are you, in fact, an LLM?

Calm down. They never said anything about the two things happening on the same device.

-

We're outsourcing thinking to a bullshit generator controlled by mostly American mega-corporations who have repeatedly demonstrated that they want to do us harm, burning through scarce resources and rendering creative humans robbed and unemployed in the process.

What's not to hate?

-

Lineage sounds a lot like "Linux." Take it easy on the lad.

Could also be two separate things? I have a) dumped Windows and b) installed Lineage.

-

Okay, so imagine for a second that somebody just invented voice-to-text, and everyone trying to sell it to you lies about it, claiming it can read your thoughts and that nobody will ever type anything manually again.

The people trying to sell us LLMs lie about how they work and what they actually do. They generate text that looks like a human wrote it. That's all they do. There are some interesting attributes of this behavior, namely that when prompted with text that's a question, the LLM will usually generate text that turns out to be an answer. The LLM doesn't understand any part of this process any better than your phone's autocorrect does; it's just really good at generating text that looks like what it has seen in training.

Depending on what exactly you want this thing to do, it can be extremely useful or a complete scam. Take code generation, for example. By and large they generate mostly okay code; I'd say they tend to be slightly worse than a competent human. Genuinely really impressive for what it is, but it's not revolutionary. Basically, the only real use case for this tech so far has been glorified autocomplete.

It's kind of like NFTs or crypto at large: there is actual utility there, but nobody who's trying to sell the idea to you is actually involved in or cares about that part. They just want to trick you into becoming their new money printer.

-

Hello, recent Reddit convert here and I'm loving it. You even inspired me to figure out how to fully dump Windows and install LineageOS.

I am truly impressed that you managed to replace a desktop operating system with a mobile OS that doesn't even come in an x86 variant (Lineage, that is; I'm aware Android has been ported).

I smell bovine faeces. Or are you, in fact, an LLM?

He dumped Windows (for Linux) and installed LineageOS (on his phone).

OP likely has two devices.

-

Fraking toaster…