Google confirms more ads on your paid YouTube Premium Lite soon

-

This post did not contain any content.

-


Premium Lite? What paid benefits are there to YouTube other than removing ads?

-

Premium Lite? What paid benefits are there to YouTube other than removing ads?

YouTube Music. But since Lite has ads, idk how it's different. I'm assuming Lite also doesn't have music.

-


WTF is Premium Lite?

-


This is literally the equivalent of cable.

-


It will literally never end.

This is why anyone who was excusing advertising at all is part of the problem and should be seen as a useful idiot.

Stop lowering your standards so people richer than you can be even richer.

-

YouTube Music. But since Lite has ads, idk how it's different. I'm assuming Lite also doesn't have music.

YouTube already has music.

Just pick your song and listen to it...

-

WTF is Premium Lite?

Premium but without the music. And with ads apparently.

-


No; I don't think there will be.

-


Christ, Premium Lite is still fucking 8 dollars a month and you don't even get rid of ads. The greed is fucking astounding.

I'll stick to an adblocker, dickheads.

-

WTF is Premium Lite?

I guess it’s their “pay for ads” tier which sounds fucking stupid to me.

-

YouTube already has music.

Just pick your song and listen to it...

I remember when YouTube Music first came out it was pretty much just the audio from the videos in the most atrocious quality. Has it changed since then?

-

YouTube already has music.

Just pick your song and listen to it...

YouTube Music is better than music on YouTube, particularly for people with slow or capped internet.

-

WTF is Premium Lite?

It's Premium, but not really.

-

YouTube Music is better than music on YouTube, particularly for people with slow or capped internet.

How? I use my phone for internet and don't have any issues.

-


Isn’t this because the music industry insisted on taking a cut of all YouTube shorts revenue? Like even if their songs aren’t getting played, they still rake in money from views.

-

How? I use my phone for internet and don't have any issues.

You're not necessarily streaming video on YT Music, just audio.

-

This is literally the equivalent of cable.

No, it's worse. With a cable DVR you can skip all commercials.

-

WTF is Premium Lite?

Premium Sike.

-


Or, and hear me out: just install a browser plugin and forget ads exist.

I hesitate to name extension names, but I’ve literally never seen an ad on YouTube.

These companies don’t respect us, so I don’t feel the need to respect them by allowing them to force their meaningless bullshit down my throat.

I don’t use it that much, but you better believe if I go to YouTube to watch a video, that video is the only thing I’m watching.

-

-

-

-

-

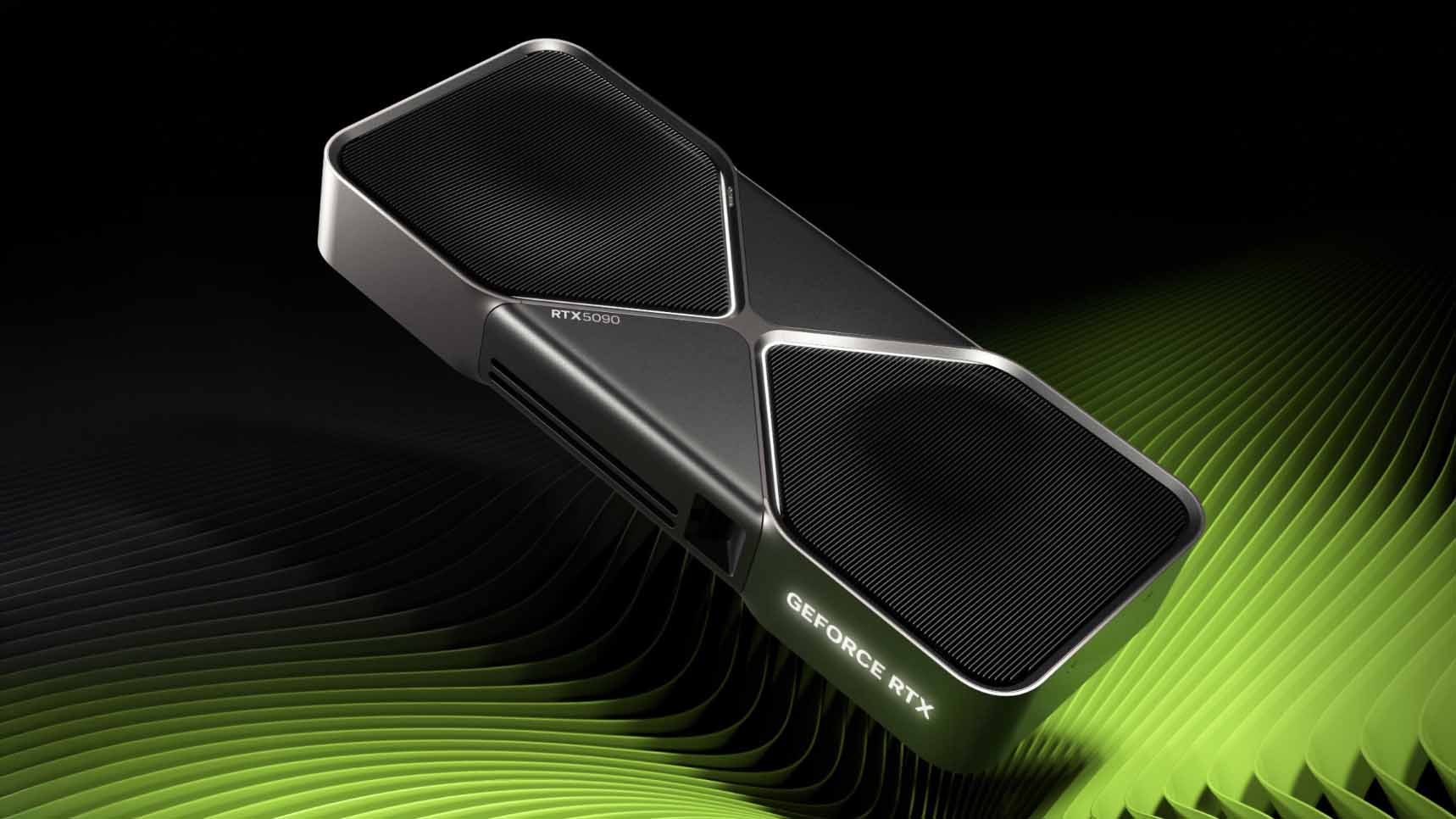

Prototype of RTX 5090 Appears With Four 16-Pin Power Connectors, Capable of Delivering 2,400W

Technology 1

1

-

Why does digital violence against LGBTI people in Thailand and Taiwan continue even after marriage equality?

Technology 1

1

-

-

Rebecca Shaw: I knew one day I’d have to watch powerful men burn the world down. But I didn't expect them to be such losers.

Technology 1

1