Jack Dorsey just announced Bitchat (a secure, decentralized, peer-to-peer messaging app for iOS and macOS that works over Bluetooth mesh networks), released into the public domain.

-

I don't trust Jack. But this does seem marginally interesting. Actually decentralized, supposedly with no servers. We'll have to see. Again, I sure as hell am not going to trust Dorsey. And he's put it under some cringey edgelord "Unlicense" license, which basically appears to be MIT with a different name. The actual concept seems intriguing, but there's definitely nothing to get excited about yet.

youtube-dl and yt-dlp are under the Unlicense. It's just boilerplate legalese for public domain.

-


Bitch At

Lmao

-

Briar if you're on Android

My mobile stuff is on Android, but Briar desktop (despite being a Java application?) complains about "unknown OS FreeBSD" and doesn't run.

-

Briar is the much better and much more mature version of this.

I actually liked the way this particular thing works, I've visited the repository and it's much like a real version of my toy of two months. (Except my toy doesn't work for anything real)

-

My mobile stuff is on Android, but Briar desktop (despite being a Java application?) complains about "unknown OS FreeBSD" and doesn't run.

Sorry, I'm not a dev on the project, just have an interest in secure communications.

-

Sorry, I'm not a dev on the project, just have an interest in secure communications.

Yes, I didn't think you were; I just shared... In any case, under the Linuxulator with a Linux JRE it complains a lot, but seems to work.

-

"IRC vibes" -> maybe intended, see BitchX.

Just realized that could be read as "bit chicks", which would explain such a name choice for an IRC client in the times when there actually were some bit chicks on popular IRC channels.

-

I wanted something like this for weddings or group camping events, for sharing photos with multiple people at once.

Isn't that what QuickShare and AirDrop solve?

-

Where my bitchat

Smack by Bitchup

-

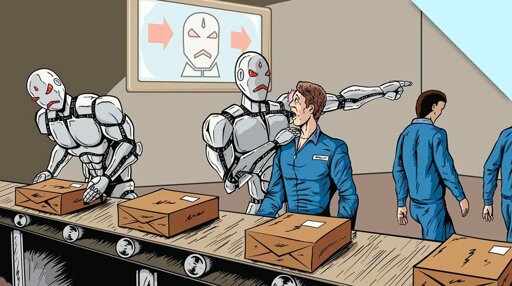

messaging app that works over Bluetooth mesh networks. No internet

So he's made a shitty version of Briar and crammed crypto into it?

Briar doesn’t have an iOS client and never will

This doesn’t have an android client

-

Isn't that what QuickShare and AirDrop solve?

And LocalSend

-

Oh great, yet another secure messaging app.

Getting people to move off Messenger or even WhatsApp is tricky enough already due to inertia and resistance to change. But even when you can coax them to move, you often end up in a debate about where to move to: Signal, Briar, Viber, whatever proprietary thing Apple is currently pushing, or the thousands of other options. I guess we can just add this one to that long list.

This is nothing like the ones you listed; this is local-only, no internet.

-

It's definitely limiting. A LoRa mesh network is more useful, but most people don't have a LoRa-capable device. I could see something like this being used at a protest or public event, at least. With enough nodes in the area, the network could span hundreds to thousands of feet under the right conditions. But that's a big ask at the moment.

Meshtastic requires bespoke hardware, it’ll always stay a marginal tool

This requires: an iPhone.

And someone will make a bridge from this to Meshtastic in a while anyway

-

Briar doesn’t have an iOS client and never will

This doesn’t have an android client

Like I said, Briar is better

-

This is nothing like the ones you listed; this is local-only, no internet.

Okay. But one of my points still stands: there are already a bunch of P2P Bluetooth-based messaging apps out there.

-

Smack by Bitchup

Move bitch get out the way

-

And LocalSend

QuickShare, AirDrop and LocalSend all use WiFi, which can be a problem when using a VPN (it is for me).

-

Okay. But one of my points still stands: there are already a bunch of P2P Bluetooth-based messaging apps out there.

And more is better: people get used to using them and skip the telcos and anything else that can be tracked.

-

Isn't that what QuickShare and AirDrop solve?

AirDrop is two people at a time. Say we had a group of 8 people; I don’t want to do 7 AirDrop exchanges to get all the photos.

-

QuickShare, AirDrop and LocalSend all use WiFi, which can be a problem when using a VPN (it is for me).

Turn it off temporarily?