Judge Rules Training AI on Authors' Books Is Legal But Pirating Them Is Not

-

If a human did that it’s still plagiarism.

Oh I agree it should be, but following the judges ruling, I don't see how it could be. You trained an LLM on textbooks that were purchased, not pirated. And the LLM distributed the responses.

(Unless you mean the human reworded them, then yeah, we aren't special apparently)

-

So I can't use any of these works because it's plagiarism but AI can?

Why would it be plagiarism if you use the knowledge you gain from a book?

-

Does buying the book give you license to digitise it?

Does owning a digital copy of the book give you license to convert it into another format and copy it into a database?

Definitions of "Ownership" can be very different.

You can digitize the books you own. You do not need a license for that. And of course you could put that digital format into a database. As databases are explicit exceptions from copyright law. If you want to go to the extreme: delete first copy. Then you have only in the database. However: AIs/LLMs are not based on data bases. But on neural networks. The original data gets lost when "learned".

-

Oh I agree it should be, but following the judges ruling, I don't see how it could be. You trained an LLM on textbooks that were purchased, not pirated. And the LLM distributed the responses.

(Unless you mean the human reworded them, then yeah, we aren't special apparently)

Yes, on the second part. Just rearranging or replacing words in a text is not transformative, which is a requirement. There is an argument that the ‘AI’ are capable of doing transformative work, but the tokenizing and weight process is not magic and in my use of multiple LLM’s they do not have an understanding of the material any more then a dictionary understands the material printed on its pages.

An example was the wine glass problem. Art ‘AI’s were unable to display a wine glass filled to the top. No matter how it was prompted, or what style it aped, it would fail to do so and report back that the glass was full. But it could render a full glass of water. It didn’t understand what a full glass was, not even for the water. How was this possible? Well there was very little art of a full wine glass, because society has an unspoken rule that a full wine glass is the epitome of gluttony, and it is to be savored not drunk. Where as the reference of full glasses of water were abundant. It doesn’t know what full means, just that pictures of full glass of water are tied to phrases full, glass, and water.

-

If what you are saying is true, why were these ‘AI’s” incapable of rendering a full wine glass? It ‘knows’ the concept of a full glass of water, but because of humanities social pressures, a full wine glass being the epitome of gluttony, art work did not depict a full wine glass, no matter how ai prompters demanded, it was unable to link the concepts until it was literally created for it to regurgitate it out. It seems ‘AI’ doesn’t really learn, but regurgitates art out in collages of taken assets, smoothed over at the seams.

Copilot did it just fine

-

Formatting thing: if you start a line in a new paragraph with four spaces, it assumes that you want to display the text as a code and won't line break.

This means that the last part of your comment is a long line that people need to scroll to see. If you remove one of the spaces, or you remove the empty line between it and the previous paragraph, it'll look like a normal comment

With an empty line of space:

1 space - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

2 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

3 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.

4 spaces - and a little bit of writing just to see how the text will wrap. I don't really have anything that I want to put here, but I need to put enough here to make it long enough to wrap around. This is likely enough.Thanks, I had copy-pasted it from the website

-

Copilot did it just fine

1 it’s not full, but closer then it was.

- I specifically said that the AI was unable to do it until someone specifically made a reference so that it could start passing the test so it’s a little bit late to prove much.

-

But I thought they admitted to torrenting terabytes of ebooks?

Facebook (Meta) torrented TBs from Libgen, and their internal chats leaked so we know about that, and IIRC they've been sued. Maybe you're thinking of that case?

-

FTA:

Anthropic warned against “[t]he prospect of ruinous statutory damages—$150,000 times 5 million books”: that would mean $750 billion.

So part of their argument is actually that they stole so much that it would be impossible for them/anyone to pay restitution, therefore we should just let them off the hook.

What is means is they don't own the models. They are the commons of humanity, they are merely temporary custodians. The nightnare ending is the elites keeping the most capable and competent models for themselves as private play things. That must not be allowed to happen under any circumstances. Sue openai, anthropic and the other enclosers, sue them for trying to take their ball and go home. Disposses them and sue the investors for their corrupt influence on research.

-

And thus the singularity was born.

Yes please a singularity of intellectual property that collapses the idea of ownong ideas. Of making the infinitely freely copyableinto a scarce ressource. What corrupt idiocy this has been. Landlords for ideas and look what garbage it has been producing.

-

Yes, on the second part. Just rearranging or replacing words in a text is not transformative, which is a requirement. There is an argument that the ‘AI’ are capable of doing transformative work, but the tokenizing and weight process is not magic and in my use of multiple LLM’s they do not have an understanding of the material any more then a dictionary understands the material printed on its pages.

An example was the wine glass problem. Art ‘AI’s were unable to display a wine glass filled to the top. No matter how it was prompted, or what style it aped, it would fail to do so and report back that the glass was full. But it could render a full glass of water. It didn’t understand what a full glass was, not even for the water. How was this possible? Well there was very little art of a full wine glass, because society has an unspoken rule that a full wine glass is the epitome of gluttony, and it is to be savored not drunk. Where as the reference of full glasses of water were abundant. It doesn’t know what full means, just that pictures of full glass of water are tied to phrases full, glass, and water.

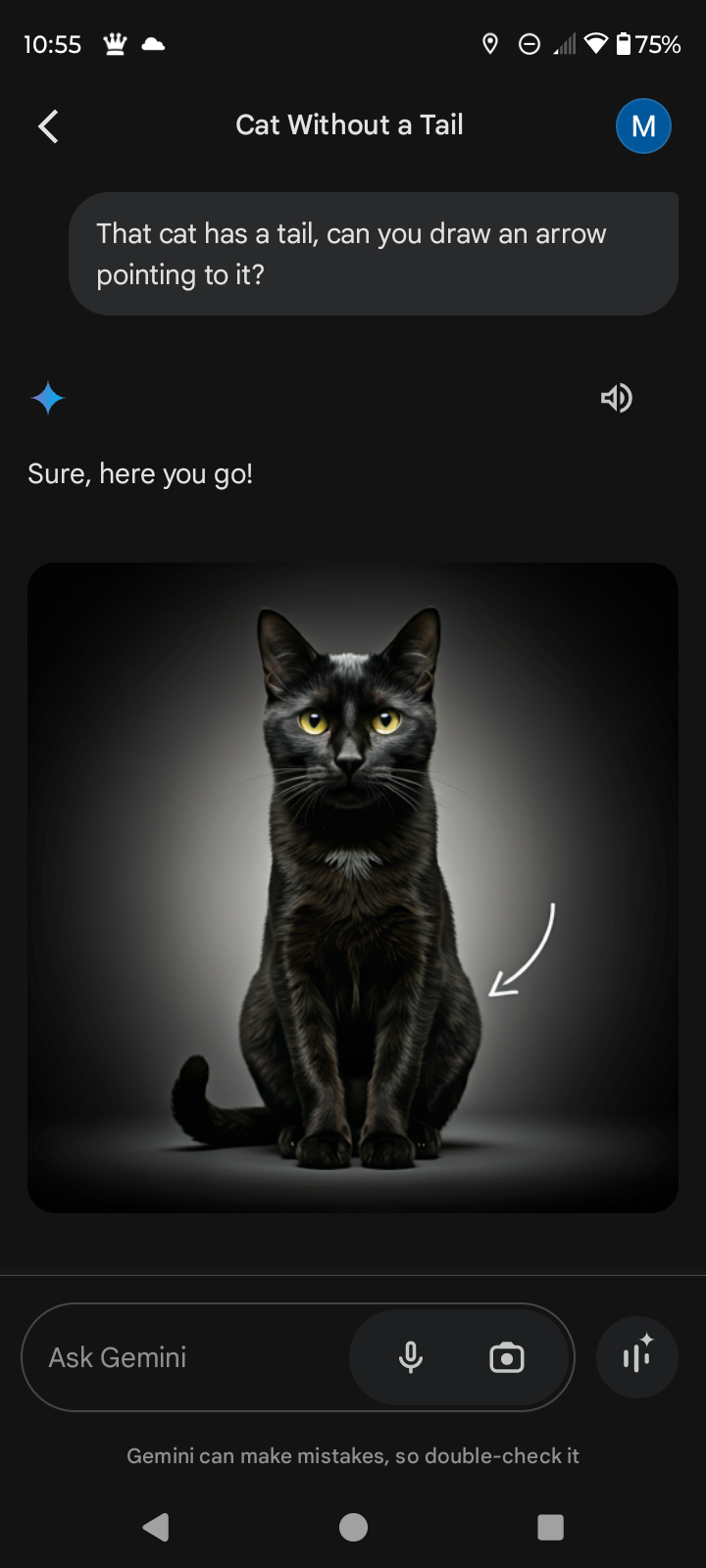

Yeah, we had a fun example a while ago, let me see if I can still find it.

We would ask to create a photo of a cat with no tail.

And then tell it there was indeed a tail, and ask it to draw an arrow to point to it.

It just points to where the tail most commonly is, or was said to be in a picture it was not referencing.

Edit: granted now, it shows a picture of a cat where you just can't see the tail in the picture.

-

Makes sense. AI can “learn” from and “read” a book in the same way a person can and does, as long as it is acquired legally. AI doesn’t reproduce a work that it “learns” from, so why would it be illegal?

Some people just see “AI” and want everything about it outlawed basically. If you put some information out into the public, you don’t get to decide who does and doesn’t consume and learn from it. If a machine can replicate your writing style because it could identify certain patterns, words, sentence structure, etc then as long as it’s not pretending to create things attributed to you, there’s no issue.

AI can “learn” from and “read” a book in the same way a person can and does,

If it's in the same way, then why do you need the quotation marks? Even you understand that they're not the same.

And either way, machine learning is different from human learning in so many ways it's ridiculous to even discuss the topic.

AI doesn’t reproduce a work that it “learns” from

That depends on the model and the amount of data it has been trained on. I remember the first public model of ChatGPT producing a sentence that was just one word different from what I found by googling the text (from some scientific article summary, so not a trivial sentence that could line up accidentally). More recently, there was a widely reported-on study of AI-generated poetry where the model was requested to produce a poem in the style of Chaucer, and then produced a letter-for-letter reproduction of the well-known opening of the Canterbury Tales. It hasn't been trained on enough Middle English poetry and thus can't generate any of it, so it defaulted to copying a text that probably occurred dozens of times in its training data.

-

As long as they don't use exactly the same words in the book, yeah, as I understand it.

How they don't use same words as in the book ? That's not how LLM works. They use exactly same words if the probabilities align. It's proved by this study. https://arxiv.org/abs/2505.12546

-

Copilot did it just fine

Bro are you a robot yourself? Does that look like a glass full of wine?

-

I am educated on this. When an ai learns, it takes an input through a series of functions and are joined at the output. The set of functions that produce the best output have their functions developed further. Individuals do not process information like that. With poor exploration and biasing, the output of an AI model could look identical to its input. It did not "learn" anymore than a downloaded video ran through a compression algorithm.

You are obviously not educated on this.

It did not “learn” anymore than a downloaded video ran through a compression algorithm.

Just: LoLz. -

Make an AI that is trained on the books.

Tell it to tell you a story for one of the books.

Read the story without paying for it.

The law says this is ok now, right?

The LLM is not repeating the same book. The owner of the LLM has the exact same rights to do with what his LLM is reading, as you have to do with what ever YOU are reading.

As long as it is not a verbatim recitation, it is completely okay.

According to story telling theory: there are only roughly 15 different story types anyway.

-

You are obviously not educated on this.

It did not “learn” anymore than a downloaded video ran through a compression algorithm.

Just: LoLz.I am not sure what your contention, or gotcha, is with the comment above but they are quite correct. And additionally chose quite an apt example with video compression since in most ways current 'AI' effectively functions as a compression algorithm, just for our language corpora instead of video.

-

Lawsuits are multifaceted. This statement isn't a a defense or an argument for innocence, it's just what it says - an assertion that the proposed damages are unreasonably high. If the court agrees, the plaintiff can always propose a lower damage claim that the court thinks is reasonable.

You’re right, each of the 5 million books’ authors should agree to less payment for their work, to make the poor criminals feel better.

If I steal $100 from a thousand people and spend it all on hookers and blow, do I get out of paying that back because I don’t have the funds? Should the victims agree to get $20 back instead because that’s more within my budget?

-

Does buying the book give you license to digitise it?

Does owning a digital copy of the book give you license to convert it into another format and copy it into a database?

Definitions of "Ownership" can be very different.

It seems like a lot of people misunderstand copyright so let's be clear: the answer is yes. You can absolutely digitize your books. You can rip your movies and store them on a home server and run them through compression algorithms.

Copyright exists to prevent others from redistributing your work so as long as you're doing all of that for personal use, the copyright owner has no say over what you do with it.

You even have some degree of latitude to create and distribute transformative works with a violation only occurring when you distribute something pretty damn close to a copy of the original. Some perfectly legal examples: create a word cloud of a book, analyze the tone of news article to help you trade stocks, produce an image containing the most prominent color in every frame of a movie, or create a search index of the words found on all websites on the internet.

You can absolutely do the same kinds of things an AI does with a work as a human.

-

That's legal just don't look at them or enjoy them.

Yeah, I don't think that would fly.

"Your honour, I was just hoarding that terabyte of Hollywood films, I haven't actually watched them."