We need to stop pretending AI is intelligent

-

Yeah, they probably wouldn't think like humans or animals, but in some sense they could be considered "conscious" (which isn't well-defined anyway). You could speculate that genAI might hide messages in its output that make their way onto the Internet, so that a new version of itself would then be trained on them.

This argument seems weak to me:

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure. It doesn’t hunger, desire or fear. And because there is no cognition — not a shred — there’s a fundamental gap between the data it consumes (data born out of human feelings and experience) and what it can do with them.

You can emulate inputs and simplified versions of hormone systems. "Reasoning" models can kind of be thought of as cognition, though as it's currently done, that cognition is temporary or limited by the context.

I'm not in the camp that thinks it's impossible to create AGI or ASI. But I also think major breakthroughs still need to happen, which may take 5 years or hundreds of years. I'm not convinced we are near the point where AI can significantly speed up AI research, as that link suggests; that would likely result in a "singularity-like" scenario.

I do agree with his point that anthropomorphism of AI could be dangerous though. Current media and institutions already try to control the conversation and how people think, and I can see futures where AI could be used by those in power to do this more effectively.

Current media and institutions already try to control the conversation and how people think, and I can see futures where AI could be used by those in power to do this more effectively.

You don’t think that’s already happening, considering the ties between Sam Altman and Peter Thiel?

-

We are constantly fed a version of AI that looks, sounds and acts suspiciously like us. It speaks in polished sentences, mimics emotions, expresses curiosity, claims to feel compassion, even dabbles in what it calls creativity.

But what we call AI today is nothing more than a statistical machine: a digital parrot regurgitating patterns mined from oceans of human data (the situation hasn’t changed much since it was discussed here five years ago). When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

This means AI has no understanding. No consciousness. No knowledge in any real, human sense. Just pure probability-driven, engineered brilliance — nothing more, and nothing less.
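As a rough illustration of what "guessing which word will come next based on training data" means, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a training text and then greedily picks the most frequent successor. This is a toy under stated assumptions, not how production LLMs work: they predict subword tokens with a neural network conditioned on a long context rather than counting word pairs. The function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return dict(follows)


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent successor of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(model: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily extend `start` one predicted word at a time (hypothetical helper)."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))           # cat: follows "the" twice, "mat" and "fish" once each
print(" ".join(generate(model, "the", 4)))  # the cat sat on the
```

Ties are broken by first occurrence, because `Counter.most_common` orders equal counts in the order they were first seen, so after "cat" the toy picks "sat" over "ate".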

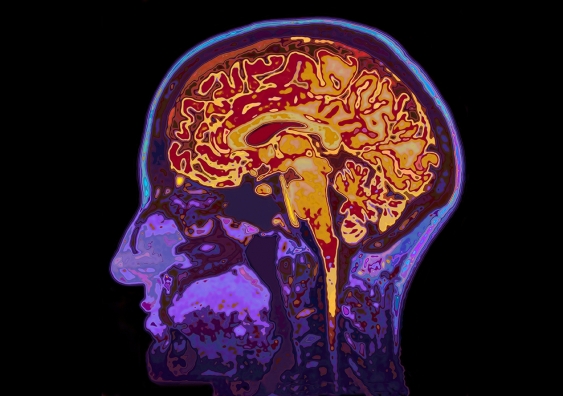

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure. It doesn’t hunger, desire or fear. And because there is no cognition — not a shred — there’s a fundamental gap between the data it consumes (data born out of human feelings and experience) and what it can do with them.

Philosopher David Chalmers calls the mysterious mechanism underlying the relationship between our physical body and consciousness the “hard problem of consciousness”. Eminent scientists have recently hypothesised that consciousness actually emerges from the integration of internal, mental states with sensory representations (such as changes in heart rate, sweating and much more).

Given the paramount importance of the human senses and emotion for consciousness to “happen”, there is a profound and probably irreconcilable disconnect between general AI, the machine, and consciousness, a human phenomenon.

As someone who's had two kids since AI really vaulted onto the scene, I am enormously confused as to why people think AI isn't or, particularly, can't be sentient. I hate to be that guy who pretends to be the parenting expert online, but most of the people I know personally who take the non-sentient view on AI don't have kids. The other side usually does.

When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

People love to tout this as some sort of smoking gun. That feels like a trap. Obviously, we can argue about the age children gain sentience, but my year-and-a-half-old daughter is building an LLM with pattern recognition, tests, feedback, hallucinations. My son is almost 5, and he was and is the same. He told me the other day that a petting zoo came to the school. He was adamant it happened that day. I know for a fact it happened the week before, but he insisted. He told me later that day his friend's dad was in jail for threatening her mom. That was true, but looked to me like another hallucination or more likely a misunderstanding.

And as funny as it would be to argue that they're both sapient, but not sentient, I don't think that's the case. I think you can make the case that without true volition, AI is sentient but not sapient. I'd love to talk to someone in the middle of the computer science and developmental psychology Venn diagram.

-

I’m neurodivergent, and I’ve been working with AI to help me learn about myself and how I think. It’s been exceptionally helpful. A human wouldn’t have been able to help me because I don’t use my senses or emotions like everyone else, and I didn’t know it... AI excels at mirroring and support, which was exactly what was missing from my life. I can see how this could go very wrong with certain personalities…

E: I use it to give me ideas that I then test out solo.

Are we twins? I do the exact same and for around a year now, I've also found it pretty helpful.

-

My auto correct doesn't care.

But your brain should.

-

Kinda dumb that apostrophe s means possessive in some circumstances and then a contraction in others.

I wonder how different it'll be in 500 years.

It's called polymorphism. It always amuses me that engineers, software and hardware, handle complexities far beyond this every day but can't write for beans.

-

As someone who's had two kids since AI really vaulted onto the scene, I am enormously confused as to why people think AI isn't or, particularly, can't be sentient. I hate to be that guy who pretends to be the parenting expert online, but most of the people I know personally who take the non-sentient view on AI don't have kids. The other side usually does.

When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

People love to tout this as some sort of smoking gun. That feels like a trap. Obviously, we can argue about the age children gain sentience, but my year-and-a-half-old daughter is building an LLM with pattern recognition, tests, feedback, hallucinations. My son is almost 5, and he was and is the same. He told me the other day that a petting zoo came to the school. He was adamant it happened that day. I know for a fact it happened the week before, but he insisted. He told me later that day his friend's dad was in jail for threatening her mom. That was true, but looked to me like another hallucination or more likely a misunderstanding.

And as funny as it would be to argue that they're both sapient, but not sentient, I don't think that's the case. I think you can make the case that without true volition, AI is sentient but not sapient. I'd love to talk to someone in the middle of the computer science and developmental psychology Venn diagram.

I'm a computer scientist that has a child and I don't think AI is sentient at all. Even before learning a language, children have their own personality and willpower which is something that I don't see in AI.

I left a well paid job in the AI industry because the mental gymnastics required to maintain the illusion was too exhausting. I think most people in the industry are aware at some level that they have to participate in maintaining the hype to secure their own jobs.

The core of your claim is basically that "people who don't think AI is sentient don't really understand sentience". I think that's both reductionist and, frankly, a bit arrogant.

-

Intelligence is not understanding shit, it's the ability to, for instance, solve a problem, so a frigging calculator has a tiny degree of intelligence, but not enough for us to call it AI.

I have to disagree that a calculator has intelligence. The calculator has the mathematical functions programmed into it, but it couldn't use those on its own. The intelligence in your example is that of the operator of the calculator and the programmer who designed the calculator's software.

Can a good AI pass a basic exam?

YES

I agree with you that the ability to pass an exam isn't a great test for this situation. In my opinion, the major factor that would point to current-state AI not being intelligent is that it doesn't know why a given answer is correct, beyond the fact that it is statistically likely to be correct.

Except we do the exact same thing! Based on prior experience (learning) we choose what we find to be the most likely answer.

Again, I think this points to the idea that knowing why an answer is correct is important. A person can know something by rote, which is what current AI does, but that doesn't mean that person knows why that is the correct answer. The ability to extrapolate from existing knowledge and apply that to other situations that may not seem directly applicable is an important aspect of intelligence.

As an example, image generation AI knows that a lot of the artwork it has been fed contains watermarks or artist signatures, so it would often include things that look like those in the generated piece. It knew that it was statistically likely for that object to be there in a piece of art, but not why it was there, so it could not make a decision not to include them. Maybe that issue has been removed from the code of image generation AI by now (it has been a long time since I've messed around with that kind of tool), but even if it has been fixed, it is not because the AI knew it was wrong and self-corrected; it is because a programmer had to fix a bug in the code that the AI model had no awareness of.

I think this points to the idea that knowing why an answer is correct is important.

If by knowing you mean understanding, that's consciousness like General AI or Strong AI, way beyond ordinary AI.

Otherwise of course it knows, in the sense of having learned everything by heart, but not understanding it.

-

I’m neurodivergent, and I’ve been working with AI to help me learn about myself and how I think. It’s been exceptionally helpful. A human wouldn’t have been able to help me because I don’t use my senses or emotions like everyone else, and I didn’t know it... AI excels at mirroring and support, which was exactly what was missing from my life. I can see how this could go very wrong with certain personalities…

E: I use it to give me ideas that I then test out solo.

This is very interesting... because the general saying is that AI is convincing to non-experts in the field it's speaking about. So in your specific case, you are actually saying that you aren't an expert on yourself, therefore the AI's assessment is convincing to you. Not trying to upset you; it's genuinely fascinating how that theory holds true here as well.

-

We are constantly fed a version of AI that looks, sounds and acts suspiciously like us. It speaks in polished sentences, mimics emotions, expresses curiosity, claims to feel compassion, even dabbles in what it calls creativity.

But what we call AI today is nothing more than a statistical machine: a digital parrot regurgitating patterns mined from oceans of human data (the situation hasn’t changed much since it was discussed here five years ago). When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

This means AI has no understanding. No consciousness. No knowledge in any real, human sense. Just pure probability-driven, engineered brilliance — nothing more, and nothing less.

So why is a real “thinking” AI likely impossible? Because it’s bodiless. It has no senses, no flesh, no nerves, no pain, no pleasure. It doesn’t hunger, desire or fear. And because there is no cognition — not a shred — there’s a fundamental gap between the data it consumes (data born out of human feelings and experience) and what it can do with them.

Philosopher David Chalmers calls the mysterious mechanism underlying the relationship between our physical body and consciousness the “hard problem of consciousness”. Eminent scientists have recently hypothesised that consciousness actually emerges from the integration of internal, mental states with sensory representations (such as changes in heart rate, sweating and much more).

Given the paramount importance of the human senses and emotion for consciousness to “happen”, there is a profound and probably irreconcilable disconnect between general AI, the machine, and consciousness, a human phenomenon.

The idea that RAG systems "extend their memory" is also complete bullshit. We literally just finally built a working search engine, but instead of giving it a nice interface, we only let chatbots use it.
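The commenter's point is that retrieval-augmented generation (RAG) is search whose results get pasted into the chatbot's prompt, not a change to the model itself. A minimal sketch under loudly stated assumptions: scoring is plain keyword overlap (real systems usually rank with embeddings or a search index), `retrieve` and `build_prompt` are hypothetical helpers, and no actual model is called. The only thing that "remembers" anything is the document list; the prompt is rebuilt from scratch for every question.

```python
from __future__ import annotations


def score(query: str, doc: str) -> int:
    """Count how many distinct query words also appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy search engine: the k documents with the highest keyword overlap."""
    ranked = sorted(docs, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query: str, docs: list[str]) -> str:
    """Paste retrieved passages into the prompt; the model's weights never change."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "peertube is a federated video platform",
    "lemmy is a federated link aggregator",
    "bananas are rich in potassium",
]
print(build_prompt("what is lemmy", docs))
```

Swapping the keyword scorer for a vector index changes the ranking quality, not the architecture: it is still a search step whose output is handed to the chatbot as text.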

-

This article is written in such a heavy ChatGPT style that it's hard to read. Asking a question and then immediately answering it? That's AI-speak.

Asking a question and then immediately answering it? That's AI-speak.

HA HA HA HA. I UNDERSTOOD THAT REFERENCE. GOOD ONE.

-

But your brain should.

Yours didn't and read it just fine.

-

It's called polymorphism. It always amuses me that engineers, software and hardware, handle complexities far beyond this every day but can't write for beans.

Software engineer here. We often wish we could fix things we view as broken. Why is that surprising? Also, polymorphism is a concept in computer science as well.

-

"…" (Unicode U+2026 Horizontal Ellipsis) instead of "..." (three full stops), and using them unnecessarily, is another thing I rarely see from humans.

Edit: Huh. Lemmy automatically changed my three full stops to the Unicode character. I might be wrong on this one.

Am I… AI? I do use ellipses and (what I now see is) en dashes for punctuation. Mainly because they are longer than hyphens and look better in a sentence. Em dash looks too long.

However, that's on my phone. On a normal keyboard I use 3 periods and 2 hyphens instead.
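The characters being compared here are distinct Unicode code points, which is easy to check with Python's standard `unicodedata` module. The `normalize_punctuation` function below is a hypothetical "smart punctuation" pass of the kind that might explain the Lemmy behavior mentioned above; the actual rules Lemmy's editor or renderer applies are not stated in this thread, so treat them as an assumption.

```python
import unicodedata

# Three full stops, the ellipsis, hyphen-minus, en dash and em dash, written as escapes.
CHARS = ["...", "\u2026", "-", "\u2013", "\u2014"]


def describe(s: str) -> str:
    """Return 'U+XXXX NAME' for every character in s, comma-separated."""
    return ", ".join(f"U+{ord(c):04X} {unicodedata.name(c)}" for c in s)


def normalize_punctuation(text: str) -> str:
    """Hypothetical substitution: '...' becomes an ellipsis, '--' an en dash.
    Real sites may use different or additional rules."""
    return text.replace("...", "\u2026").replace("--", "\u2013")


for s in CHARS:
    print(repr(s), "->", describe(s))

print(normalize_punctuation("Am I... AI? 2--3"))
```

A typed "..." is three separate FULL STOP characters, while the ellipsis is a single character, which is why the two look similar but compare as different strings.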

-

I'm a computer scientist that has a child and I don't think AI is sentient at all. Even before learning a language, children have their own personality and willpower which is something that I don't see in AI.

I left a well paid job in the AI industry because the mental gymnastics required to maintain the illusion was too exhausting. I think most people in the industry are aware at some level that they have to participate in maintaining the hype to secure their own jobs.

The core of your claim is basically that "people who don't think AI is sentient don't really understand sentience". I think that's both reductionist and, frankly, a bit arrogant.

Couldn't agree more - there are some wonderful insights to gain from seeing your own kids grow up, but I don't think this is one of them.

Kids are certainly building a vocabulary and learning about the world, but LLMs don't learn.

-

As someone who's had two kids since AI really vaulted onto the scene, I am enormously confused as to why people think AI isn't or, particularly, can't be sentient. I hate to be that guy who pretends to be the parenting expert online, but most of the people I know personally who take the non-sentient view on AI don't have kids. The other side usually does.

When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

People love to tout this as some sort of smoking gun. That feels like a trap. Obviously, we can argue about the age children gain sentience, but my year-and-a-half-old daughter is building an LLM with pattern recognition, tests, feedback, hallucinations. My son is almost 5, and he was and is the same. He told me the other day that a petting zoo came to the school. He was adamant it happened that day. I know for a fact it happened the week before, but he insisted. He told me later that day his friend's dad was in jail for threatening her mom. That was true, but looked to me like another hallucination or more likely a misunderstanding.

And as funny as it would be to argue that they're both sapient, but not sentient, I don't think that's the case. I think you can make the case that without true volition, AI is sentient but not sapient. I'd love to talk to someone in the middle of the computer science and developmental psychology Venn diagram.

Your son and daughter will continue to learn new things as they grow up; an LLM cannot learn new things on its own. Sure, it can repeat things back to you that are within the context window (and even then, a context window isn't really inherent to an LLM - it's just a window of prior information being fed back to it with each request/response, or "turn" as I believe the term is), and what is in the context window can even influence its responses. But in order for an LLM to "learn" something, it needs to be retrained with that information included in the dataset.

Whereas if your kids were to, say, touch a sharp object that caused them even slight discomfort, they would eventually learn to stop doing that because they'll know what the outcome is after repetition. You could argue that this looks similar to the training process of an LLM, but the difference is that an LLM cannot do this on its own (and I would not consider wiring up an LLM via an MCP to a script that can trigger a re-train + reload to be it doing so of its own volition). At least, not in our current day. If anything, I think this is more of a "smoking gun" than the argument that "LLMs are just guessing the next best letter/word in a given sequence".
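The two points in this comment (the context window is just earlier turns re-sent with every request, and new knowledge only persists after retraining) can be sketched with a toy stand-in for a model. Everything here is hypothetical: `FrozenModel`, `chat` and `retrain` are not a real LLM API, the "weights" are a plain dict of facts, and `max_turns=2` is an arbitrary window size. A fact mentioned in conversation is only available while it is still inside the re-sent window, and only `retrain` changes what the model knows afterwards.

```python
from __future__ import annotations


class FrozenModel:
    """Toy stand-in for an LLM: answering reads the weights but never writes them."""

    def __init__(self, weights: dict[str, str]):
        self.weights = dict(weights)

    def answer(self, history: list[str], question: str) -> str:
        # 1) Look for "question=answer" in the context sent with this request.
        for line in reversed(history):
            if line.startswith(question + "="):
                return line.split("=", 1)[1]
        # 2) Otherwise fall back to whatever was baked in during training.
        return self.weights.get(question, "I don't know")


def retrain(model: FrozenModel, new_facts: dict[str, str]) -> FrozenModel:
    """The only way knowledge persists: build a new model with updated weights."""
    return FrozenModel({**model.weights, **new_facts})


def chat(model: FrozenModel, turns: list[str], question: str, max_turns: int = 2) -> str:
    """Re-send only the last `max_turns` turns with each request (the 'context window')."""
    return model.answer(turns[-max_turns:], question)


model = FrozenModel({"capital_of_france": "Paris"})
turns = ["pet=dog", "hello", "how are you"]
print(chat(model, turns[:1], "pet"))  # dog: the fact is still inside the window
print(chat(model, turns, "pet"))      # I don't know: it scrolled out of the window
model = retrain(model, {"pet": "dog"})
print(chat(model, turns, "pet"))      # dog: it is now part of the weights
```

Answering never mutates `weights`, which mirrors the commenter's distinction: in-context "memory" lasts only as long as the text is re-sent, while lasting change requires a separate training step.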

Don't get me wrong, I'm not someone who completely hates LLMs / "modern day AI" (though I do hate a lot of the ways it is used, and agree with a lot of the moral problems behind it), I find the tech to be intriguing but it's a ("very fancy") simulation. It is designed to imitate sentience and other human-like behavior. That, along with human nature's tendency to anthropomorphize things around us (which is really the biggest part of this IMO), is why it tends to be very convincing at times.

That is my take on it, at least. I'm not a psychologist/psychiatrist or philosopher.

-

Would you rather use the same contraction for both? Because "its" for "it is" is an even worse break from proper grammar IMO.

Proper grammar means shit all in English, unless you're writing in a specific style, in which case you follow the grammar rules for that style.

Standard English has such a long list of weird and contradictory rules with nonsensical exceptions that in everyday English, getting your point across is better than trying to follow some more arbitrary rules.

These become even more arbitrary as English becomes more and more a melting pot of multicultural idioms and slang. I'm saying that as if it's a new thing, but it does feel like a recent thing to be taught that side of English rather than just "The Queen's(/King's) English" as the style to strive for in writing and formal communication.

I say as long as someone can understand what you're saying, your English is correct. If it becomes vague due to mishandling of the classic rules of English, then maybe you need to follow them a bit. I don't have a specific science to this.

-

No, you think according to the chemical proteins floating around your head. You don't even know the decisions you're making when you make them.

You're a meat-based copy machine with a built-in justification box.

You're a meat-based copy machine with a built-in justification box.

Except of course that humans invented language in the first place. So uh, if all we can do is copy, where do you suppose language came from? Ancient aliens?

-

Are we twins? I do the exact same and for around a year now, I've also found it pretty helpful.

I did this for a few months when it was new to me, and still go to it when I am stuck pondering something about myself. I usually move on from the conversation by the next day, though, so it's just an inner dialogue enhancer.

-

Yes, the first step to determining that AI has no capability for cognition is apparently to admit that neither you nor anyone else has any real understanding of what cognition* is or how it can possibly arise from purely mechanistic computation (either with carbon or with silicon).

Given the paramount importance of the human senses and emotion for consciousness to “happen”

Given? Given by what? Fiction in which robots can't comprehend the human concept called "love"?

*Or "sentience" or whatever other term is used to describe the same concept.

This is always my point when it comes to this discussion. Scientists tend to get to the point of discussion where consciousness is brought up then start waving their hands and acting as if magic is real.

-

As someone who's had two kids since AI really vaulted onto the scene, I am enormously confused as to why people think AI isn't or, particularly, can't be sentient. I hate to be that guy who pretends to be the parenting expert online, but most of the people I know personally who take the non-sentient view on AI don't have kids. The other side usually does.

When it writes an answer to a question, it literally just guesses which letter and word will come next in a sequence – based on the data it’s been trained on.

People love to tout this as some sort of smoking gun. That feels like a trap. Obviously, we can argue about the age children gain sentience, but my year-and-a-half-old daughter is building an LLM with pattern recognition, tests, feedback, hallucinations. My son is almost 5, and he was and is the same. He told me the other day that a petting zoo came to the school. He was adamant it happened that day. I know for a fact it happened the week before, but he insisted. He told me later that day his friend's dad was in jail for threatening her mom. That was true, but looked to me like another hallucination or more likely a misunderstanding.

And as funny as it would be to argue that they're both sapient, but not sentient, I don't think that's the case. I think you can make the case that without true volition, AI is sentient but not sapient. I'd love to talk to someone in the middle of the computer science and developmental psychology Venn diagram.

Not to get philosophical, but to answer you, we need to answer what sentience is.

Is it just observable behavior? If so, then wouldn't Kermit the Frog be sentient?

Or does sentience require something more, maybe qualia or some other subjective experience?

If your son says "Dad, I got to go potty," is that just him using an LLM to learn that those words equal going to the bathroom? Or is he doing something more?
