Google AI Overview is just affiliate marketing spam now

-

The part about Reddit communities being built now that contain only AI questions, AI answers, and links to products is what I figured Spez wanted when he IPO’d. And with AI writing convincing text, it’s so easy!

The Reddit team is developing a bot that can post “this”.

They’re building a datacenter full of Nvidia hardware for it.

-

Or if you are set on using AI Overviews to research products, then be intentional about asking for negatives and always fact-check the output as it is likely to include hallucinations.

If it is necessary to fact check something every single time you use it, what benefit does it give?

It might be able to give you tables or otherwise collated sets of information about multiple products etc.

I don't know if Google does, but LLMs can. Also do unit conversions. You probably still want to check the critical ones. It's a bit like using an encyclopedia or a catalog except more convenient and even less reliable.

-

garbage in, garbage out.

Spam in, spam out, profit in the middle.

-

It might be able to give you tables or otherwise collated sets of information about multiple products etc.

I don't know if Google does, but LLMs can. Also do unit conversions. You probably still want to check the critical ones. It's a bit like using an encyclopedia or a catalog except more convenient and even less reliable.

Google had a feature for converting units way before the AI boom and there are multiple websites that do conversions and calculations with real logic instead of LLM approximation.

It is more like asking a random person who will answer whether they know the right answer or not. An encyclopedia or catalog at least has some kind of time-frame context from when it was published.

Putting the data into tables and other formats isn't helpful if the data is wrong!

-

seems useful until you notice it's just copied from Wikipedia

-

Or if you are set on using AI Overviews to research products, then be intentional about asking for negatives and always fact-check the output as it is likely to include hallucinations.

If it is necessary to fact check something every single time you use it, what benefit does it give?

It hasn't stopped anyone from using ChatGPT, which has become their biggest competitor since the inception of web search.

So yes, it's dumb, but they kind of have to do it at this point. And they need everyone to know it's available from the site they're already using, so they push it on everyone.

-

This is crazy. It means Google and Reddit are shifting content from other websites to Reddit, under corporate control. It seems like Google has firmly entered the unsustainable profit-maximization step, where they increase the share of profit they take from their content sources by redirecting less and less traffic to them while still showing that content to Google users.

-

This isn't much of a change. Before AI it was SEO slop. Search for product reviews and you get a bunch of pages "reviewing" products by copying the Amazon description and images.

There are interesting numbers in the article that you may not have looked at.

-

I’m low key pretty certain one of their long-term plans for the “AI” overview integration is to slowly sub in straight up ad content

-

Don’t bother asking Google if a product is worth it; it will likely recommend buying whatever you show interest in—even if the product doesn’t exist.

This seems like a general problem with these LLMs. Sometimes when I'm programming I ask the AI what it thinks about how I propose to approach some design issue or problem. It pretty much always encourages me to do what I proposed to do, and tells me it's a good approach. So I'm using it less and less because it seems the LLMs are encouraged to agree with the user and sound positive all the time. I'm fairly sure my ideas aren't always good. In the end I'll be discovering the pitfalls for myself with or without time wasted asking the LLM.

The same thing seems to happen when people try to use an LLM as a therapist. The LLM is overly encouraging and agreeable, and it sends people down deep rabbit holes of delusion.

I have only tried a few of these: ChatGPT, Le Chat, and then maybe a couple of days with Copilot and a few hours with Gemini.

Unfortunately, Le Chat is a bit on the weaker side, though I would prefer it to succeed over the others.

Copilot sucked at everything, including Microsoft-specific stuff like Excel formulas and actually editing a sheet. It asked me to upload the sheet, then made insane changes that made no sense and presented the result as accurate.

Gemini: I don't like Google and just avoided it straight away.

ChatGPT is my go-to, free tier only, as I generally only use it as a sounding board or for broad, simple research such as listing family events in my area. You know, putting together lists.

What you say is my gripe with it too. I have told GPT to be concise, direct and critical, and it improved, but it needs reminding every few days not to run off into "great idea, here's why, would you like more on why it's great and how to do it?"

-

oh I know this site! they also had another rant earlier about Google's fucked up site ranking, from before AI was forced into it

-

It hasn't stopped anyone from using ChatGPT, which has become their biggest competitor since the inception of web search.

So yes, it's dumb, but they kind of have to do it at this point. And they need everyone to know it's available from the site they're already using, so they push it on everyone.

No, they don't have to use defective technology just because everyone else is.

-

No, they don't have to use defective technology just because everyone else is.

Yes. They do.

-

Google had a feature for converting units way before the AI boom and there are multiple websites that do conversions and calculations with real logic instead of LLM approximation.

It is more like asking a random person who will answer whether they know the right answer or not. An encyclopedia or catalog at least has some kind of time-frame context from when it was published.

Putting the data into tables and other formats isn't helpful if the data is wrong!

a feature for converting units

So does DDG

-

for whatever reasons

Until recently, one man, who ran from the sinking ship of Yahoo search into Google's marketing/ads group. He bullied the former head of search out when they refused to make search worse in order to boost the ad click through rates. He then became head of search himself until fairly recently.

In-depth writeup here, and there's a link to the same story as a podcast at the top.

-

Or if you are set on using AI Overviews to research products, then be intentional about asking for negatives and always fact-check the output as it is likely to include hallucinations.

If it is necessary to fact check something every single time you use it, what benefit does it give?

That is my entire problem with LLMs and LLM-based tools. I get especially salty when someone sends me output from one and I confirm it's lying in 2 minutes.

-

It might be able to give you tables or otherwise collated sets of information about multiple products etc.

I don't know if Google does, but LLMs can. Also do unit conversions. You probably still want to check the critical ones. It's a bit like using an encyclopedia or a catalog except more convenient and even less reliable.

You can do unit conversions with PowerToys on Windows, Spotlight on Mac, and whatever they call the nifty search bar on the various Linux desktop environments, without even hitting the internet and with exactly the same convenience as an LLM. Running LLM inference for discrete tasks like that is the most inefficient and stupid way to do them.
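For anyone wondering what "real logic" looks like here, a minimal sketch in Python follows. The `FACTORS` table and the `convert` function are made up for illustration and are not how any of the tools named above actually work; the factors themselves are the standard exact definitions (for example, 1 inch = 0.0254 m).

```python
# Deterministic unit conversion via a lookup table: no inference, no guessing.
# FACTORS and convert() are hypothetical names used only for this sketch.

# Each unit maps to its size in the base unit of its dimension
# (metres for length, kilograms for mass).
FACTORS = {
    "length": {"m": 1.0, "km": 1000.0, "cm": 0.01,
               "in": 0.0254, "ft": 0.3048, "mi": 1609.344},
    "mass": {"kg": 1.0, "g": 0.001,
             "lb": 0.45359237, "oz": 0.028349523125},
}


def convert(value, src, dst):
    """Convert value from unit src to unit dst within the same dimension."""
    for units in FACTORS.values():
        if src in units and dst in units:
            # Go through the base unit: src -> base -> dst.
            return value * units[src] / units[dst]
    # Unknown units or mismatched dimensions fail loudly instead of
    # producing a plausible-looking number.
    raise ValueError(f"cannot convert {src!r} to {dst!r}")


if __name__ == "__main__":
    print(convert(1, "mi", "km"))
    print(convert(12, "in", "ft"))
```

The point of the design is the last branch: asking for kilograms in metres raises an error rather than returning a confident wrong answer.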

-

That is my entire problem with LLMs and LLM-based tools. I get especially salty when someone sends me output from one and I confirm it's lying in 2 minutes.

"Thank you for wasting my time."

-

-

-

-

-

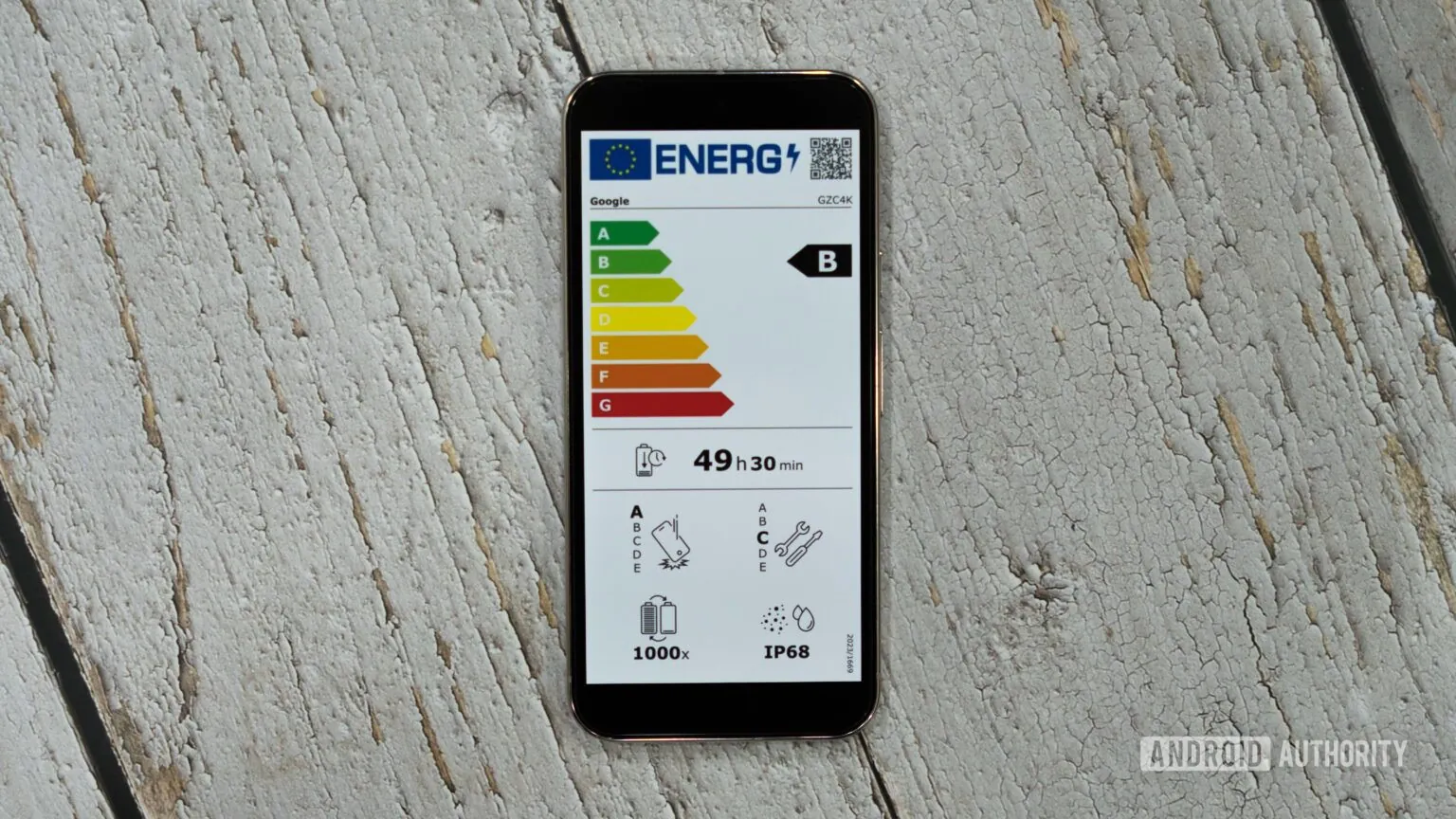

Samsung phones can survive twice as many charges as Pixel and iPhone, according to EU data

Technology 1

1

-

-

Child Welfare Experts Horrified by Mattel's Plans to Add ChatGPT to Toys After Mental Health Concerns for Adult Users

Technology 1

1

-

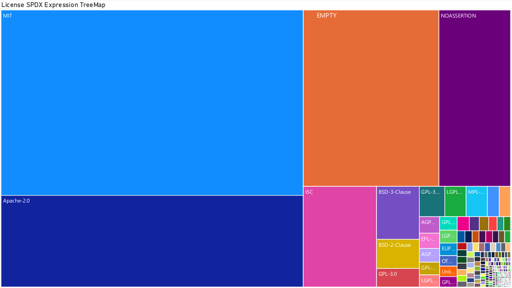

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1