A Prominent OpenAI Investor Appears to Be Suffering a ChatGPT-Related Mental Health Crisis, His Peers Say

-

Chatbot psychosis literally played itself out in my wonderful sister. She started confiding really dark shit to an OpenAI model and it reinforced her psychosis. Her husband and I had to bring her to a psych ward. Please be safe with AI. Never ask it to think for you or to tell you what to do.

Update: The psychiatrist who looked at her said she had too much weed -_- . I'm really disappointed in the doctor but she had finally slept and sounded more coherent then

It's so annoying that I don't know how to make them comprehend that it's stupid. I tried to make it interesting for myself, but I always end up breaking it or getting annoyed by the bad memory or just shitty dialogue, and I've tried hella AI. I assume it only works on narcissists or people who talk mostly to be heard and to hear agreement rather than to converse. The worst type of people get validation from AI, not seeing it for what it is.

-

@return2ozma@lemmy.world !technology@lemmy.world

Should I worry about the fact that I can sort of make sense of what this "Geoff Lewis" person is trying to say?

Because, to me, it's very clear: they're referring to something that was built (the LLMs) and that is segregating people, especially those who don't conform to a dystopian world.

Isn't that what is happening in the world right now? "Dead Internet Theory" has never been so real: online content has been sowing the seed of doubt about whether it's AI-generated or not, users constantly need to prove they're "not a bot" and, even after passing a thousand CAPTCHAs, people can still be mistaken for bots, so they're increasingly required to show their faces and IDs.

The dystopia was already emerging way before GPT, way before OpenAI: it has been a thing since the dawn of time! OpenAI only managed to make it worse: OpenAI "open"ed a gigantic dam, releasing a whole new ocean on Earth, an ocean in which we've been getting used to drowning ever since.

Now, something that may sound like a "conspiracy theory": what's the real purpose behind LLMs? No, OpenAI, Meta, Google, even DeepSeek and Alibaba (non-Western) wouldn't simply launch their products, each of which cost them obscene amounts of money and resources, for free (as in "free beer") to the public out of a "nice heart". Similarly, venture capitalists and governments wouldn't simply give away obscene amounts of money (much of it public money from taxpayers) with no profit in the foreseeable future (OpenAI, for example, has admitted many times that even charging US$200 for their Enterprise Plan isn't enough to cover their costs, yet they continue to offer LLMs for cheap or "free").

So there's definitely something that isn't being told: the cost behind plugging the whole world into LLMs and other Generative Models. Yes, you read that right: the whole world, not just the online realm, because nowadays billions of people are potentially dealing with those Markov chain algorithms offline, directly or indirectly: resumes are being filtered by LLMs, workers' performance is being scrutinized by LLMs, purchases are being scrutinized by LLMs, surveillance camera footage is being scrutinized by VLMs, entire genomes are being fed to gLMs (sharpening the blades of the double-edged sword of bioengineering and biohacking)...

Generative Models seem to be omnipresent by now, with omnipresent yet invisible costs. Not exactly fiat money, but there are costs that we are paying, and these costs aren't being disclosed to us. While we're able to point out some of them (lack of privacy, personal data being sold and/or stolen), these are just the tip of an iceberg: one that we can already see, but whose consequences we can't fully comprehend.

Curious how pondering this is deemed "delusional", yet it's pretty "normal" to accept an increasingly dystopian world and refuse to denounce the elephant in the room.

I think in order to be a good psychiatrist you need to understand what your patient is "babbling" about. But you also need to be able to challenge their understanding and conclusions about the world so they engage with the problem in a healthy manner. Like, if the guy is worried about how AI is making the internet and the world more dead, then maybe don't go to the AI to be understood.

-

It’s insane to me that anyone would think these things are reliable for something as important as your own psychology/health.

Even using them for coding, which is the one thing they’re halfway decent at, will lead to disastrous code if you don’t already know what you’re doing.

It can sometimes write boilerplate fairly well. The issue with using it to solve problems is it doesn't know what it's doing. Then you have to read and parse what it outputs and fix it. It's usually faster to just write it yourself.

-

Update: The psychiatrist who looked at her said she had too much weed -_- . I'm really disappointed in the doctor but she had finally slept and sounded more coherent then

There might be something to that. Psychosis enhanced by weed is not unheard of. As I’ve read, weed has been shown in studies to bring out schizophrenic symptoms in people predisposed to it. Not that it causes it, just brings it out in some people.

I say this as someone who loves weed and consumes it frequently. Just like any psychoactive chemical, it’s going to have different effects on different people. We all know alcohol causes psychosis all the fucking time but we just roll with it.

-

That's what my therapist said.

-

An OpenAI Investor Appears to Be Having a ChatGPT-Induced Mental Health Crisis

Bedrock co-founder Geoff Lewis has posted increasingly troubling content on social media, drawing concern from friends in the industry.

Futurism (futurism.com)

Dr. Joseph Pierre, a psychiatrist at the University of California, previously told Futurism that this is a recipe for delusion.

"What I think is so fascinating about this is how willing people are to put their trust in these chatbots in a way that they probably, or arguably, wouldn't with a human being," Pierre said. "There's something about these things — it has this sort of mythology that they're reliable and better than talking to people. And I think that's where part of the danger is: how much faith we put into these machines."

-

Dr. Sbaitso would like to have a word.

-

As someone who used to do a lot of mushroom babysitting, the recursion talk smells a whole lot like someone's first big trip.

-

My friend will not touch weed because schizophrenia runs in her family. It could manifest at any time, and weed can certainly cause it to happen.

-

It's so annoying that I don't know how to make them comprehend that it's stupid. I tried to make it interesting for myself, but I always end up breaking it or getting annoyed by the bad memory or just shitty dialogue, and I've tried hella AI. I assume it only works on narcissists or people who talk mostly to be heard and to hear agreement rather than to converse. The worst type of people get validation from AI, not seeing it for what it is.

It's useful when people don't do stupid shit with it.

-

Could be. I've also seen similar delusions in people with syphilis that went un- or under-treated.

Where tf are people not treated for syphilis?

-

In this case, the United States. When healthcare is expensive and hard to access, not everybody gets it.

Syphilis symptoms can be so mild they go unnoticed. When you combine that with risky sexual behavior (hook-up culture, anti-condom bias) and lack of testing due to inadequate medical care, you can wind up with untreated syphilis. If you become homeless, care gets even harder to access.

You get diagnosed at a late stage when treatment is more difficult. They put you on a treatment plan, but followup depends on reliable transportation and the mental effects of the disease have made you paranoid. Now imagine you're also a member of a minority on which medical experiments have historically been done without consent or notice.

You don't really trust that those pills are for what you've been told at all. So difficulty accessing healthcare, changing clinics as you move around with medical history not always keeping up, distrust of the providers and treatment, and general instability and lack of regular routine all add up to only taking your medication inconsistently.

Result: under-treated syphilis

-

It's useful when people don't do stupid shit with it.

When competent people don't blindly trust it, it can be useful. The general public does stupid shit with it.

-

Amazon engineers and marketers were asked on Monday to volunteer their time to the company’s warehouses to assist with grocery delivery

Technology 1

1

-

-

-

-

-

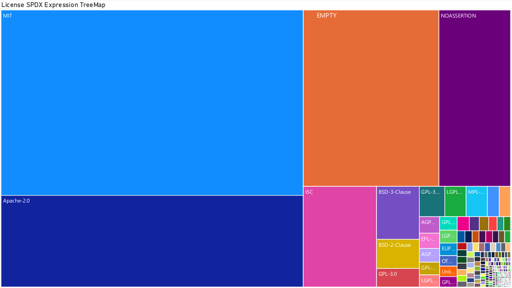

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1

-

-

SSD prices predicted to skyrocket throughout 2024 — TrendForce market report projects a 50% price hike | Tom's Hardware

Technology 1

1