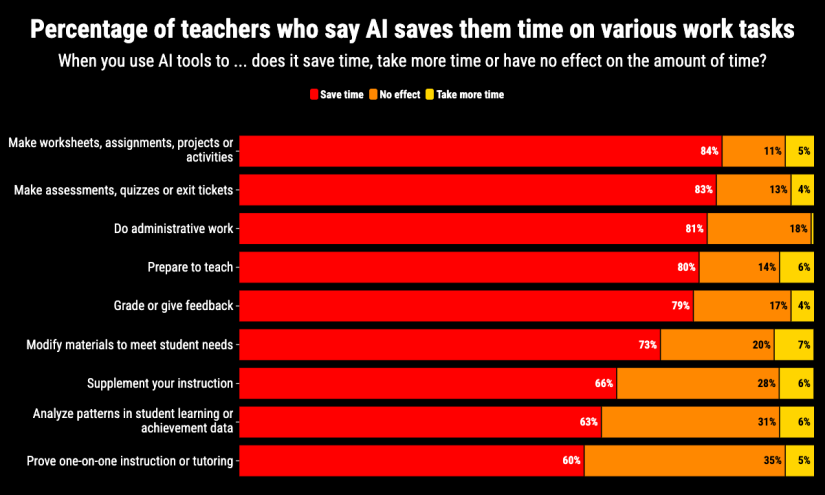

60% of Teachers Used AI This Year and Saved up to 6 Hours of Work a Week

-

Telling people to stop doing something because it burns the planet doesn't really change their minds, unfortunately. The best you can do is put the numbers in their face so that they can't avoid the truth. But that only works on people who care.

That is sadly the truth with many things. People just don't care unless it personally affects them. And even then it depends on whether it hits hard enough.

-

There is value in AI; blindly rejecting all use is just as stupid as blindly embracing all uses. Teachers are especially important in shaping how the next generation will be using it. They are at the front, discovering how students use it, what is good and what is bad. Finding ways to improve their teaching is a good use. Saving time grading is a bad use.

Which AI service to use or not, and why, is another important facet. A trustworthy service can enshittify real fast, so teaching what to look for is also necessary. Nobody is fully competent in that matter; it still needs to be discovered.

Exactly. If anyone is going to be using AI at the forefront, it should be teachers, for two reasons:

- so they can teach students its proper use because it will come up

- education is closely tracked, so we can see the impact of teachers relying on AI in student outcomes

I think this is fantastic!

-

i'm reporting the post because it is from a blatant disinfo house that spreads rhetoric about "critical race theory" and other obvious dogwhistles

it is not a coincidence that AI is being pushed so hard by conservative racists

? Media bias fact check says it's left-center w/ high factual accuracy. The poll itself is from Gallup, which is quite reputable, and The Walton Family Foundation, which is also reputable.

Are you thinking of something else?

-

I actually don't know that much about LLMs. I do know they require a ton of energy to train the models. But once those are trained, the models, especially the smaller ones, don't require that much to run, right? I once tried to run a local one to see how much it took, and my GPU maxed out for a few seconds and the LLM spit out text and it was done. Whereas when playing games, the GPU maxes out for hours.

Again, i don't know super much about them as i have only used it a few times over the years to break down big tasks into smaller tasks for my AuDHD when i am very overwhelmed, and it was kinda nice for that.

The image generation stuff is pretty bad though from what i have read. Plus it steals people's art. Fuck that shit.

Please do tell me if i understand wrong. Because i don't want to contribute to a bunch of bad shit ruining the climate.

- It's not like the companies train one model and use it for months until they need a new version. They train new models all the time to update them and test new ideas.

- They don't use small models. Typical LLMs offered by ChatGPT or Claude are the big ones.

- They process thousands of queries per second, so their GPUs are maxed out all the time, not just for a few seconds.

-

? Media bias fact check says it's left-center w/ high factual accuracy. The poll itself is from Gallup, which is quite reputable, and The Walton Family Foundation, which is also reputable.

Are you thinking of something else?

There is no room here for your credulity. No, polls are not accurate. No, the Walmart tax dodge charity foundation is not "reputable."

-

There is no room here for your credulity. No, polls are not accurate. No, the Walmart tax dodge charity foundation is not "reputable."

Do you have an alternate source that proves your point, or is your entire argument "because I said so"? Whether something avoids taxes or not has little to do with its credibility, you'll need stronger evidence than that.

And I don't know what you mean by "polls are not accurate." Yeah, they're unreliable for certain things (e.g., predicting election results) because people lie and change their minds, and elections are generally decided on a per-state basis, so just one or two "flipping" is enough to turn an election. They're more reliable for other things, like tracking sentiment across a longer period of time.

I certainly don't buy the "6 hours saved per week" statement here (that's self-reported and a small sample size), but I do buy that teachers are using AI more and more to assist w/ their work. Surveys can only tell you so much, and it's important to not read too much into them, but that doesn't mean they're worthless or misleading.

-

- It's not like the companies train one model and use it for months until they need a new version. They train new models all the time to update them and test new ideas.

- They don't use small models. Typical LLMs offered by ChatGPT or Claude are the big ones.

- They process thousands of queries per second, so their GPUs are maxed out all the time, not just for a few seconds.

Wouldn't it then help to run the smaller ones locally instead of using the big ones like ChatGPT?

I read that one called Deepmind or something in China took a lot less to train and is just as strong. Is that true?

What do people usually use LLMs for? I know they suck for most things people are using them for, like coding. But what do people use them for that justifies all the hype?

Again, please don't think i am trying to justify it. I just don't know super much about them.

-

I literally only ever use it to rewrite things I’ve already written or to get my thoughts started when I’m having writer’s block (professional writing - not a creative writer). I really don’t understand why people use it beyond that. I don’t like having to check its homework all the damn time

Basically it’s just a tool for getting me to phrase or look at something differently. I can get tunnel vision when I’m writing or trying to come up with ideas.

I could give you a few real-life examples where it’s been helpful to me, but honestly, there are probably hundreds more depending on the person—as long as it’s used properly and not treated as flawless or final.

I’m a kindergarten teacher.

1. I describe what we’ve done in class, and it turns that into a short caption for the school’s daily social media post. Saves a bit of time.

2. For weekly assessments, I speak freely about each child’s week, and it generates a well-written comment. That’s a moderate time-saver, and I learn better phrasing from its output as I'm a non-native English speaker.

3. It helps me brainstorm new daily activity ideas based on specific goals or parameters. I choose the ones that fit and tweak them as needed.

4. When I’ve tried multiple strategies with a difficult child, I use it to get fresh suggestions for guidance or behavior management. I still apply my own experience to decide what works best.

5. It helped me plan a trip based on location, time, and several other factors, and it provided a lot of useful details I hadn’t considered.

6. It’s replaced Google for many tasks: it’s faster, often more accurate (if prompted clearly), and definitely more efficient for basic info.

7. I also use it for translation, and in many cases, it gives better or more natural results than Google Translate.

8. It helped me rewrite this very comment (till point 7) as I'm busy with something else, so I saved time spellchecking and rephrasing.

-

Wouldn't it then help to run the smaller ones locally instead of using the big ones like ChatGPT?

I read that one called Deepmind or something in China took a lot less to train and is just as strong. Is that true?

What do people usually use LLMs for? I know they suck for most things people are using them for, like coding. But what do people use them for that justifies all the hype?

Again, please don't think i am trying to justify it. I just don't know super much about them.

Small models can only handle a limited set of tasks. To cover a lot of different tasks you would need a lot of small models. What DeepSeek did was build a lot of small models, with each acting as an expert on one topic (more or less). It's more energy efficient to train but not necessarily to run, as you have to chain a lot of small models to get good results.

What do people use LLMs for? Asking questions you would normally ask Google. Google sucks now, so it's easier to ask ChatGPT. You can also use it for simple tasks like checking text for grammar errors, writing emails and so on.

-

Yes, we are. I have a maths teacher friend, who complains endlessly about the shit that Sam Altman lies about, and yet they pay for ChatGPT and refuse to simply not use it. I swear it's worse than meth in terms of how it grabs some people.

It's HIGHLY addictive, especially to idiots. They feel smart using it. Which is so ironic I can't stand it.

-

i'm reporting the post because it is from a blatant disinfo house that spreads rhetoric about "critical race theory" and other obvious dogwhistles

it is not a coincidence that AI is being pushed so hard by conservative racists

Yep, and who owns the models? Billionaires. What are billionaires always? Conservatives. It isn't hard to connect the dots on why this path is not a good one.

-

Teachers use AI to generate assignments, kids use AI to generate answers, teachers use AI to grade answers.

Yeah, we're cooked.

Well, it can't clean toilets or build roads, so you can still do that for your elite billionaire overlords, and they may give you a prison cell to exist in!

-

Survey: 60% of Teachers Used AI This Year and Saved up to 6 Hours of Work a Week

New study of 2,232 U.S. public school teachers found they use artificial intelligence tools to create worksheets, modify student materials, make tests

(www.the74million.org)

The poll, published by the research firm and the Walton Family Foundation... Walton Family Foundation provides financial support to The 74.

What kind of fool would believe anything from these grifters?

Phony AF on its face.
