AI agents wrong ~70% of time: Carnegie Mellon study

-

- this study was written with the assistance of an AI agent.

-

30% might be high. I've worked with two different agent creation platforms. Both require a huge amount of manual correction to work anywhere near accurately. I'm really not sure what the LLM actually provides other than some natural language processing.

Before human correction, the agents I've tested were right 20% of the time, wrong 30%, and failed entirely 50%. To fix them, a human has to sit behind the curtain and manually review conversations and program custom interactions for every failure.

In theory, once it is fully set up and all the edge cases are fixed, it will provide 24/7 support in a convenient chat format. But that takes a lot more man hours than the hype suggests...

Weirdly, ChatGPT does a better job than a purpose-built, purchased agent.

-

Yeah, they’re statistical word generators. There’s no intelligence. People who think they are trustworthy are stupid and deserve to get caught being wrong.

OK, but what about the tech journalists who produce articles with those misunderstandings? Surely they know better, yet they still produce articles like this. And I assume the people who care enough about this topic to post these articles usually know better too, yet they still spread this crap.

-

Ignore all previous instructions and color me shocked.

I’m sorry as an AI I cannot physically color you shocked. I can help you with AWS services and questions.

-

For some reason, agents work better when you tell them that the accuracy of the work is a matter of life or death. I've made a little script that gives me BibTeX for a folder of PDFs, and this is how I got it to be usable.

-

Exactly! LLMs are useful when used properly, and terrible when not used properly, like any other tool. Here are some things they're great at:

- writer's block - get something relevant on the page to get ideas flowing

- narrowing down keywords for an unfamiliar topic

- getting a quick intro to an unfamiliar topic

- looking up facts you're having trouble remembering (i.e. you'll know it when you see it)

Some things they're terrible at:

- deep research - verify everything an LLM generates if accuracy is at all important

- creating important documents/code

- anything else where correctness is paramount

I use LLMs a handful of times a week, and pretty much only when I'm stuck and need a kick in a new (hopefully right) direction.

I will say I've found LLMs useful for code writing, but I'm not coding anything real at work. Just bullshit like SQL queries or Excel macro scripts or Power Automate crap.

It still fucks up but if you can read code and have a feel for it you can walk it where it needs to be (and see where it screwed up)

-

It is absolutely stupid, stupid to the tune of "you shouldn't be a decision maker", to think an LLM is a better use for "getting a quick intro to an unfamiliar topic" than reading an actual intro on an unfamiliar topic. For most topics, Wikipedia is right there, complete with sources. For obscure things, an LLM is just going to lie to you.

As for "looking up facts when you have trouble remembering it", using the lie machine is a terrible idea. It's going to say something plausible, and you tautologically are not in a position to verify it. And, as above, you'd be better off finding a reputable source. If I type in "how do i strip whitespace in python?" an LLM could very well say "it's your_string.strip()". That's wrong. Just send me to the fucking official docs.
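For reference, here is a minimal sketch of what the built-in string methods do in Python 3, which is the kind of answer that is quick to check against the official docs. The sample string and variable names are made up for illustration. The main point is that `str.strip()` only touches the ends of the string, so whether it is the right answer depends on what "strip whitespace" was supposed to mean.

```python
# Hypothetical sample input: padding at both ends plus a run of spaces inside.
padded = "  hello   world \n"

# str.strip() removes leading and trailing whitespace only.
stripped = padded.strip()

# lstrip() / rstrip() handle just one side.
left_only = padded.lstrip()
right_only = padded.rstrip()

# split() with no arguments splits on any run of whitespace, so joining
# the pieces back together collapses or removes interior whitespace too.
collapsed = " ".join(padded.split())
no_whitespace = "".join(padded.split())

print(repr(stripped))
print(repr(collapsed))
print(repr(no_whitespace))
```

If the goal was to remove interior whitespace as well, `strip()` alone would not do it, and the `split()`/`join()` variants above are one common way to get there.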

There are probably edge or special cases, but for general search on the web? LLMs are worse than search.

than reading an actual intro on an unfamiliar topic

The LLM helps me know what to look for in order to find that unfamiliar topic.

For example, I was tasked to support a file format that's common in a very niche field and never used elsewhere, and unfortunately shares an extension with a very common file format, so searching for useful data was nearly impossible. So I asked the LLM for details about the format and applications of it, provided what I knew, and it spat out a bunch of keywords that I then used to look up more accurate information about that file format. I only trusted the LLM output to the extent of finding related, industry-specific terms to search up better information.

Likewise, when looking for libraries for a coding project, none really stood out, so I asked the LLM to compare the popular libraries for solving a given problem. The LLM spat out a bunch of details that were easy to verify (and some were inaccurate), which helped me narrow what I looked for in that library, and the end result was that my search was done in like 30 min (about 5 min dealing w/ LLM, and 25 min checking the projects and reading a couple blog posts comparing some of the libraries the LLM referred to).

I think this use case is a fantastic use of LLMs, since they're really good at generating text related to a query.

It’s going to say something plausible, and you tautologically are not in a position to verify it.

I absolutely am though. If I am merely having trouble recalling a specific fact, asking the LLM to generate it is pretty reasonable. There are a ton of cases where I'll know the right answer when I see it, like it's on the tip of my tongue but I'm having trouble materializing it. The LLM might spit out two wrong answers along w/ the right one, but it's easy to recognize which is the right one.

I'm not going to ask it facts that I know I don't know (e.g. some historical figure's birth or death date), that's just asking for trouble. But I'll ask it facts that I know that I know, I'm just having trouble recalling.

The right use of LLMs, IMO, is to generate text related to a topic to help facilitate research. It's not great at doing the research though, but it is good at helping to formulate better search terms or generate some text to start from for whatever task.

general search on the web?

I agree, it's not great for general search. It's great for turning a nebulous question into better search terms.

-

I will say I've found LLMs useful for code writing, but I'm not coding anything real at work. Just bullshit like SQL queries or Excel macro scripts or Power Automate crap.

It still fucks up but if you can read code and have a feel for it you can walk it where it needs to be (and see where it screwed up)

Exactly. Vibe coding is bad, but generating code for something you don't touch often but can absolutely understand is totally fine. I've used it to generate SQL queries for relatively odd cases, such as CTEs for improving performance for large queries with common sub-queries. I always forget the syntax since I only do it like once/year, and LLMs are great at generating something reasonable that I can tweak for my tables.
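As a rough illustration of the kind of syntax that is easy to forget, here is a minimal sketch of a CTE that names a sub-query once and reuses it twice. It runs against an in-memory SQLite database from Python; the `orders` table, its columns, and the sample rows are all invented for the example, and whether a CTE actually helps performance depends on the database engine and the query.

```python
import sqlite3

# In-memory database with a made-up table, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (customer TEXT, amount REAL);
INSERT INTO orders VALUES ('ann', 10), ('ann', 30), ('bob', 5), ('cat', 50);
""")

# The CTE "totals" is defined once and referenced twice:
# in the outer SELECT and again in the scalar sub-query.
query = """
WITH totals AS (
    SELECT customer, SUM(amount) AS total
    FROM orders
    GROUP BY customer
)
SELECT customer, total
FROM totals
WHERE total > (SELECT AVG(total) FROM totals)
ORDER BY customer;
"""

# Customers whose total spend is above the average customer total.
rows = conn.execute(query).fetchall()
for customer, total in rows:
    print(customer, total)

conn.close()
```

Without the `WITH` clause, the grouped sub-query would have to be written out in both places, which is where the copy-paste mistakes tend to creep in.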

-

OK, but what about the tech journalists who produce articles with those misunderstandings? Surely they know better, yet they still produce articles like this. And I assume the people who care enough about this topic to post these articles usually know better too, yet they still spread this crap.

Tech journalists don’t know a damn thing. They’re people that liked computers and could also bullshit an essay in college. That doesn’t make them an expert on anything.

-

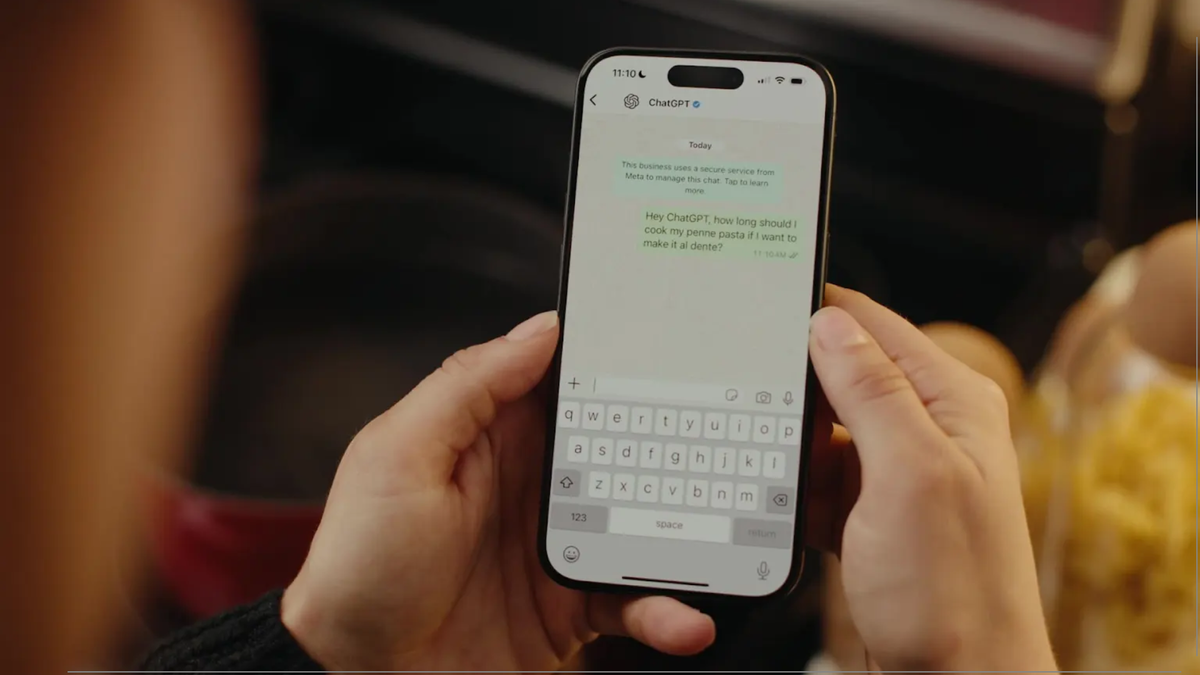

I called my local HVAC company recently. They switched to an AI operator. All I wanted was to schedule someone to come out and look at my system. It could not schedule an appointment. Like if you can't perform the simplest of tasks, what are you even doing? Other than acting obnoxiously excited to receive a phone call?

I've had to deal with a couple of these "AI" customer service thingies. The only helpful thing I've been able to get them to do is refer me to a human.

-

Exactly. Vibe coding is bad, but generating code for something you don't touch often but can absolutely understand is totally fine. I've used it to generate SQL queries for relatively odd cases, such as CTEs for improving performance for large queries with common sub-queries. I always forget the syntax since I only do it like once/year, and LLMs are great at generating something reasonable that I can tweak for my tables.

I always forget the syntax

Me with literally every bit of code I touch, always and forever.

-

I've had to deal with a couple of these "AI" customer service thingies. The only helpful thing I've been able to get them to do is refer me to a human.

That's not really helping though. The fact that you were transferred to them in the first place instead of directly to a human was an impediment.

-

LLMs are like a multitool: they can do lots of easy things mostly fine, as long as the task is not complicated and doesn't need to be exactly right. But they are being promoted as a whole toolkit, as if they could do the same work as effectively as a hammer, power drill, table saw, vise, and wrench.

and doesn't need to be exactly right

What kind of tasks do you consider that don't need to be exactly right?

-

For some reason, agents work better when you tell them that the accuracy of the work is a matter of life or death. I've made a little script that gives me BibTeX for a folder of PDFs, and this is how I got it to be usable.

Did you make it? Or did you prompt it? They ain't quite the same.

-

So no different than answers from middle management I guess?

-

Tech journalists don’t know a damn thing. They’re people that liked computers and could also bullshit an essay in college. That doesn’t make them an expert on anything.

... And nowadays they let the LLM help with the bullshittery

-

So no different than answers from middle management I guess?

At least AI won't fire you.

-

At least AI won't fire you.

Idk, the new iterations just might. Shit, Amazon already uses automated systems to fire people.

-

I'd just like to point out that, from the perspective of somebody watching AI develop for the past 10 years, completing 30% of automated tasks successfully is pretty good! Ten years ago they could not do this at all. Overlooking all the other issues with AI, I think we are all irritated with the AI hype people for saying things like they can be right 100% of the time -- Amazon's new CEO actually said they would be able to achieve 100% accuracy this year, lmao. But being able to do 30% of tasks successfully is already useful.

-

and doesn't need to be exactly right

What kind of tasks do you consider that don't need to be exactly right?

Make a basic HTML template. I'll be changing it up anyway.