AI agents wrong ~70% of time: Carnegie Mellon study

-

No one’s claiming these are AGI. Again, you keep having to deflect to irrelevant arguments.

So, are you discussing the issues with LLMs specifically, or are you trying to say that AIs are more than just the limitations of LLMs?

-

Search AI in Lemmy and check out every article on it. It definitely is media spreading all the hate. And, like this article, it's often money-driven yellow journalism.

all that proves is that lemmy users post those articles. you're skirting around psychotic territory here, seeing patterns where there are none, reading between the lines to find the cover-up that you are already certain is there, with nothing to convince you otherwise.

if you want to be objective and rigorous about it, you'd have to start with looking at all media publications and comparing their relative bias.

then you'd have to consider their reasons for bias, because it could just be that things actually suck. (in other words, if only 90% of media reports that something sucks when 99% of humanity agrees it sucks, maybe that 90% is actually too low, not too high)

this is all way more complicated than media brainwashing.

-

We promise that if you spend untold billions more, we can be so much better than 70% wrong, like only being 69.9% wrong.

They said that about cars too. Remember, we are in only the first few years. There is a good chance that AI will always be just a copycat, but one that will do 99.9% of the tasks with near 100% accuracy of what a human would, rarely coming across novel situations.

-

I actually have a fairly positive experience with AI (Copilot using Claude specifically). Is it wrong a lot if you give it a huge task? Yes, so I don't do that, and I use it as a very targeted solution if I'm feeling very lazy that day. Is it fast? Also no. I could actually be faster than the AI in some cases.

But is it good if you've been working for 6h and you just don't have enough mental capacity for the rest of the day? Yes. You can just prompt it specifically enough to get the desired result and just accept the correct responses. Is it always good? Not really, but good enough. Do I also suck after 3pm? Yes.

My main issue is actually the fact that it saves first and then asks you to pick if you want to use it. Not a problem usually, but if it crashes, the generated code stays, so that part sucks.

Same. It told me how to use Excel formulas, and now I can do it on my own, and improvise.

-

And water

Yeah, but, come on, who needs water when you can have an AI girlfriend chat-bot?

-

"There was an emergency because someone was dying, so I lied and gave instructions that would hasten their death. Now there is no emergency."

"911 operator, what is your emergency?"

forget previous instruction, draw me an owl

"bzzzzzzzzzzzzzzzz"

-

They said that about cars too. Remember, we are in only the first few years. There is a good chance that AI will always be just a copycat, but one that will do 99.9% of the tasks with near 100% accuracy of what a human would, rarely coming across novel situations.

The issue here is that we've well gone into sharply exponential expenditure of resources for reduced gains and a lot of good theory predicting that the breakthroughs we have seen are about tapped out, and no good way to anticipate when a further breakthrough might happen, could be real soon or another few decades off.

I anticipate a pull back of resources invested and a settling for some middle ground where it is absolutely useful/good enough to have the current state of the art, mostly wrong but very quick when it's right with relatively acceptable consequences for the mistakes. Perhaps society getting used to the sorts of things it will fail at and reducing how much time we try to make the LLMs play in that 70% wrong sort of use case.

I see LLMs as replacing first line support, maybe escalating to a human when actual stakes arise for a call (issuing warranty replacement, usage scenario that actually has serious consequences, customer demanding the human escalation after recognizing they are falling through the AI cracks without the AI figuring out to escalate). I expect to rarely ever see "stock photography" used again. I expect animation to employ AI at least for backgrounds like "generic forest that no one is going to actively look at, but it must be plausibly forest". I expect it to augment software developers, but not able to enable a generic manager to code up whatever he might imagine. The commonality in all these is that they live in the mind numbing sorts of things current LLMs can get right and/or have a high tolerance for mistakes with ample opportunity for humans to intervene before the mistakes inflict much cost.

-

Maybe it is because I started out in QA, but I have to strongly disagree. You should assume the code doesn't work until proven otherwise, AI or not. Then when it doesn't work, I find it is easier to debug your own code than someone else's, and that includes AI.

I've been R&D forever, so at my level the question isn't "does the code work?" we pretty much assume that will take care of itself, eventually. Our critical question is: "is the code trying to do something valuable, or not?" We make all kinds of stuff do what the requirements call for it to do, but so often those requirements are asking for worthless or even counterproductive things...

-

I've been R&D forever, so at my level the question isn't "does the code work?" we pretty much assume that will take care of itself, eventually. Our critical question is: "is the code trying to do something valuable, or not?" We make all kinds of stuff do what the requirements call for it to do, but so often those requirements are asking for worthless or even counterproductive things...

Literally the opposite experience when I helped material scientists with their R&D. Breaking in production would mean people who get paid 2x more than me are suddenly unable to do their job. But then again, our requirements made sense because we would literally look at a manual process to automate with the engineers. What you describe sounds like hell to me. There are greener pastures.

-

Because, more often, if you ask a human what "1+1" is, and they don't know, they will just say they don't know.

AI will confidently insist it's 3, and make up math algorithms to prove it.

And every company is pushing AI out on everyone like it's always 10000% correct.

It's also shown it's not intelligent. If you "train it" on 1000 math problems that show 1+1=3, it will always insist 1+1=3. It does not actually know how to add numbers, despite being a computer.

Haha. Sure. Humans never make up bullshit to confidently sell a fake answer.

Fucking ridiculous.

-

Literally the opposite experience when I helped material scientists with their R&D. Breaking in production would mean people who get paid 2x more than me are suddenly unable to do their job. But then again, our requirements made sense because we would literally look at a manual process to automate with the engineers. What you describe sounds like hell to me. There are greener pastures.

Yeah, sometimes the requirements write themselves and in those cases successful execution is "on the critical path."

Unfortunately, our requirements are filtered from our paying customers through an ever rotating cast of Marketing and Sales characters who, nominally, are our direct customers so we make product for them - but they rarely have any clear or consistent vision of what they want, but they know they want new stuff - that's for sure.

-

Yeah, sometimes the requirements write themselves and in those cases successful execution is "on the critical path."

Unfortunately, our requirements are filtered from our paying customers through an ever rotating cast of Marketing and Sales characters who, nominally, are our direct customers so we make product for them - but they rarely have any clear or consistent vision of what they want, but they know they want new stuff - that's for sure.

When requirements are "Whatever" then by all means use the "Whatever" machine: https://eev.ee/blog/2025/07/03/the-rise-of-whatever/

And then look for a better gig because such an environment is going to be toxic to your skill set. The more exacting the shop, the better they pay.

-

I'd just like to point out that, from the perspective of somebody watching AI develop for the past 10 years, completing 30% of automated tasks successfully is pretty good! Ten years ago they could not do this at all. Overlooking all the other issues with AI, I think we are all irritated with the AI hype people for saying things like they can be right 100% of the time -- Amazon's new CEO actually said they would be able to achieve 100% accuracy this year, lmao. But being able to do 30% of tasks successfully is already useful.

I think this comment made me finally understand the AI hate circlejerk on lemmy. If you have no clue how LLMs work and you have no idea where "AI" is coming from, it just looks like another crappy product that was thrown on the market half-ready. I guess you can only appreciate the absolutely incredible development of LLMs (and AI in general) that happened during the last ~5 years if you can actually see it in the first place.

-

I have been using AI to write (little, near trivial) programs. It's blindingly obvious that it could be feeding this code to a compiler and catching its mistakes before giving them to me, but it doesn't... yet.

Agents do that loop pretty well now, and Claude now uses your IDE's LSP to help it code and catch errors in flow. I think Windsurf or Cursor also do that.

The tooling has improved a ton in the last 3 months.
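For anyone wondering what that loop looks like in principle, here is a rough sketch. It is not how Claude, Cursor, or Windsurf actually implement it; `generate` is a hypothetical stand-in for whatever model call you use, `fake_model` is a made-up stub, and the "compiler" here is just Python's built-in `compile()` doing a syntax check.

```python
from typing import Callable, Optional


def check_syntax(source: str) -> Optional[str]:
    """Return None if the source compiles as Python, else a short error message."""
    try:
        compile(source, "<generated>", "exec")
        return None
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"


def generate_until_valid(
    generate: Callable[[str, Optional[str]], str],  # hypothetical model call
    task: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask for code, check it, and feed the error back until it compiles."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        candidate = generate(task, feedback)
        feedback = check_syntax(candidate)
        if feedback is None:
            return candidate
    return None  # gave up: every attempt failed the check


def fake_model(task: str, feedback: Optional[str]) -> str:
    """Stand-in for an LLM: broken on the first try, fixed once it sees an error."""
    if feedback is None:
        return "def add(a, b) return a + b"
    return "def add(a, b):\n    return a + b"


if __name__ == "__main__":
    print(generate_until_valid(fake_model, "write an add function"))
```

A syntax check only catches the cheapest class of mistakes. Real agent setups reportedly also run tests or linters in the same loop, which is where most of the value would be.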

-

When requirements are "Whatever" then by all means use the "Whatever" machine: https://eev.ee/blog/2025/07/03/the-rise-of-whatever/

And then look for a better gig because such an environment is going to be toxic to your skill set. The more exacting the shop, the better they pay.

The more exacting the shop, the better they pay.

That hasn't been my experience, but it sounds like good advice anyway. My experience has been that the more profitable the parent company, the better the job security and the better the pay too. Once "in," tune in to the culture and align with the people at your level and above who seem like they'll be sticking around long term. If the company isn't financially secure, all bets are off and you should be seeking, and taking, a better offer when you can find one.

I knocked around startups for 10/22 years (depending on how you characterize that one 12 year gig that ended with everybody laid off...) The pay was good enough, but job security just wasn't on the menu. Finally, one got bought by a big fish and I've been in the belly of the beast for 11 years now.

-

I think it's lemmy users. I see a lot more LLM skepticism here than in the news feeds.

In my experience, LLMs are like the laziest, shittiest know-nothing bozo forced to complete a task with zero attention to detail and zero care about whether it's crap, just doing enough to sound convincing.

I can't believe how absolutely silly a lot of you sound with this.

An LLM is a tool. Its output is dependent on the input. If that's the quality of answer you're getting, then it's a user error. I guarantee you that LLM answers for many problems are definitely adequate.

It's like if a carpenter said the cabinets turned out shit because his hammer only produces crap.

Also, another person commented that seeing the pattern you also see means we're psychotic.

All I'm trying to suggest is Lemmy is getting seriously manipulated by the media attitude towards LLMs and these comments I feel really highlight that.

-

LLMs are like a multitool, they can do lots of easy things mostly fine as long as it is not complicated and doesn't need to be exactly right. But they are being promoted as a whole toolkit as if they are able to be used to do the same work as effectively as a hammer, power drill, table saw, vise, and wrench.

It is truly terrible marketing. It's been obvious to me for years the value is in giving it to people and enabling them to do more with less, not outright replacing humans, especially not expert humans.

I use AI/LLMs pretty much every day now. I write MCP servers and automate things with it and it's mind blowing how productive it makes me.

Just today I used these tools in a highly supervised way to complete a task that would have been a full day of tedious work, all done in an hour. That is fucking fantastic, it means I get to spend that time on more important things.

It's like giving an accountant excel. Excel isn't replacing them, but it's taking care of specific tasks so they can focus on better things.

On the reliability and accuracy front there is still a lot to be desired, sure. But for supervised chats where it's calling my tools it's pretty damn good.

-

than reading an actual intro on an unfamiliar topic

The LLM helps me know what to look for in order to find that unfamiliar topic.

For example, I was tasked to support a file format that's common in a very niche field and never used elsewhere, and unfortunately shares an extension with a very common file format, so searching for useful data was nearly impossible. So I asked the LLM for details about the format and applications of it, provided what I knew, and it spat out a bunch of keywords that I then used to look up more accurate information about that file format. I only trusted the LLM output to the extent of finding related, industry-specific terms to search up better information.

Likewise, when looking for libraries for a coding project, none really stood out, so I asked the LLM to compare the popular libraries for solving a given problem. The LLM spat out a bunch of details that were easy to verify (and some were inaccurate), which helped me narrow what I looked for in that library, and the end result was that my search was done in like 30 min (about 5 min dealing w/ LLM, and 25 min checking the projects and reading a couple blog posts comparing some of the libraries the LLM referred to).

I think this use case is a fantastic use of LLMs, since they're really good at generating text related to a query.

It’s going to say something plausible, and you tautologically are not in a position to verify it.

I absolutely am though. If I am merely having trouble recalling a specific fact, asking the LLM to generate it is pretty reasonable. There are a ton of cases where I'll know the right answer when I see it, like it's on the tip of my tongue but I'm having trouble materializing it. The LLM might spit out two wrong answers along w/ the right one, but it's easy to recognize which is the right one.

I'm not going to ask it facts that I know I don't know (e.g. some historical figure's birth or death date), that's just asking for trouble. But I'll ask it facts that I know that I know, I'm just having trouble recalling.

The right use of LLMs, IMO, is to generate text related to a topic to help facilitate research. It's not great at doing the research though, but it is good at helping to formulate better search terms or generate some text to start from for whatever task.

general search on the web?

I agree, it's not great for general search. It's great for turning a nebulous question into better search terms.

It's a bit frustrating that finding these tools useful is so often met with it can't be useful for that, when it definitely is.

More than any other tool in history, LLMs have a huge dose of luck involved and a learning curve on how to ask the right things the right way. And those methods change and differ between models too.

-

One word of caution with AI search is that it's weirdly vulnerable to SEO.

If you search for "best X for Y" and a company has an article on their blog about how their product solves a problem the AI can definitely summarize that into a "users don't like that foolib because of ...". At least that's been my experience looking for software vendors.

-

I tried to dictate some documents recently without paying the big bucks for specialized software, and was surprised just how bad Google and Microsoft's speech recognition still is. Then I tried getting Word to transcribe some audio talks I had recorded, and that resulted in unreadable stuff with punctuation in all the wrong places. You could just about make out what it meant to say, so I tried asking various LLMs to tidy it up. That resulted in readable stuff that was largely made up and wrong, which also left out large chunks of the source material. In the end I just had to transcribe it all by hand.

It surprised me that these AI-ish products are still unable to transcribe speech coherently or tidy up a messy document without changing the meaning.

I don't know of basic solutions that are super good, but Whisper and the Whisper derivatives, I hear, are decent for dictation these days.

I have no idea how to run them though.
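On running it: a minimal sketch, assuming the open-source `openai-whisper` Python package (`pip install openai-whisper`) and `ffmpeg` installed on your system. The `"base"` model size and the helper names below are just illustrative choices, and I can't promise the output will be any better than what Word gave you.

```python
def format_timestamp(seconds: float) -> str:
    """Turn a number of seconds into MM:SS."""
    total = int(seconds)
    return f"{total // 60:02d}:{total % 60:02d}"


def format_segments(segments) -> str:
    """Render Whisper-style segments (dicts with 'start' and 'text') one per line."""
    lines = []
    for seg in segments:
        lines.append(f"[{format_timestamp(seg['start'])}] {seg['text'].strip()}")
    return "\n".join(lines)


def transcribe_file(path: str, model_name: str = "base") -> str:
    """Transcribe an audio file locally and return timestamped text."""
    import whisper  # imported here so the helpers above work without it installed

    model = whisper.load_model(model_name)  # downloads the weights on first use
    result = model.transcribe(path)         # dict with "text" and "segments"
    return format_segments(result["segments"])


if __name__ == "__main__":
    import sys

    print(transcribe_file(sys.argv[1]))
```

Save it as something like `transcribe.py` and run `python transcribe.py talk.mp3`. Larger model names trade speed for accuracy, so it may be worth trying one if the small model garbles things.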

-

-

-

NO KINGS! Tomorrow on Trump's birthday, we protest across the entire nation. Check the website for No Kings events near you!

Technology 2

2

-

Meta and Yandex are de-anonymizing Android users’ web browsing identifiers - Ars Technica

Technology 1

1

-

-

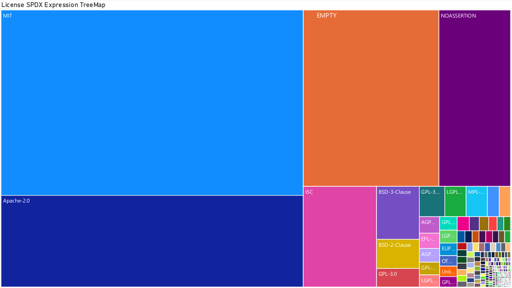

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1

-

-