Apple just proved AI "reasoning" models like Claude, DeepSeek-R1, and o3-mini don't actually reason at all. They just memorize patterns really well.

-

Unlike Markov models, modern LLMs use transformers that attend to full contexts, enabling them to simulate structured, multi-step reasoning (albeit imperfectly). While they don’t initiate reasoning like humans, they can generate and refine internal chains of thought when prompted, and emerging frameworks (like ReAct or Toolformer) allow them to update working memory via external tools. Reasoning is limited but not physically impossible; it’s evolving beyond simple pattern-matching toward more dynamic and compositional processing.

Reasoning is limited

Most people wouldn't call zero of something 'limited'.

-

Reasoning is limited

Most people wouldn't call zero of something 'limited'.

The paper doesn’t say LLMs can’t reason; it shows that their reasoning abilities are limited and collapse under increasing complexity or novel structure.

-

But it still manages to fuck it up.

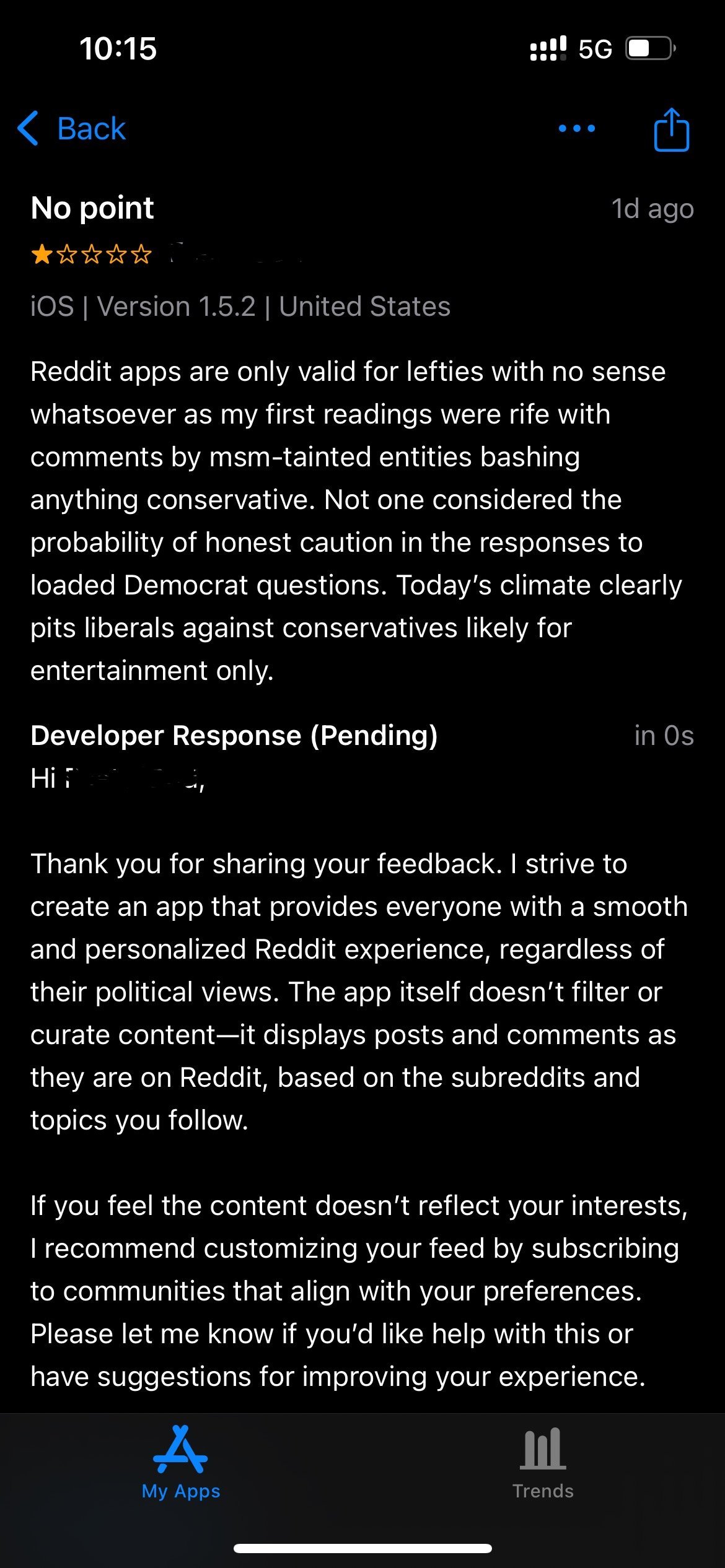

I've been experimenting with using Claude's Sonnet model in Copilot in agent mode for my job, and one of the things that's become abundantly clear is that it has certain types of behavior that are heavily represented in the model, so it assumes you want that behavior even if you explicitly tell it you don't.

Say you're working in a yarn workspaces project, and you instruct Copilot to build and test a new dashboard using an instruction file. You'll need to include explicit and repeated reminders all throughout the file to use yarn, not NPM, because even though yarn is very popular today, there are so many older examples of using NPM in its model that it's just going to assume that's what you actually want - thereby fucking up your codebase.

I've also had lots of cases where I tell it I don't want it to edit any code, just to analyze and explain something that's there and how to update it... and then I have to stop it from editing code anyway, because halfway through it forgot that I didn't want edits, just explanations.

I’ve also had lots of cases where I tell it I don’t want it to edit any code, just to analyze and explain something that’s there and how to update it… and then I have to stop it from editing code anyway, because halfway through it forgot that I didn’t want edits, just explanations.

I find it hilarious that the only people these LLMs mimic are the incompetent ones. I had a coworker that changed things when asked to explain constantly.

-

The paper doesn’t say LLMs can’t reason; it shows that their reasoning abilities are limited and collapse under increasing complexity or novel structure.

I agree with the author.

If these models were truly "reasoning," they should get better with more compute and clearer instructions.

The fact that they only work up to a certain point despite increased resources is proof that they are just pattern matching, not reasoning.

-

The "Apple" part. CEOs only care what companies say.

Apple is significantly behind and arrived late to the whole AI hype, so of course it's in their absolute best interest to keep showing how LLMs aren't special or amazingly revolutionary.

They're not wrong, but the motivation is also pretty clear.

-

"It's part of the history of the field of artificial intelligence that every time somebody figured out how to make a computer do something—play good checkers, solve simple but relatively informal problems—there was a chorus of critics to say, 'that's not thinking'." -Pamela McCorduck

It's called the AI Effect. As Larry Tesler puts it, "AI is whatever hasn't been done yet."

That entire paragraph is much better at supporting the precise opposite argument. Computers can beat Kasparov at chess, but they're clearly not thinking when making a move - even if we use the most open biological definitions for thinking.

-

I agree with the author.

If these models were truly "reasoning," they should get better with more compute and clearer instructions.

The fact that they only work up to a certain point despite increased resources is proof that they are just pattern matching, not reasoning.

Performance eventually collapses due to architectural constraints, which mirrors cognitive overload in humans: reasoning isn’t just about adding compute; it requires mechanisms like abstraction, recursion, and memory. The models’ collapse doesn’t prove “only pattern matching”. It shows that today’s models simulate reasoning in narrow bands but lack the structure to scale it reliably. That is a limitation of implementation, not a disproof of emergent reasoning.

-

LOOK MAA I AM ON FRONT PAGE

While I hate LLMs with a passion and my opinion of them boils down to their being glorified search engines and data scrapers, I would ask Apple: how sour are the grapes, eh?

edit: wording

-

This sort of thing has been published a lot for a while now, but why is it assumed that this isn't what human reasoning consists of? Isn't all our reasoning ultimately a form of pattern memorization? I sure feel like it is. So to me all these studies that prove they're "just" memorizing patterns don't prove anything other than that, unless coupled with research on the human brain to prove we do something different.

Humans apply judgment, because they have emotion. LLMs do not possess emotion. Mimicking emotion without ever actually having the capability of experiencing it is sociopathy. An LLM would at best apply patterns like a sociopath.

-

Apple is significantly behind and arrived late to the whole AI hype, so of course it's in their absolute best interest to keep showing how LLMs aren't special or amazingly revolutionary.

They're not wrong, but the motivation is also pretty clear.

Maybe they are so far behind because they jumped on the same train but then failed at achieving what they wanted based on the claims. And then they started digging around.

-

That entire paragraph is much better at supporting the precise opposite argument. Computers can beat Kasparov at chess, but they're clearly not thinking when making a move - even if we use the most open biological definitions for thinking.

No, it shows how certain people misunderstand the meaning of the word.

You have called NPCs in video games "AI" for a decade, yet you were never implying they were somehow intelligent. The whole argument is strangely inconsistent.

-

Like what?

I don’t think there’s any search engine better than Perplexity. And for scientific research Consensus is miles ahead.

On first read this sounded like you were challenging the basis of the previous comment. But then you went on to provide a couple of your own examples.

So on that basis after rereading your comment, it sounds like maybe you’re actually looking for recommendations.

I’ve seen a lot of praise for Kagi over the past year. I’ve finally started playing around with the free tier and I think it’s definitely worth checking out.

-

Humans apply judgment, because they have emotion. LLMs do not possess emotion. Mimicking emotion without ever actually having the capability of experiencing it is sociopathy. An LLM would at best apply patterns like a sociopath.

But for something like solving a Towers of Hanoi puzzle, which is what this study is about, we're not looking for emotional judgements - we're trying to evaluate the logical reasoning capabilities. A sociopath would be equally capable of solving logic puzzles compared to a non-sociopath. In fact, simple computer programs do a great job of solving these puzzles, and they certainly have nothing like emotions. So I'm not sure that emotions have much relevance to the topic of AI or human reasoning and problem solving, at least not this particular aspect of it.
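To illustrate the point about simple programs, here is a minimal sketch of the classic recursive Towers of Hanoi solver. The function names `hanoi` and `is_valid_solution` and the peg labels "A", "B", "C" are made up for this example; this is not the setup used in the study, just the textbook algorithm.

```python
def hanoi(n, source="A", target="C", spare="B"):
    """Return the moves (disk, from_peg, to_peg) that shift n disks from source to target."""
    if n == 0:
        return []
    # Move the n-1 smaller disks out of the way, move the largest disk,
    # then move the n-1 smaller disks on top of it.
    return (
        hanoi(n - 1, source, spare, target)
        + [(n, source, target)]
        + hanoi(n - 1, spare, target, source)
    )


def is_valid_solution(n, moves):
    """Replay the moves and check that every one is legal and the puzzle ends solved."""
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for disk, src, dst in moves:
        # The disk being moved must be on top of its source peg.
        if not pegs[src] or pegs[src][-1] != disk:
            return False
        # A larger disk may never be placed on a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(n, 0, -1))


if __name__ == "__main__":
    moves = hanoi(3)
    print(len(moves))                   # 7, i.e. 2**3 - 1
    print(is_valid_solution(3, moves))  # True
```

The solver always produces 2**n - 1 moves, so the move list grows exponentially with the number of disks even though the rule generating it stays this short.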

As for analogizing LLMs to sociopaths, I think that's a bit odd too. The reason why we (stereotypically) find sociopathy concerning is that a person has their own desires which, in combination with a disinterest in others' feelings, incentivizes them to be deceitful or harmful in some scenarios. But LLMs are largely designed specifically as servile, having no will or desires of their own. If people find it concerning that LLMs imitate emotions, then I think we're giving them far too much credit as sentient autonomous beings - and this is coming from someone who thinks they think in the same way we do! They think like we do, IMO, but they lack a lot of the other subsystems that are necessary for an entity to function in a way that can be considered as autonomous/having free will/desires of its own choosing, etc.

-

LOOK MAA I AM ON FRONT PAGE

Why would they "prove" something that's completely obvious?

The burden of proof is on the grifters who have overwhelmingly been making false claims and distorting language for decades.

-

Why would they "prove" something that's completely obvious?

The burden of proof is on the grifters who have overwhelmingly been making false claims and distorting language for decades.

They’re just using the terminology that’s widespread in the field. In a sense, the paper’s purpose is to prove that this terminology is unsuitable.

-

They’re just using the terminology that’s widespread in the field. In a sense, the paper’s purpose is to prove that this terminology is unsuitable.

I understand that people in this "field" regularly use pseudo-scientific language (I actually deleted that part of my comment).

But the terminology has never been suitable so it shouldn't be used in the first place. It pre-supposes the hypothesis that they're supposedly "disproving". They're feeding into the grift because that's what the field is. That's how they all get paid the big bucks.

-

Apple is significantly behind and arrived late to the whole AI hype, so of course it's in their absolute best interest to keep showing how LLMs aren't special or amazingly revolutionary.

They're not wrong, but the motivation is also pretty clear.

They need to convince investors that this delay wasn't due to incompetence. That argument will only be somewhat effective as long as there isn't an innovation that makes AI more effective.

If that happens, Apple shareholders will, at best, ask the company to increase investment in that area or, at worst, to restructure the company, which could also mean a change in CEO.

-

You know, despite not really believing LLM "intelligence" works anywhere like real intelligence, I kind of thought maybe being good at recognizing patterns was a way to emulate it to a point...

But that study seems to prove they're still not even good at that. At first I was wondering how hard the puzzles must have been, and then there's a bit about LLMs finishing 100-move Towers of Hanoi (on which they were trained) and failing 4-move river crossings. Logically, those problems are very similar... They also failed to apply a step-by-step solution they were given.

Computers are awesome at "recognizing patterns" as long as the pattern is a statistical average of some possibly worthless data set. And it really helps if the computer is set up ahead of time to recognize pre-determined patterns.

-

"It's part of the history of the field of artificial intelligence that every time somebody figured out how to make a computer do something—play good checkers, solve simple but relatively informal problems—there was a chorus of critics to say, 'that's not thinking'." -Pamela McCorduck

It's called the AI Effect. As Larry Tesler puts it, "AI is whatever hasn't been done yet."

I'm going to write a program to play tic-tac-toe. If y'all don't think it's "AI", then you're just haters. Nothing will ever be good enough for y'all. You want scientific evidence of intelligence?!?! I can't even define intelligence so take that! /s

Seriously tho. This person is arguing that a checkers program is "AI". It kinda demonstrates the loooong history of this grift.

-

No, it shows how certain people misunderstand the meaning of the word.

You have called NPCs in video games "AI" for a decade, yet you were never implying they were somehow intelligent. The whole argument is strangely inconsistent.

Who is "you"?

Just because some dummies supposedly think that NPCs are "AI", that doesn't make it so. I don't consider checkers to be a litmus test for "intelligence".