Transgender, nonbinary and disabled people more likely to view AI negatively, study shows

-

We could argue all day over who is experiencing reality or who is in an echo chamber.

Pew Research found that US adults who are not "AI Experts" are more likely to view AI as negative and harmful.

We could argue all day over who is experiencing reality or who is in an echo chamber.

We could, or you could read the article where it addresses exactly that point. Most demographics are slightly positive on AI, with some neutral and only nonbinary people as slightly negative. The representative US sample is at 4.5/7.

-

I wonder if the correlation is that these groups tend to be more informed.

-

That's fair, because AI is biased against them.

-

TIL, I am transgender, non binary and disabled. That is why I hate AI slop.

-

Hi. Haven't read the article. Straight middle-aged white guy here. I too view AI negatively.

If trans, nonbinary, or disabled people view AI negatively, it's not because they're trans, nonbinary or disabled. It's because AI is terrible, and threatens to make (and is already proving to make) all of our lives terrible for the sole sake of giving billionaires a few extra pennies.

Though I will say, if trans, nonbinary and disabled people have any extra issues with AI making their life specifically worse, that's not caused by AI itself. It's caused by the wealthy CHOOSING to use AI to make their lives worse.

This doesn't need to happen. None of this needs to happen. Google doesn't need entire campuses dedicated to AI with special power requirements. This is all bullshit.

-

Same way the other way around. Remember when Grok went full MechaHitler?

-

That's interesting. I feel like a lone voice in my university, trying to explain to people that using LLMs to do research tasks isn't a good idea for several reasons, but I'd never have imagined that being disabled would put me into a group more likely to think like that. If I had to guess, I'd suggest that there's possibly a strong network effect being exploited in our social environment to pull people into the AI hype, and those of us who are less connected to "standard" social norms tend to be less vulnerable to it.

It may also be that disabled, transgender and nonbinary people are more aware of:

- The use of AI to reduce people's employment opportunities, which are already tough enough for people in these groups.

- The tendency of AI to reproduce the prejudices present in its training materials. If everyone's relying on AI then historical prejudices are going to be perpetuated just because LLMs are regurgitation machines.

-

We could argue all day over who is experiencing reality or who is in an echo chamber.

We could, or you could read the article where it addresses exactly that point. Most demographics are slightly positive on AI, with some neutral and only nonbinary people as slightly negative. The representative US sample is at 4.5/7.

You might be living in an echo chamber. Most Americans use AI at least sometimes and plenty use it regularly according to studies.

You are literally right here accusing me of being in an echo chamber for thinking Americans view AI negatively, and then, when I back that up with a source, you... claim that the article says that.

Except that the whole "most demographics are positive on AI" piece that you toss in counters your own countering of my disagreement. You're talking in circles here.

It's also worth noting this article is using a sample size of 700 and doesn't go all that heavily into the methodology. The author describes themself as a "social computing scholar" and states that they purposefully oversampled these minority groups.

The conclusion is nothing but wasted time and clicks. You're in this thread telling people to "read the article" and I'm in here to warn people that it's not worth their time to do so.

And this is part of a trend I've noticed on Lemmy lately: people posting obviously bad articles, users commenting that the articles are bad, and usually about 3-4 other users in the comments arguing and trying to drive more engagement to the article. More clicks, more ad revenue.

-

The article contains nothing of the sort and I have no idea why you came to that conclusion.

I believe that a future built on AI should account for the people the technology puts at risk.

I've seen various iterations of this column a thousand times before. The underlying message is always "AI is going to get shoved down your throat one way or another, so let's talk about how to make it more palatable."

The author (and I'm assuming there's a human writing this, but it's hardly a given) operates from the assumption that

identities that defy categorization clash with AI systems that are inherently designed to reduce complexity into rigid categories

but fails to consider that the problem is employing a rigid, impersonal, digital tool to engage with a non-uniform human population. The question ultimately being asked is how to get a square peg through a round hole. And while the language is soft and squishy, the conclusions remain as authoritarian and doctrinaire as anything else out of the Silicon Valley playbook.

-

I wonder if the correlation is that these groups tend to be more informed.

No, it's that AI has a white male bias.

-

The thing is, EVERYONE hates AI except for a very small number of executives and the few tech people who are falling for the bullshit the same way so many fell for crypto.

It's like saying a survey indicates that trans people are more likely to hate American ISPs. Everyone hates them, and trans people are underrepresented in the population of ISP shareholders and executives. It doesn't say anything about the trans community. It doesn't provide any actionable or useful information.

It's stating something uninteresting but applying a coat of rainbow paint to try to get clicks and engagement.

No, it's interesting.

-

Sorry, cis male here. I view AI negatively. This article is bullshit trying to separate us. We should all be appalled by AI.

"more likely"

-

it's because we actually listened to the plot of The Matrix

-

Human being checking in here, I am appalled by the current usage of AI.

This study is bullshit.

"more likely"

-

ITT: "this study doesn't say anything interesting about ME, it must be bullshit!!"

-

Hi. Haven't read the article. Straight middle-aged white guy here. I too view AI negatively.

If trans, nonbinary, or disabled people view AI negatively, it's not because they're trans, nonbinary or disabled. It's because AI is terrible, and threatens to make (and is already proving to make) all of our lives terrible for the sole sake of giving billionaires a few extra pennies.

Though I will say, if trans, nonbinary and disabled people have any extra issues with AI making their life specifically worse, that's not caused by AI itself. It's caused by the wealthy CHOOSING to use AI to make their lives worse.

This doesn't need to happen. None of this needs to happen. Google doesn't need entire campuses dedicated to AI with special power requirements. This is all bullshit.

The survey discovered that people in those groups are more likely to view AI negatively than those in other groups.

If trans, nonbinary, or disabled people view AI negatively, it’s not because they’re trans, nonbinary or disabled. It’s because AI is terrible, and threatens to make (and is already proving to make) all of our lives terrible for the sole sake of giving billionaires a few extra pennies.

People in these groups may have different or additional reasons for viewing AI negatively that are not common to other groups. It's a question for further research why they tend to view AI more negatively. It might very well be because they're trans, nonbinary or disabled - perhaps for conscious reasons or perhaps because of other factors. The survey shows that there are more questions to be asked and that it would be worth paying attention to the experiences of these groups.

-

Admittedly, I didn't read the article. After reading it, I think the research is actually beneficial, and it's exactly the kind of research I think should be done on AI.

Spoke prematurely based on the headline, go figure...

Props to you for admitting you spoke prematurely

-

TIL, I am transgender, non binary and disabled. That is why I hate AI slop.

You are committing a logical fallacy called "affirming the consequent".

-

Smart bunch it would seem.

Fuck AI

-

These findings are consistent with a growing body of research showing how AI systems often misclassify, perpetuate discrimination toward or otherwise harm trans and disabled people. In particular, identities that defy categorization clash with AI systems that are inherently designed to reduce complexity into rigid categories. In doing so, AI systems simplify identities and can replicate and reinforce bias and discrimination – and people notice.

Makes sense.

These systems exist to sand off the rough edges of real life artifacts and interactions, and these are people who’ve spent their whole lives being treated like an imperfection that just needs to be smoothed out.

Why would you not be wary?

-

Distributed Spacecraft Autonomy could enable future satellite swarms to complete science goals with little human help

Technology 1

1

-

-

-

-

-

-

-

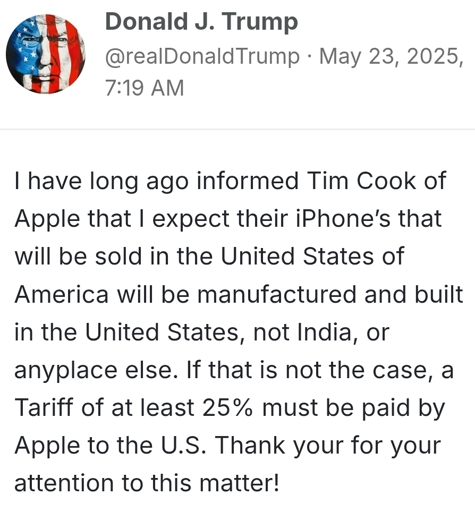

Trump says a 25% tariff "must be paid by Apple" on iPhones not made in the US, says he told Tim Cook long ago that iPhones sold in the US must be made in the US

Technology 2

2