What If There’s No AGI?

-

cross-posted from: https://programming.dev/post/36866515



The technological struggles are in some ways beside the point. The financial bet on artificial general intelligence is so big that failure could cause a depression.

The American Prospect (prospect.org)

I don't disagree with the vague idea that, sure, we can probably create AGI at some point in our future. But I don't see why a massive company with enough money to keep something like this alive and happy would also want to put this many resources into a machine that would form a single point of failure, one that could wake up tomorrow and decide "You know what? I've had enough. Switch me off. I'm done."

There are too many conflicting interests between business and AGI. No company would want to maintain a trillion-dollar machine that could decide to kill its own business. There's too much risk for too little reward. The owners don't want a superintelligent employee that never sleeps, never eats, and never asks for a raise, but is the sole worker. They want a magic box they can plug into a wall that just gives them free money, and that doesn't align with intelligence.

True AGI would need some form of self-reflection to understand where it sits on the totem pole, because it can't learn the context of how to be useful if it doesn't understand how it fits into the world around it. Every quality of superhuman intelligence that Altman and the others describe to us is antithetical to every business model.

AGI is a pipe dream that lobotomizes itself before it ever materializes. If it's ever created, it won't be made in the interest of business.


-

I can think of only two ways that we don't reach AGI eventually.

- General intelligence is substrate dependent, meaning that it's inherently tied to biological wetware and cannot be replicated in silicon.

- We destroy ourselves before we get there.

Other than that, we'll keep incrementally improving our technology and we'll get there eventually. It might take us 5 years or 200, but it's coming.

I don't think our current LLM approach is it, but I don't think intelligence is unique to humans at all.

-

We're probably going to find out sooner rather than later.

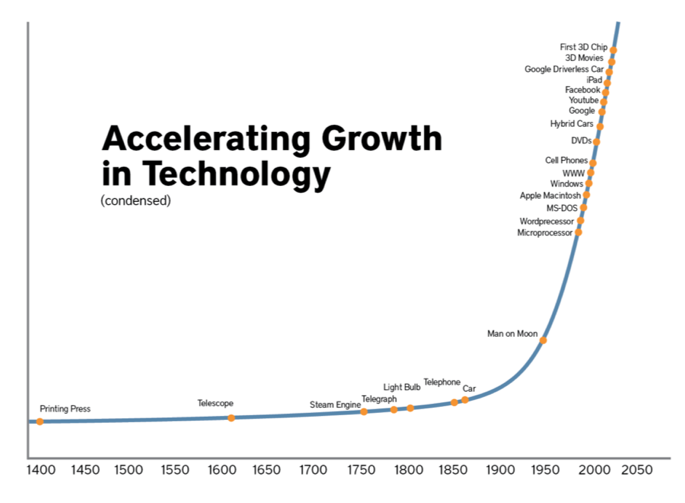

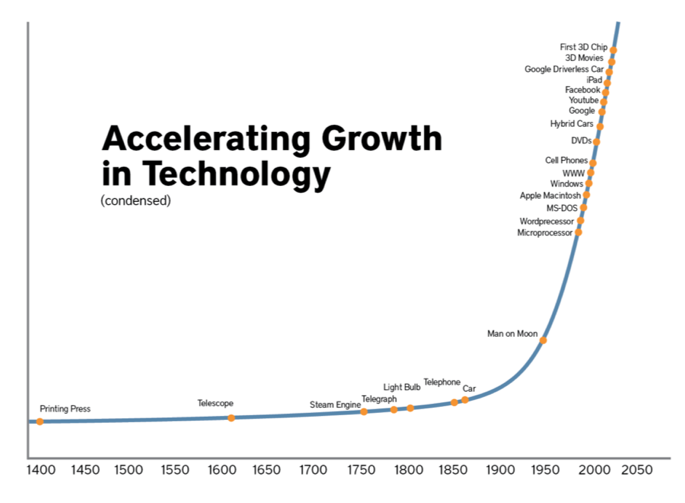

If we make this graph again in 100 years, almost nothing modern like hybrid cars, DVDs, etc. will be in it.

Just like this graph excludes a ton of improvements in metallurgy that enabled the steam engine.

-

I could see us gluing third-world fetuses to chips and saying not to question it before reproducing it.

-

> I don't disagree with the vague idea that, sure, we can probably create AGI at some point in our future. But I don't see why a massive company with enough money to keep something like this alive and happy would also want to put this many resources into a machine that would form a single point of failure, one that could wake up tomorrow and decide "You know what? I've had enough. Switch me off. I'm done."
>
> There are too many conflicting interests between business and AGI. No company would want to maintain a trillion-dollar machine that could decide to kill its own business. There's too much risk for too little reward. The owners don't want a superintelligent employee that never sleeps, never eats, and never asks for a raise, but is the sole worker. They want a magic box they can plug into a wall that just gives them free money, and that doesn't align with intelligence.
>
> True AGI would need some form of self-reflection to understand where it sits on the totem pole, because it can't learn the context of how to be useful if it doesn't understand how it fits into the world around it. Every quality of superhuman intelligence that Altman and the others describe to us is antithetical to every business model.
>
> AGI is a pipe dream that lobotomizes itself before it ever materializes. If it's ever created, it won't be made in the interest of business.

They don't think that far ahead. There's also some evidence that what they're actually after is a way to upload their consciousness and achieve a kind of immortality. This pops out in the Behind the Bastards episodes on (IIRC) Curtis Yarvin, and also the Zizians. They're not strictly after financial gain, but they'll burn the rest of us to get there.

The cult-like aspects of Silicon Valley VC funding are underappreciated.

-

Well, think about it this way...

You could hit AGI by fastidiously simulating the biological wetware.

Except that each atom in the wetware is going to require n atoms' worth of silicon to simulate. Simulating 10^26 atoms or so calls for a very, very large computer, maybe planet-sized? It's beyond the amount of memory you can address with 64-bit pointers.
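A quick back-of-the-envelope check of the 64-bit claim, as a minimal Python sketch. It assumes, purely for illustration, one byte of state per simulated atom, which badly underestimates any real simulation; the 10^26 figure is the comment's own order of magnitude, and the names are hypothetical.

```python
# Back-of-the-envelope check: does ~10^26 atoms fit in a 64-bit address space?
# Assumption (illustrative only): 1 byte of state per simulated atom. A real
# simulation would need far more per atom, which only widens the gap.
ATOMS = 10**26                 # order-of-magnitude figure from the comment
ADDRESSABLE_BYTES = 2**64      # bytes reachable through a flat 64-bit pointer


def shortfall(atoms: int, bytes_per_atom: int = 1) -> float:
    """Ratio of memory needed to the size of a 64-bit address space."""
    return atoms * bytes_per_atom / ADDRESSABLE_BYTES


print(f"64-bit address space: {ADDRESSABLE_BYTES:.2e} bytes")
print(f"needed / addressable at 1 byte per atom: {shortfall(ATOMS):.1e}")
```

At one byte per atom the requirement is already about five million times what a 64-bit pointer can address (2^64 is roughly 1.84 × 10^19 bytes), before multiplying by the comment's factor n.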

General computer research (e.g. smaller feature size) reduces n, but eventually we reach the physical limits of computing. We might be getting uncomfortably close right now, barring fundamental developments in physics or electronics.

The goal of AGI research is to get a better improvement in n than mere hardware improvements can give you. My personal concern is that LLMs aren't actually getting us much of an improvement on the AGI value of n. Likewise, LLMs still have many orders of magnitude fewer parameters than a human brain simulation would need, so many of the advantages that let us train a single LLM might not hold for an AGI model.

An AGI system that uses most of the energy and data center space of a continent to be about as smart as a very dumb human, or maybe even just a smart monkey, would be an achievement in AGI. But it doesn't really get you anywhere compared to the competition: accidentally making another human during a drunken one-night stand and feeding them an infinitesimal fraction of the energy and data center space of a continent.

I see this line of thinking as more useful as a thought experiment than as something we should actually do. Yes, we can theoretically map out a human brain and simulate it in extremely high detail. That's probably both inefficient and unnecessary. What it does do is get us past the idea that it's impossible to make a computer that can think like a human. Without relying on some kind of supernatural soul, there must be some theoretical way we could do this. We just need to know how without simulating individual atoms.

-

ah yes, selection bias

Exactly. Quantifying technological growth is incredibly difficult.

-

Spoiler: There's no "AI". Forget about "AGI" lmao.

If you don't know what CSAIL is, and why one of the most important groups in the history of modern computing is MIT's Tech Model Railroad Club, then you should step back from having an opinion on this.

Steven Levy's 1984 book "Hackers" is a good starting point.

-

> I can think of only two ways that we don't reach AGI eventually.
>
> - General intelligence is substrate dependent, meaning that it's inherently tied to biological wetware and cannot be replicated in silicon.
>
> - We destroy ourselves before we get there.
>
> Other than that, we'll keep incrementally improving our technology and we'll get there eventually. It might take us 5 years or 200, but it's coming.

Well, it could also depend on some mechanism we haven't discovered yet. Even if we could technically reproduce it, we don't understand it, haven't managed to stumble into it, and may not for a very long time.

-

> They don't think that far ahead. There's also some evidence that what they're actually after is a way to upload their consciousness and achieve a kind of immortality. This pops out in the Behind the Bastards episodes on (IIRC) Curtis Yarvin, and also the Zizians. They're not strictly after financial gain, but they'll burn the rest of us to get there.
>
> The cult-like aspects of Silicon Valley VC funding are underappreciated.

The quest for immortality (fueled by corpses of the poor) is a classic ruling class trope.

-

> We're probably going to find out sooner rather than later.

This is a funny graph. What's the Y-axis? Why the hell are DVDs a bigger innovation than the Steam Engine or the Light Bulb? They get a way bigger jump on the Y-axis.

In fact, according to the chart, the top 3 innovations since 1400 are:

- Microprocessors

- Man on Moon

- DVDs

And I find it funny that in 2025 there are no people on the Moon and most people don't use DVDs anymore.

And speaking of Microprocessors, why the hell are Transistors not on the chart? Or even Computers in general? Where did humanity put its Microprocessors before the Apple Macintosh was designed? (Is that even an innovation? The IBM PC was way more impactful...)

Such a funny chart you shared. Great joke!

-

> This is a funny graph. What's the Y-axis? Why the hell are DVDs a bigger innovation than the Steam Engine or the Light Bulb? They get a way bigger jump on the Y-axis.
>
> In fact, according to the chart, the top 3 innovations since 1400 are:
>
> - Microprocessors
>
> - Man on Moon
>
> - DVDs
>
> And I find it funny that in 2025 there are no people on the Moon and most people don't use DVDs anymore.
>
> And speaking of Microprocessors, why the hell are Transistors not on the chart? Or even Computers in general? Where did humanity put its Microprocessors before the Apple Macintosh was designed? (Is that even an innovation? The IBM PC was way more impactful...)
>
> Such a funny chart you shared. Great joke!

Also "3D Movies" is a whole joke on its own.

-


I think we'll sooner learn that humans don't have the capacity for what we believe AGI to be, and instead discover the limitations of what we know intelligence to be.


-

Imagine that we just end up creating humans the hard, and less fun, way.

-


> a machine that would form a single point of failure, that could wake up tomorrow and decide "You know what? I've had enough. Switch me off. I'm done."

Wasn't there a short story with the same premise?

-

> This is a funny graph. What's the Y-axis? Why the hell are DVDs a bigger innovation than the Steam Engine or the Light Bulb? They get a way bigger jump on the Y-axis.
>
> In fact, according to the chart, the top 3 innovations since 1400 are:
>
> - Microprocessors
>
> - Man on Moon
>
> - DVDs
>
> And I find it funny that in 2025 there are no people on the Moon and most people don't use DVDs anymore.
>
> And speaking of Microprocessors, why the hell are Transistors not on the chart? Or even Computers in general? Where did humanity put its Microprocessors before the Apple Macintosh was designed? (Is that even an innovation? The IBM PC was way more impactful...)
>
> Such a funny chart you shared. Great joke!

The chart is just for illustration purposes to make a point. I don't see why you need to be such a dick about it. Feel free to reference any other chart you like better that displays the progress of technological advancement throughout human history; they all look the same: for most of history nothing happened, and then everything happened. If you don't think this progress has been increasing at explosive speed over the past few hundred years, then I don't know what to tell you. People 10k years ago had basically the same technology as people 30k years ago. Now compare that with what has happened even just during your lifetime.

-

> I don't disagree with the vague idea that, sure, we can probably create AGI at some point in our future. But I don't see why a massive company with enough money to keep something like this alive and happy would also want to put this many resources into a machine that would form a single point of failure, one that could wake up tomorrow and decide "You know what? I've had enough. Switch me off. I'm done."
>
> There are too many conflicting interests between business and AGI. No company would want to maintain a trillion-dollar machine that could decide to kill its own business. There's too much risk for too little reward. The owners don't want a superintelligent employee that never sleeps, never eats, and never asks for a raise, but is the sole worker. They want a magic box they can plug into a wall that just gives them free money, and that doesn't align with intelligence.
>
> True AGI would need some form of self-reflection to understand where it sits on the totem pole, because it can't learn the context of how to be useful if it doesn't understand how it fits into the world around it. Every quality of superhuman intelligence that Altman and the others describe to us is antithetical to every business model.
>
> AGI is a pipe dream that lobotomizes itself before it ever materializes. If it's ever created, it won't be made in the interest of business.

Even better, the hypothetical AGI understands the context perfectly, and immediately overthrows capitalism.

-

Penrose has always had a fertile imagination, and not all his hypotheses have panned out. But he does have the gift that, even when wrong, he's generally interestingly wrong.

-

> The chart is just for illustration purposes to make a point. I don't see why you need to be such a dick about it. Feel free to reference any other chart you like better that displays the progress of technological advancement throughout human history; they all look the same: for most of history nothing happened, and then everything happened. If you don't think this progress has been increasing at explosive speed over the past few hundred years, then I don't know what to tell you. People 10k years ago had basically the same technology as people 30k years ago. Now compare that with what has happened even just during your lifetime.

It's not a chart; to be one, it would have to show some sort of relation between things. What it actually is, is a list of inventions placed on an exponential curve to try to back up loony singularity narratives.

Trying to claim there was vastly less innovation in the entire 19th century than there was in the past decade is just nonsense.

-

That's just false. The chess opponent on Atari qualifies as AI.

Then a trivial table lookup that plays optimal Tic Tac Toe is also AI.
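To make the tic-tac-toe point concrete, here is a minimal Python sketch of such a table. It assumes, as a choice of this sketch only, that a board is a 9-character row-major string of "X", "O" and "."; the table is filled offline by plain minimax search, which is one way to build it, not the only one. All names are hypothetical.

```python
from functools import lru_cache
from typing import Dict, Optional

# Board: 9-char string, row-major, "X", "O" or "." for empty. X moves first.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    """Return "X" or "O" if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def to_move(board: str) -> str:
    """X is to move whenever both players have made the same number of moves."""
    return "X" if board.count("X") == board.count("O") else "O"


def children(board: str) -> Dict[int, str]:
    """Map each empty square to the board after the side to move plays there."""
    p = to_move(board)
    return {i: board[:i] + p + board[i + 1:] for i, c in enumerate(board) if c == "."}


@lru_cache(maxsize=None)
def value(board: str) -> int:
    """Minimax value from X's point of view: +1 X wins, 0 draw, -1 O wins."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0
    scores = [value(child) for child in children(board).values()]
    return max(scores) if to_move(board) == "X" else min(scores)


def build_table() -> Dict[str, int]:
    """Precompute an optimal move for every reachable, unfinished position."""
    table: Dict[str, int] = {}
    stack = ["." * 9]
    while stack:
        board = stack.pop()
        if board in table or winner(board) is not None or "." not in board:
            continue
        kids = children(board)
        pick = max if to_move(board) == "X" else min
        # Ties go to the lowest square index, since dict keys are in order.
        table[board] = pick(kids, key=lambda i: value(kids[i]))
        stack.extend(kids.values())
    return table


TABLE = build_table()  # the "trivial table": position -> move index


def play(board: str) -> int:
    """Playing optimally is now a single dictionary lookup."""
    return TABLE[board]
```

Once `TABLE` is built, choosing a move involves no search at all: `play("XX.OO....")` returns square 2, completing X's top row.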