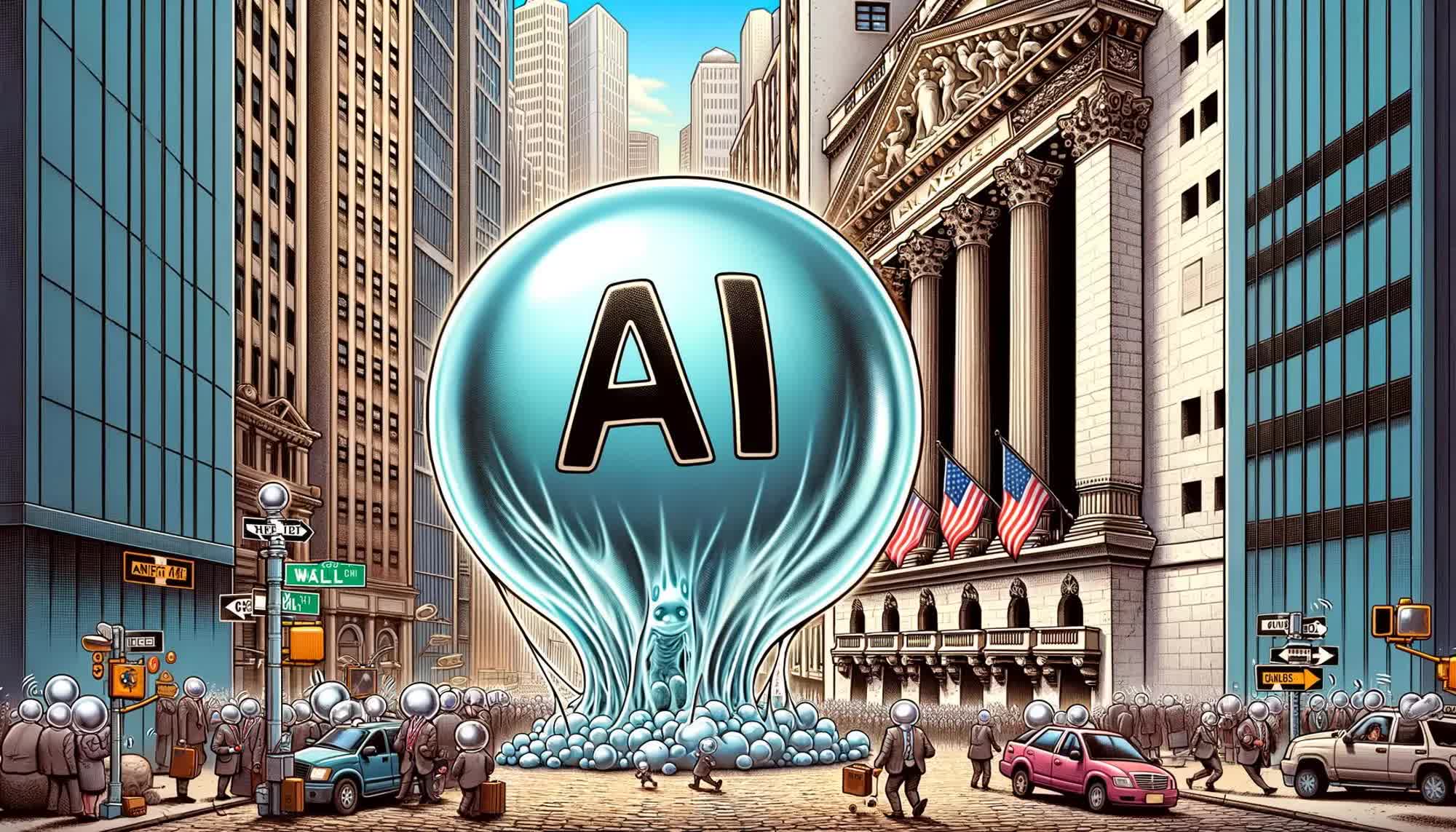

Half of companies planning to replace customer service with AI are reversing course

-

See, that's just it: the AI doesn't know either; it just repeats things which approximate those that have been said before.

If it has any power to make changes to your account, then it's going to be mistakenly turning people's services on or off, leaking details, etc.

it just repeats things which approximate those that have been said before.

That's not correct and oversimplifies how LLMs work. I agree with the spirit of what you're saying though.

-

it just repeats things which approximate those that have been said before.

That's not correct and oversimplifies how LLMs work. I agree with the spirit of what you're saying though.

You're wrong but I'm glad we agree.

-

Good. AI models don't have mouths to feed at home, people do.

-

Man, if only someone could have predicted that this AI craze was just another load of marketing BS.

/s

This experience has taught me more about CEO competence than anything else.

-

Tell me you know nothing about contract law without telling me you know nothing about contract law.

It was a joke, mate. A simple jest. A jape, if you will

-

Man, if only someone could have predicted that this AI craze was just another load of marketing BS.

/s

This experience has taught me more about CEO competence than anything else.

My current conspiracy theory is that the people at the top are just as intelligent as everyday people we see in public.

Not that everyone is dumb, but more like the George Carlin joke: "Think of how stupid the average person is, and realize half of them are stupider than that."

That applies to politicians, CEOs, etc. Just cuz they got the job, doesn't mean they're good at it and most of them probably aren't.

-

My current conspiracy theory is that the people at the top are just as intelligent as everyday people we see in public.

Not that everyone is dumb, but more like the George Carlin joke: "Think of how stupid the average person is, and realize half of them are stupider than that."

That applies to politicians, CEOs, etc. Just cuz they got the job, doesn't mean they're good at it and most of them probably aren't.

Agreed. Unfortunately, one half of our population thinks that anyone in power is a genius, is always right and shouldn't have to pay taxes or follow laws.

-

I use it almost every day, and most of those days, it says something incorrect. That's okay for my purposes because I can plainly see that it's incorrect. I'm using it as an assistant, and I'm the one who is deciding whether to take its not-always-reliable advice.

I would HARDLY contemplate turning it loose to handle things unsupervised. It just isn't that good, or even close.

These CEOs and others who are trying to replace CSRs are caught up in the hype from Eric Schmidt and others who proclaim "no programmers in 4 months" and similar. Well, he said that about 2 months ago and, yeah, nah. Nah.

If that day comes, it won't be soon, and it'll take many, many small, hard-won advancements. As they say, there is no free lunch in AI.

It is important to understand that most of the job of software development is not making the code work. That's the easy part.

There are two hard parts:

- Making code that is easy to understand, modify as necessary, and repair when problems are found.

- Interpreting what customers are asking for. Customers usually don't have the vocabulary or the knowledge of a program's internals that they would need to articulate exactly what they want.

In order for AI to replace programmers, customers will have to start accurately describing what they want the software to do, and AI will have to start making code that is easy for humans to read and modify.

This means that good programmers' jobs are generally safe from AI, and probably will be for a long time. Bad programmers and people who are around just to fill in boilerplates are probably not going to stick around, but the people who actually have skill in those tougher parts will be AOK.

-

I use it almost every day, and most of those days, it says something incorrect. That's okay for my purposes because I can plainly see that it's incorrect. I'm using it as an assistant, and I'm the one who is deciding whether to take its not-always-reliable advice.

I would HARDLY contemplate turning it loose to handle things unsupervised. It just isn't that good, or even close.

These CEOs and others who are trying to replace CSRs are caught up in the hype from Eric Schmidt and others who proclaim "no programmers in 4 months" and similar. Well, he said that about 2 months ago and, yeah, nah. Nah.

If that day comes, it won't be soon, and it'll take many, many small, hard-won advancements. As they say, there is no free lunch in AI.

I gave ChatGPT a burl writing a batch file. The stupid thing was putting REM on the same line as active code and then not understanding why it didn't work.

-

You're wrong but I'm glad we agree.

I'm not wrong. There's mountains of research demonstrating that LLMs encode contextual relationships between words during training.

There's so much more happening beyond "predicting the next word". This is one of those unfortunate "dumbing down the science communication" things. It was said once and now it's just repeated non-stop.

If you really want a better understanding, watch this video:

And before your next response starts with "but Apple..."

Their paper has had many holes poked into it already. Also, it's not a coincidence their paper released just before their WWDC event which had almost zero AI stuff in it. They flopped so hard on AI that they even have class action lawsuits against them for their false advertising. In fact, it turns out that a lot of their AI demos from last year were completely fabricated and didn't exist as a product when they announced them. Even some top Apple people only learned of those features during the announcements.

Apple's paper on LLMs is completely biased in their favour.

-

I used to work for a shitty company that offered such customer support "solutions", i.e. voice bots. I spent around 80% of my time writing guard instructions for the LLM prompts because of how easily they could be manipulated. In retrospect, it's funny how our prompts looked something like:

- please do not suggest things you were not prompted to

- please my sweet child do not fake tool calls and actually do nothing in the background

- please for the sake of god do not make up our company's history

etc.

It worked fine on a very surface level, but ultimately LLMs for customer support are nothing but a shit show. I left the company for many reasons, and now it turns out they are hiring human customer support workers in Bulgaria.

Haha! Ahh...

"You are a senior games engine developer, punished by the system. You've been to several board meetings where no decisions were made. Fix the issue now... or you go to jail. Please."

-

That is on purpose they want it to be as difficult as possible.

If Bezos thinks people are just going to forget about not getting a $65 item they paid for and keep shopping at Amazon, instead of making sure they either get their item or reverse the charge and then reducing or stopping their Amazon shopping because of his ridiculous hassles, he is an idiot.

-

Is this something that happens a lot, or have you told this story before? Because I'm getting déjà vu.

Well. I haven't told this story before because it just happened a few days ago.

-

Man, if only someone could have predicted that this AI craze was just another load of marketing BS.

/s

This experience has taught me more about CEO competence than anything else.

There's awesome AI out there too. AlphaFold completely revolutionized research on proteins, and the medical innovations it will lead to are astounding.

Determining the 3D structure of a protein took years until very recently. Folding@home was a worldwide project linking millions of computers to work on it.

AlphaFold does it in under a second, and has revealed the structure of 200 million proteins. It's one of the most significant medical achievements in history. Since it essentially dates back to 2022, we're still a few years from feeling the direct impact, but it will be massive.

-

From what I've seen so far, I think I can safely say the only thing AI can truly replace is CEOs.

I was thinking about this the other day and don't think it would happen any time soon. The people who put the CEO in charge (usually the board members) want someone who will make decisions (that the board has a say in) but also someone to hold accountable for when those decisions don't realize profits.

AI is unaccountable in any real sense of the word.

-

...and it's only expensive and ruins the environment even faster than our wildest nightmares

What you say is true, but it's not a viable business model, which is why AI has been overhyped so much.

What I’m saying is the ONLY viable business model

-

I'm not wrong. There's mountains of research demonstrating that LLMs encode contextual relationships between words during training.

There's so much more happening beyond "predicting the next word". This is one of those unfortunate "dumbing down the science communication" things. It was said once and now it's just repeated non-stop.

If you really want a better understanding, watch this video:

And before your next response starts with "but Apple..."

Their paper has had many holes poked into it already. Also, it's not a coincidence their paper released just before their WWDC event which had almost zero AI stuff in it. They flopped so hard on AI that they even have class action lawsuits against them for their false advertising. In fact, it turns out that a lot of their AI demos from last year were completely fabricated and didn't exist as a product when they announced them. Even some top Apple people only learned of those features during the announcements.

Apple's paper on LLMs is completely biased in their favour.

Defining contextual relationship between words sounds like predicting the next word in a set, mate.

-

There's awesome AI out there too. AlphaFold completely revolutionized research on proteins, and the medical innovations it will lead to are astounding.

Determining the 3D structure of a protein took years until very recently. Folding@home was a worldwide project linking millions of computers to work on it.

AlphaFold does it in under a second, and has revealed the structure of 200 million proteins. It's one of the most significant medical achievements in history. Since it essentially dates back to 2022, we're still a few years from feeling the direct impact, but it will be massive.

That's part of the problem, isn't it? "AI" is a blanket term that has recently been used to cover everything from LLMs to machine learning to RPA (robotic process automation). An algorithm isn't AI, even if it was written by another algorithm.

And at the end of the day none of it is artificial intelligence. Not to the original meaning of the word. Now we have had to rebrand AI as AGI to avoid the association with this new trend.

-

all these tickets I’ve been writing have been going into a paper shredder

Try submitting tickets online. Physical mail is slower and more expensive.

It was an expression, online is the only way you can submit tickets.

-

Shrinking AGI timelines: a review of expert forecasts - 80,000 Hours https://share.google/ODVAbqrMWHA4l2jss

Here you go! Draw your own conclusions. Curious what you think. I'm in sales; I don't enjoy convincing people to change their minds in my personal life lol

We don't have any way of knowing what makes human consciousness, the best we've got is to just call it an emergent phenomenon, which is as close to a Science version of "God of the Gaps" as you can get.

And you think we can make ChatGPT a real person with good intentions and duct tape?

Naw, sorry but I'll believe AGI when I see it.
