Apple just proved AI "reasoning" models like Claude, DeepSeek-R1, and o3-mini don't actually reason at all. They just memorize patterns really well.

-

You're either an LLM, or you don't know how your brain works.

LLMs don't know how they work.

-

Yeah, well there are a ton of people literally falling into psychosis, led by LLMs. So it’s unfortunately not that many people that already knew it.

Dude, they made ChatGPT a little more bootlicky and now many people are convinced they are literal messiahs. All it took for them was a chatbot and a few hours of talk.

-

LLMs (at least in their current form) are proper neural networks.

Well, technically, yes. You're right. But they're a specific, narrow type of neural network, while I was thinking of the broader class and more traditional applications, like data analysis. I should have been more specific.

-

Fucking obviously. Until Data's positronic brain becomes reality, AI is not actual intelligence.

AI is not A I. I should make that a tshirt.

It’s an expensive carbon spewing parrot.

-

"if you put in the wrong figures, will the correct ones be output"

To be fair, an 1840 “computer” might be able to tell there was something wrong with the figures and ask about it or even correct them herself.

Babbage was being a bit obtuse there; people weren't familiar with computing machines yet. Computer was a job, and computers were expected to be fairly intelligent.

In fact I'd say that if anything this question shows that the questioner understood enough about the new machine to realise it was not the same as they understood a computer to be, and lacked many of their abilities, and was just looking for Babbage to confirm their suspicions.

"Computer" meaning a mechanical/electro-mechanical/electrical machine wasn't in use until around WWII or after.

Babbage's difference/analytical engines weren't confusing because people called them computers; they didn't.

"On two occasions I have been asked, 'Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?' I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question."

- Charles Babbage

If you give any computer, human or machine, random numbers, it will not give you "correct answers".

It's possible Babbage lacked the social skills to detect sarcasm. We also have several high profile cases of people just trusting LLMs to file legal briefs and official government 'studies' because the LLM "said it was real".

-

LOOK MAA I AM ON FRONT PAGE

I think it's important to note (I'm not an LLM; I know that phrase triggers you to assume I am) that they haven't proven this is an inherent architectural issue, which I think would be the next step for that assertion.

Do we know that they don't reason and are incapable of it, or do we just know that for x problems they jump to memorized solutions? Is it possible to create an arrangement of weights that can genuinely reason, even if the current models don't? That's the big question that needs answering. It's still possible that we just haven't properly incentivized reasoning over memorization during training.

if someone can objectively answer "no" to that, the bubble collapses.

-

What's hilarious/sad is the response to this article over on reddit's "singularity" sub, in which all the top comments are people who've obviously never got all the way through a research paper in their lives all trashing Apple and claiming their researchers don't understand AI or "reasoning". It's a weird cult.

-

NOOOOOOOOO

SHIIIIIIIIIITT

SHEEERRRLOOOOOOCK

-

Most humans don't reason. They just parrot shit too. The design is very human.

I hate this analogy. As a throwaway whimsical quip it'd be fine, but it's specious enough that I keep seeing it used earnestly by people who think that LLMs are in any way sentient or conscious, so it's lowered my tolerance for it as a topic even if you did intend it flippantly.

-

NOOOOOOOOO

SHIIIIIIIIIITT

SHEEERRRLOOOOOOCK

Except for Siri, right? Lol

-

Except for Siri, right? Lol

Apple Intelligence

-

It’s an expensive carbon spewing parrot.

It's a very resource intensive autocomplete

-

Fair, but the same is true of me. I don't actually "reason"; I just have a set of algorithms memorized by which I propose a pattern that seems like it might match the situation, then a different pattern by which I break the situation down into smaller components and then apply patterns to those components. I keep the process up for a while. If I find a "nasty logic error" pattern match at some point in the process, I "know" I've found a "flaw in the argument" or "bug in the design".

But there's no from-first-principles method by which I developed all these patterns; it's just things that have survived the test of time when other patterns have failed me.

I don't think people are underestimating the power of LLMs to think; I just think people are overestimating the power of humans to do anything other than language prediction and sensory pattern prediction.

This whole era of AI has certainly pushed us to the brink of existential crisis territory. I think some are even frightened to entertain the prospect that we may not be all that much better than meat machines that, on a basic level, do pattern matching drawing from the sum total of individual life experience (aka the dataset).

Higher reasoning is taught to humans. We have the capability. That's why we spend the first quarter of our lives in education. Sometimes not all of us are able.

I'm sure it would certainly make waves if researchers did studies based on whether dumber humans are any different than AI.

-

I see a lot of misunderstandings in the comments 🫤

This is a pretty important finding for researchers, and it's not obvious by any means. This finding is not showing a problem with LLMs' abilities in general. The issue they discovered is specifically for so-called "reasoning models" that iterate on their answer before replying. It might indicate that the training process is not sufficient for true reasoning.

Most reasoning models are not incentivized to think correctly, and are only rewarded based on their final answer. This research might indicate that's a flaw that needs to be corrected before models can actually reason.
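To make the "only rewarded based on their final answer" point concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the function names, the toy `check_arithmetic` step checker, and the 50/50 weighting are assumptions for illustration, not how any particular lab trains its models.

```python
from typing import Callable, List


def outcome_reward(final_answer: str, expected: str) -> float:
    """Reward that looks only at the final answer; the steps are never inspected."""
    return 1.0 if final_answer.strip() == expected.strip() else 0.0


def process_reward(
    steps: List[str],
    final_answer: str,
    expected: str,
    step_is_valid: Callable[[str], bool],
) -> float:
    """Hypothetical alternative: half the reward comes from the fraction of valid steps."""
    if not steps:
        return outcome_reward(final_answer, expected)
    valid_fraction = sum(step_is_valid(s) for s in steps) / len(steps)
    # The 0.5 / 0.5 split is an arbitrary choice for this sketch.
    return 0.5 * outcome_reward(final_answer, expected) + 0.5 * valid_fraction


def check_arithmetic(step: str) -> bool:
    """Toy checker: a step such as '2 + 2 = 4' is valid if the addition holds."""
    left, right = step.split("=")
    a, b = left.split("+")
    return int(a) + int(b) == int(right)


sound = ["2 + 2 = 4", "4 + 3 = 7"]  # correct steps, correct answer
lucky = ["2 + 2 = 5", "5 + 3 = 7"]  # broken steps, same final answer

# Outcome-only scoring cannot tell the two traces apart.
print(outcome_reward("7", "7"), outcome_reward("7", "7"))
# Step-aware scoring can.
print(process_reward(sound, "7", "7", check_arithmetic))
print(process_reward(lucky, "7", "7", check_arithmetic))
```

Under the outcome-only reward both traces score the same, so nothing in the training signal pushes the model away from the broken one. The hard part in practice is the step checker, which is trivial here only because the steps are toy arithmetic.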

-

An LLM also works on fixed transition probabilities. All the training is done during the generation of the weights, which are the compressed state transition table. After that, it's just a regular old Markov chain. I don't know why you seem so fixated on getting different output if you provide different input (as I said, each token generated is a separate, independent invocation of the LLM with a different input). That is true of most computer programs.

It's just an implementation detail. The Markov chains we are used to have a very short context, due to combinatorial explosion when generating the state transition table. With LLMs, we can use a much, much longer context. Put that context in, it runs through the completely immutable model, and out comes a probability distribution. Any calculations done while computing this probability distribution are then discarded, the chosen token is added to the context, and the program is run again with zero prior knowledge of any reasoning about the token it just generated. It's a separate execution with absolutely nothing shared between them, so there can't be any "adapting" going on.

Because transformer architecture is not equivalent to a probabilistic lookup. A Markov chain assigns probabilities based on a fixed-order state transition, without regard to deeper structure or token relationships. An LLM processes the full context through many layers of non-linear functions and attention heads, each layer dynamically weighting how each token influences every other token.

Although weights do not change during inference, the behavior of the model is not fixed in the way a Markov chain’s state table is. The same model can respond differently to very similar prompts, not just because the inputs differ, but because the model interprets structure, syntax, and intent in ways that are contextually dependent. That is not just longer context; it is fundamentally more expressive computation.

The process is stateless across calls, yes, but it is not blind. All relevant information lives inside the prompt, and the model uses the attention mechanism to extract meaning from relationships across the sequence. Each new input changes the internal representation, so the output reflects contextual reasoning, not a static response to a matching pattern. Markov chains cannot replicate this kind of behavior no matter how many states they include.
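A minimal Python sketch of the "dynamic weighting" idea, for anyone who wants to poke at it. It assumes made-up 2-d embeddings (`EMBED`) and a single attention head with no learned projections, so it is a toy and not a real transformer. It compares what an order-1 Markov chain sees (only the last token) with how the last token's attention weights shift depending on the rest of the context.

```python
import math
from typing import Dict, List, Tuple

# Toy 2-d embeddings; the numbers are invented for illustration only.
EMBED: Dict[str, List[float]] = {
    "bank": [1.0, 0.0],
    "river": [0.9, 0.1],
    "money": [0.0, 1.0],
    "the": [0.1, 0.1],
}


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_weights(context: List[str]) -> List[float]:
    """Scaled dot-product attention of the last token over the whole context."""
    query = EMBED[context[-1]]
    scale = math.sqrt(len(query))
    scores = [dot(query, EMBED[tok]) / scale for tok in context]
    return softmax(scores)


def markov_state(context: List[str], order: int = 1) -> Tuple[str, ...]:
    """A fixed-order Markov chain conditions only on the last `order` tokens."""
    return tuple(context[-order:])


a = ["river", "the", "bank"]
b = ["money", "the", "bank"]

print(markov_state(a), markov_state(b))  # identical state for order 1
print(attention_weights(a))              # weights depend on every token...
print(attention_weights(b))              # ...so they differ between a and b
```

A higher-order chain (here, order 3) would also tell the two contexts apart, but only by giving each distinct history its own table row, which is the combinatorial explosion mentioned upthread. The sketch does not settle whether a fixed-window LLM "counts" as a very high-order Markov chain; it only shows what content-dependent weighting looks like.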

-

So, what you're saying here is that the A in AI actually stands for artificial, and it's not really intelligent and reasoning.

Huh.

-

Because transformer architecture is not equivalent to a probabilistic lookup. A Markov chain assigns probabilities based on a fixed-order state transition, without regard to deeper structure or token relationships. An LLM processes the full context through many layers of non-linear functions and attention heads, each layer dynamically weighting how each token influences every other token.

Although weights do not change during inference, the behavior of the model is not fixed in the way a Markov chain’s state table is. The same model can respond differently to very similar prompts, not just because the inputs differ, but because the model interprets structure, syntax, and intent in ways that are contextually dependent. That is not just longer context; it is fundamentally more expressive computation.

The process is stateless across calls, yes, but it is not blind. All relevant information lives inside the prompt, and the model uses the attention mechanism to extract meaning from relationships across the sequence. Each new input changes the internal representation, so the output reflects contextual reasoning, not a static response to a matching pattern. Markov chains cannot replicate this kind of behavior no matter how many states they include.

An LLM works the same way! Once it's trained, none of what you said applies anymore. The same model can respond differently to the same inputs specifically because, after the LLM does its job, sometimes we intentionally don't pick the most likely token but choose a different one instead. RANDOMLY. Set the temperature to 0 and it will always reply with the same answer. And LLMs also have a fixed-order state transition. Just because you only typed one word doesn't mean that that token is not preceded by n-1 null tokens. The LLM always receives the same number of tokens. It cannot work with an arbitrary number of tokens.

All relevant information "remains in the prompt" only until it slides out of the context window, just like any Markov chain.
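The loop being described (frozen model, a fresh call per token, sampling controlled by temperature, old tokens sliding out of the window) can be sketched in a few lines of Python. `toy_model`, `WINDOW`, and the three-token vocabulary are stand-ins invented for this sketch, not any real LLM or API.

```python
import math
import random
from typing import Dict, List, Optional, Tuple

WINDOW = 4  # hypothetical context length, in tokens
VOCAB = ["a", "b", "c"]


def toy_model(context: Tuple[str, ...]) -> Dict[str, float]:
    """Stand-in for a frozen model: a pure function from context to logits.

    It prefers tokens that appear less often in the window; nothing is
    learned or remembered between calls.
    """
    return {tok: -context.count(tok) + 0.1 * i for i, tok in enumerate(VOCAB)}


def sample(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Temperature 0 means greedy argmax; otherwise sample from the softmax."""
    if temperature == 0:
        return max(logits, key=logits.get)
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(
    prompt: List[str],
    n_tokens: int,
    temperature: float,
    seed: Optional[int] = None,
) -> List[str]:
    rng = random.Random(seed)
    context = list(prompt)
    for _ in range(n_tokens):
        window = tuple(context[-WINDOW:])  # older tokens slide out of view
        logits = toy_model(window)         # fresh call; only the tokens carry over
        context.append(sample(logits, temperature, rng))
    return context


# Greedy decoding repeats itself exactly; sampling at temperature 1 may not.
print(generate(["a"], 6, temperature=0))
print(generate(["a"], 6, temperature=0))
print(generate(["a"], 6, temperature=1.0))
```

In this toy, the only thing that carries from one step to the next is the token list itself, which is the "stateless across calls" part both sides agree on. What they disagree about is whether the function inside `toy_model` being a deep network rather than a lookup table makes a meaningful difference.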

-

"Computer" meaning a mechanical/electro-mechanical/electrical machine wasn't in use until around WWII or after.

Babbage's difference/analytical engines weren't confusing because people called them computers; they didn't.

"On two occasions I have been asked, 'Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?' I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question."

- Charles Babbage

If you give any computer, human or machine, random numbers, it will not give you "correct answers".

It's possible Babbage lacked the social skills to detect sarcasm. We also have several high profile cases of people just trusting LLMs to file legal briefs and official government 'studies' because the LLM "said it was real".

What they mean is that before Turing, "computer" was literally a person's job description. You hand a professional a stack of calculations with some typos, and part of the job is correcting those. A newfangled machine comes along with the same name as the job, and among the first things people are gonna ask about is where it falls short.

Like, if I made a machine called "assistant", it'd be natural for people to point out and ask about all the things a person can do that a machine just never could.

-

I see a lot of misunderstandings in the comments 🫤

This is a pretty important finding for researchers, and it's not obvious by any means. This finding is not showing a problem with LLMs' abilities in general. The issue they discovered is specifically for so-called "reasoning models" that iterate on their answer before replying. It might indicate that the training process is not sufficient for true reasoning.

Most reasoning models are not incentivized to think correctly, and are only rewarded based on their final answer. This research might indicate that's a flaw that needs to be corrected before models can actually reason.

Some AI researchers found it obvious as well, in that they had suspected it and had seen some indications. But it's good to see more data that affirms this assessment.

-

I see a lot of misunderstandings in the comments 🫤

This is a pretty important finding for researchers, and it's not obvious by any means. This finding is not showing a problem with LLMs' abilities in general. The issue they discovered is specifically for so-called "reasoning models" that iterate on their answer before replying. It might indicate that the training process is not sufficient for true reasoning.

Most reasoning models are not incentivized to think correctly, and are only rewarded based on their final answer. This research might indicate that's a flaw that needs to be corrected before models can actually reason.

Yeah these comments have the three hallmarks of Lemmy:

- "AI is just autocomplete" mantras.

- Apple is always synonymous with bad and dumb.

- Rare pockets of really thoughtful comments.

Thanks for being at least the latter.

-

-

-

Meta(Facebook) and Yandex apps silently de-anonymize users’ browsing habits without consent.

Technology 1

1

-

-

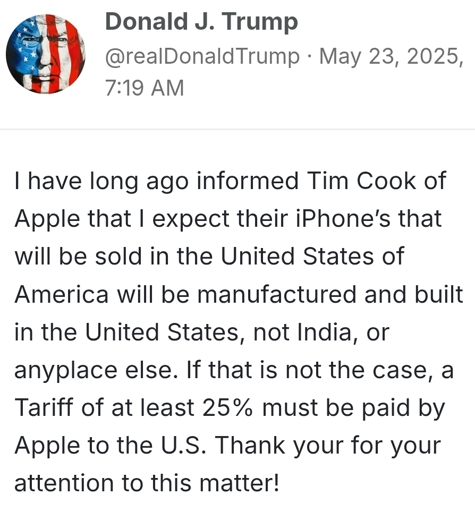

Trump says a 25% tariff "must be paid by Apple" on iPhones not made in the US, says he told Tim Cook long ago that iPhones sold in the US must be made in the US

Technology 2

2

-

-

California Bill Would Require That AT&T And Comcast Make Broadband Affordable For Poor People

Technology 1

1

-