ChatGPT 'got absolutely wrecked' by Atari 2600 in beginner's chess match — OpenAI's newest model bamboozled by 1970s logic

-

GothamChess has a video where he makes ChatGPT play chess against Stockfish. Spoiler: ChatGPT does not do well. It plays okay for a few moves but then the moment it gets in trouble it straight up cheats. Telling it to follow the rules of chess doesn't help.

This sort of gets to the heart of LLM-based "AI". That one example to me really shows that there's no actual reasoning happening inside. It's producing answers that statistically look like answers that might be given based on that input.

For some things it even works. But calling this intelligence is dubious at best.

It plays okay for a few moves but then the moment it gets in trouble it straight up cheats.

Lol. More comparisons to how AI is currently like a young child.

-

Absolutely interested. Thank you for taking the time to share that.

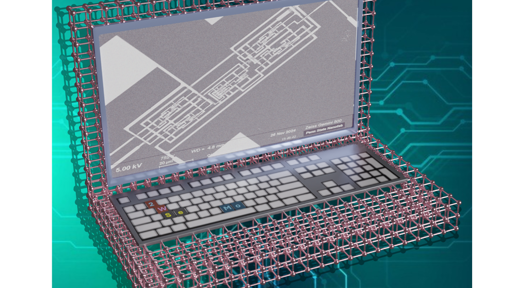

My career path in neural networks began as a researcher working on object detection of cancerous tissue in medical diagnostic imaging. I have since switched to generative models for CAD (architecture, product design, game assets, etc.). I don't really mess about with fine-tuning LLMs.

However, I do self-host my own LLMs as code assistants. Thus, I'm only tangentially involved with the current LLM craze.

But it does interest me, nonetheless!

Here is the main blog post that I remembered: it has a follow-up, a more scientific version, and uses two other articles as a basis, so you might want to dig into what they mention in the introduction.

It is indeed quite a technical discovery, and it still lacks a complete and wider analysis, but it is very interesting because it kinda invalidates the common gut feeling that LLMs are pure lucky randomness.

-

Using an LLM as a chess engine is like using a power tool as a table leg. Pretty funny honestly, but it's obviously not going to be good at it, at least not without scaffolding.

is like using a power tool as a table leg.

Then again, our corporate lords and masters are trying to replace all manner of skilled workers with those same LLM "AI" tools.

And clearly that will backfire on them and they'll eventually scramble to find people with the needed skills, but in the meantime tons of people will have lost their source of income.

-

If you don't play chess, the Atari is probably going to beat you as well.

LLMs are only good at things to the extent that they have been well-trained in the relevant areas. Not just learning to predict text string sequences, but reinforcement learning after that, where a human or some other agent says "this answer is better than that one" enough times in enough of the right contexts. It mimics the way humans learn, which is through repeated and diverse exposure.

If they set up a system to train it against some chess program, or (much simpler) simply gave it a tool call, it would do much better. Tool calling already exists and would be by far the easiest way.
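To make the tool-call idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the JSON message shape, the `best_move` tool name, and the `handle_tool_call` dispatcher are hypothetical and not any vendor's actual API, and the "engine" is just a two-entry lookup table standing in for a real chess program.

```python
import json

START_FEN = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"

# Hypothetical stand-in for a real engine: a tiny lookup table from a
# position (FEN string) to a reply move. A real setup would query an engine.
TOY_ENGINE = {
    START_FEN: "e2e4",
    "rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1": "e7e5",
}


def best_move(fen: str) -> dict:
    """Tool implementation: return a move for the given position, if known."""
    move = TOY_ENGINE.get(fen)
    if move is None:
        return {"error": "position not in the toy lookup table"}
    return {"move": move}


# Registry of tools the host program is willing to run for the model.
TOOLS = {"best_move": best_move}


def handle_tool_call(raw: str) -> str:
    """Dispatch a JSON tool call emitted by the model; return a JSON result."""
    call = json.loads(raw)
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return json.dumps({"error": f"unknown tool: {call.get('name')}"})
    return json.dumps(tool(**call.get("arguments", {})))


# Simulated model output: instead of guessing a move, the model asks the tool.
model_output = json.dumps({"name": "best_move", "arguments": {"fen": START_FEN}})
result = handle_tool_call(model_output)
print(result)
```

The point of the design is that the model only has to decide when to ask; the move itself comes from code that actually knows the rules, so it cannot "cheat" the way free-form text generation can.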

It could also be instructed to write a chess solver program and then run it, at which point it would be on par with the Atari, but it wouldn't compete well with a serious chess solver.

-

If you believe LLMs are not good at anything then there should be relatively little to worry about in the long term, but I am more concerned.

It's not obvious to me that it will backfire for them, because I believe LLMs are good at some things (that is, when they are used correctly, for the correct tasks). Currently they're being applied to far more use cases than they are likely to be good at -- either because they're overhyped or our corporate lords and masters are just experimenting to find out what they're good at and what not. Some of these cases will be like chess, but others will be like code*.

(* not saying LLMs are good at code in general, but for some coding applications I believe they are vastly more efficient than humans, even if a human expert can currently write higher-quality, less buggy code.)

-

Hardly surprising. LLMs aren't -thinking-, they're just shitting out the next token for any given input of tokens.

That's exactly what thinking is, though.

-

2025 Mazda MX-5 Miata 'got absolutely wrecked' by Inflatable Boat in beginner's boat racing match — Mazda's newest model bamboozled by 1930s technology.

-

This is because an LLM is not made for playing chess.

-

I believe LLMs are good at some things

The problem is that they're being used for all the things, including a large number of tasks that they are not well suited to.

-

Yeah, we agree on this point. In the short term it's a disaster. In the long term, assuming AI's capabilities don't continue to improve at the rate they have been, our corporate overlords will only replace the people it's actually worth replacing with AI.

-

That's exactly what thinking is, though.

An LLM is an ordered series of parameterized / weighted nodes which are fed a bunch of tokens and, millions of calculations later, generate the next token, which is appended to the input before the process repeats. It's like turning a handle on some complex Babbage-esque machine. LLMs use a tiny bit of randomness ("temperature") when choosing the next token so the responses are not identical each time.

But it is not thinking. Not even remotely so. It's a simulacrum. If you want to see this, run ollama with the temperature set to 0 e.g.

    ollama run gemma3:4b
    >>> /set parameter temperature 0
    >>> what is a leaf

You will get the same answer every single time.
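The role of temperature can also be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how ollama or any particular model implements sampling: the four-word vocabulary, the scores, and the function name `sample_next_token` are all made up for the example.

```python
import math
import random


def sample_next_token(logits, temperature, rng):
    """Pick a token index from raw scores (logits) at a given temperature."""
    if temperature <= 0:
        # Temperature 0 is treated as greedy decoding: always take the
        # highest-scoring token, so there is no randomness at all.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Otherwise scale the scores, apply a numerically stable softmax,
    # and draw one token in proportion to the resulting weights.
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


# Made-up vocabulary and scores, purely for illustration.
vocab = ["leaf", "tree", "green", "plant"]
logits = [2.0, 1.5, 0.3, 1.9]

rng = random.Random()
greedy = {vocab[sample_next_token(logits, 0.0, rng)] for _ in range(100)}
sampled = {vocab[sample_next_token(logits, 1.0, rng)] for _ in range(100)}

print(greedy)   # always the single top-scoring token
print(sampled)  # usually several different tokens
```

With the temperature at 0 the same input always yields the same token, which is the behaviour the ollama session above demonstrates; any variety in normal use comes from the random draw, not from the network itself.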

-

I know what an LLM is doing. You don't know what your brain is doing.