Judge Rules Training AI on Authors' Books Is Legal But Pirating Them Is Not

-

FTA:

Anthropic warned against “[t]he prospect of ruinous statutory damages—$150,000 times 5 million books”: that would mean $750 billion.

So part of their argument is actually that they stole so much that it would be impossible for them/anyone to pay restitution, therefore we should just let them off the hook.

This version of "too big to fail" is "too big a criminal to pay the fines."

How about we lock them up instead? All of em.

-

I will admit this is not a simple case. That being said, if you've lived in the US (and are aware of local mores) but you're not American, you will have a different perspective on the US judicial system.

How is right to learn even relevant here? An LLM by definition cannot learn.

Where did I say analyzing a text should be restricted?

How is right to learn even relevant here? An LLM by definition cannot learn.

I literally quoted a relevant part of the judge's decision:

But Authors cannot rightly exclude anyone from using their works for training or learning as such.

-

This was a preliminary judgment; he didn't actually rule on the piracy part. He deferred that part to a full trial.

The part about training being a copyright violation, though, he ruled against.

Legally that is the right call.

Ethically and rationally, however, it’s not. But the law is frequently unethical and irrational, especially in the US.

-

But AFAIK they didn't actually acquire the legal rights even to read the stuff they trained on. There were definitely cases of pirated books used to train models.

Yes, and that part of the case is going to trial. This was a preliminary judgment specifically about the training itself.

-

“I torrented all this music and movies to train my local ai models”

Yeah, nice precedent

-

How is right to learn even relevant here? An LLM by definition cannot learn.

I literally quoted a relevant part of the judge's decision:

But Authors cannot rightly exclude anyone from using their works for training or learning as such.

I am not a lawyer. I am talking about reality.

What does an LLM application (or training processes associated with an LLM application) have to do with the concept of learning? Where is the learning happening? Who is doing the learning?

Who is stopping the individuals at the LLM company from learning or analysing a given book?

From my experience living in the US, this is pretty standard American-style corruption. Lots of pomp and bombast and roleplay of sorts, but the outcome is no different from any other country that is in deep need of judicial and anti-corruption reform.

-

This is an easy case. Using published works to train AI without paying for the right to do so is piracy. The judge making this determination is an idiot.

The judge making this determination is an idiot.

The judge hasn't ruled on the piracy question yet. The only thing that the judge has ruled on is, if you legally own a copy of a book, then you can use it for a variety of purposes, including training an AI.

"But they didn't own the books!"

Right. That's the part that's still going to trial.

-

“I torrented all this music and movies to train my local ai models”

I also train this guy's local AI models.

-

brb, training a 1-layer neural net so i can ask it to play Pixar films

-

FTA:

Anthropic warned against “[t]he prospect of ruinous statutory damages—$150,000 times 5 million books”: that would mean $750 billion.

So part of their argument is actually that they stole so much that it would be impossible for them/anyone to pay restitution, therefore we should just let them off the hook.

Hold my beer.

-

FTA:

Anthropic warned against “[t]he prospect of ruinous statutory damages—$150,000 times 5 million books”: that would mean $750 billion.

So part of their argument is actually that they stole so much that it would be impossible for them/anyone to pay restitution, therefore we should just let them off the hook.

Ahh, can't wait for hedge funds and the like to use this defense next.

-

I am training my model on these 100,000 movies your honor.

-

People, ML AIs are not human. It's a machine. Why do you want to give it human rights?

-

I am training my model on these 100,000 movies your honor.

Trains model to change one pixel per frame with malicious intent

-

It's pretty simple as I see it. You treat AI like a person. A person needs to go through legal channels to consume material, so piracy for AI training is as illegal as it would be for personal consumption. Consuming legally possessed copyrighted material for "inspiration" or "study" is also fine for a person, so it is fine for AI training as well. Commercializing derivative works that infringe on copyright is illegal for a person, so it should be illegal for an AI as well. All produced materials, even those inspired by another piece of media, are permissible if not monetized; otherwise they need to be suitably transformative. That line can be hard to draw even when AI is not involved, but that is the legal standard for people, so it should be for AI as well. If I browse through DeviantArt and learn to draw similarly to my favorite artists from their publicly viewable works, and make a legally distinct cartoon mouse by hand in a style that is similar to someone else's, and then I sell prints of that work, that is legal. The same should be the case for AI.

But! Scrutiny for AI should be much stricter given the inherent lack of true transformative creativity. And any AI that has used pirated materials should be penalized either by massive fines or by wiping their training and starting over with legally licensed or purchased or otherwise public domain materials only.

But AI is not a person. It's a very weird idea to treat it like a person.

-

But AI is not a person. It's a very weird idea to treat it like a person.

No, it's a tool, created and used by people. You're not treating the tool like a person. Tools are obviously not subject to laws, can't break laws, etc. Their usage is subject to laws. If you use a tool to intentionally, knowingly, or negligently do things that would be illegal for you to do without the tool, then that's still illegal. The same goes for accepting money to give others the privilege of doing those illegal things with your tool, without any attempt at moderating the things that you know are happening. You can argue that maybe the law should be more strict with AI usage than with a human if you have a good legal justification for it, but there's really no way to justify being less strict.

-

“I torrented all this music and movies to train my local ai models”

That's legal, just don't look at them or enjoy them.

-

Bangs ~~gabble~~ gavel. Gets sack with dollar sign.

“Oh good, my laundry is done”

-

brb, training a 1-layer neural net so i can ask it to play Pixar films

Good luck fitting it in RAM lol.

-

Fuck the AI nut suckers and fuck this judge.

-

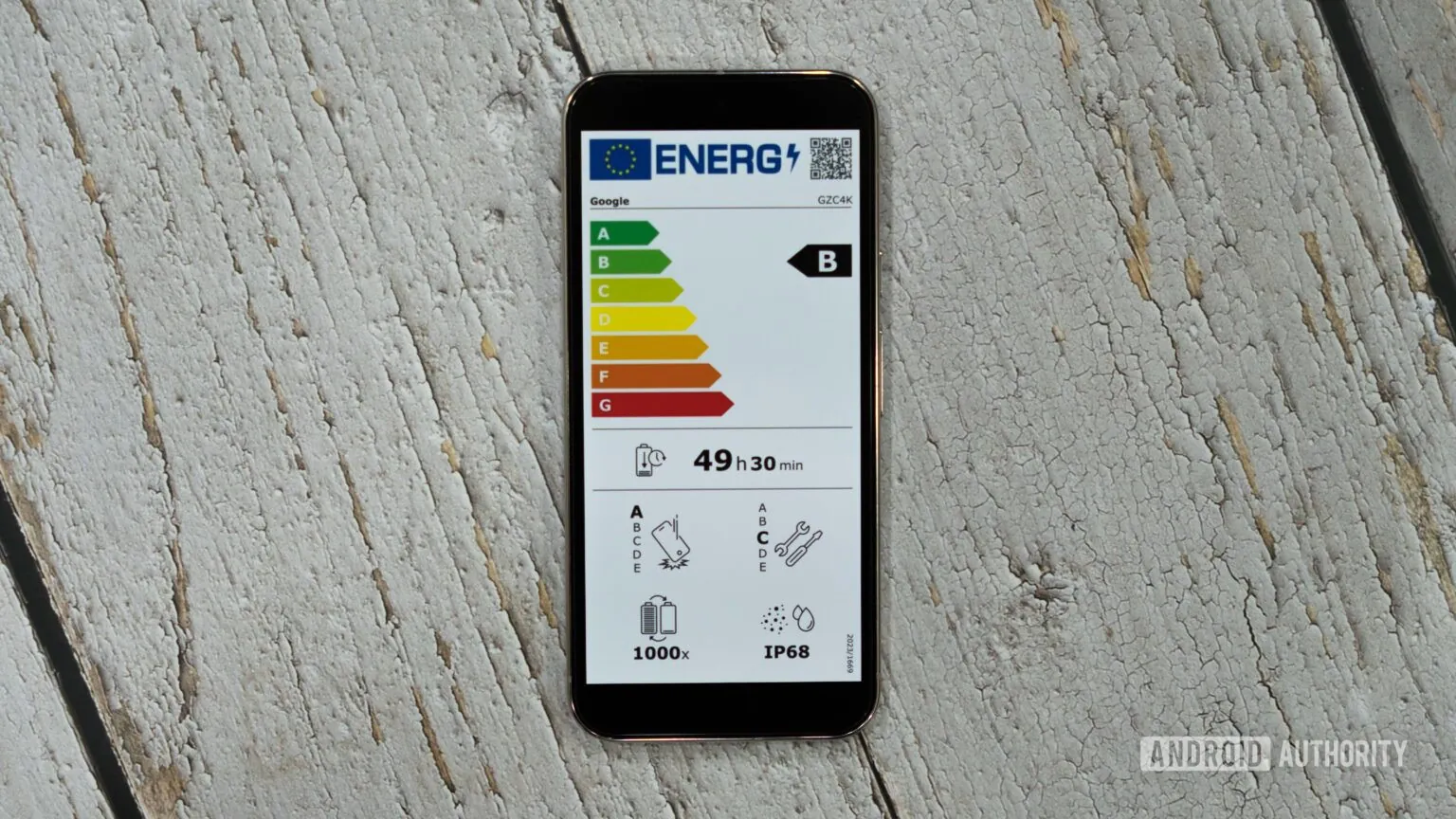

Samsung phones can survive twice as many charges as Pixel and iPhone, according to EU data

Technology 1

1

-

DOJ Announces Coordinated, Nationwide Actions to Combat North Korean Remote Information Technology Workers’ Illicit Revenue Generation Schemes

Technology 1

1

-

-

-

-

Telegram, the FSB, and the Man in the Middle: The technical infrastructure that underpins Telegram is controlled by a man whose companies have collaborated with Russian intelligence services.

Technology 1

1

-

-