I'm looking for an article showing that LLMs don't know how they work internally

-

Can’t help but here’s a rant on people asking LLMs to “explain their reasoning” which is impossible because they can never reason (not meant to be attacking OP, just attacking the “LLMs think and reason” people and companies that spout it):

LLMs are just matrix math to complete the most likely next word. They don’t know anything and can’t reason.

Anything you read or hear about LLMs or “AI” getting “asked questions” or “explain its reasoning” or talking about how they’re “thinking” is just AI propaganda to make you think they’re doing something LLMs literally can’t do but people sure wish they could.

In this case it sounds like people who don’t understand how LLMs work eating that propaganda up and approaching LLMs like there’s something to talk to or discern from.

If you waste egregiously high amounts of gigawatts to put everything that’s ever been typed into matrices you can operate on, you get a facsimile of the human knowledge that went into typing all of that stuff.

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

TLDR; LLMs can never think or reason, anyone talking about them thinking or reasoning is bullshitting, they utilize almost everything that’s ever been typed to give (occasionally) reasonably useful outputs that are the most basic bitch shit because that’s the most likely next word at the cost of environmental disaster

It's true that LLMs aren't "aware" of what internal steps they are taking, so asking an LLM how they reasoned out an answer will just output text that statistically sounds right based on its training set, but to say something like "they can never reason" is provably false.

Its obvious that you have a bias and desperately want reality to confirm it, but there's been significant research and progress in tracing internals of LLMs, that show logic, planning, and reasoning.

EDIT: lol you can downvote me but it doesn't change evidence based research

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

Developing a AAA video game has a higher carbon footprint than training an LLM, and running inference uses significantly less power than playing that same video game.

-

I found the aeticle in a post on the fediverse, and I can't find it anymore.

The reaserchers asked a simple mathematical question to an LLM ( like 7+4) and then could see how internally it worked by finding similar paths, but nothing like performing mathematical reasoning, even if the final answer was correct.

Then they asked the LLM to explain how it found the result, what was it's internal reasoning. The answer was detailed step by step mathematical logic, like a human explaining how to perform an addition.

This showed 2 things:

-

LLM don't "know" how they work

-

the second answer was a rephrasing of original text used for training that explain how math works, so LLM just used that as an explanation

I think it was a very interesting an meaningful analysis

Can anyone help me find this?

EDIT: thanks to @theunknownmuncher

@lemmy.world

https://www.anthropic.com/research/tracing-thoughts-language-model its this oneEDIT2: I'm aware LLM dont "know" anything and don't reason, and it's exactly why I wanted to find the article. Some more details here: https://feddit.it/post/18191686/13815095

There was a study by Anthropic, the company behind Claude, that developed another AI that they used as a sort of "brain scanner" for the LLM, in the sense that allowed them to see sort of a model of how the LLM "internal process" worked

-

-

Can’t help but here’s a rant on people asking LLMs to “explain their reasoning” which is impossible because they can never reason (not meant to be attacking OP, just attacking the “LLMs think and reason” people and companies that spout it):

LLMs are just matrix math to complete the most likely next word. They don’t know anything and can’t reason.

Anything you read or hear about LLMs or “AI” getting “asked questions” or “explain its reasoning” or talking about how they’re “thinking” is just AI propaganda to make you think they’re doing something LLMs literally can’t do but people sure wish they could.

In this case it sounds like people who don’t understand how LLMs work eating that propaganda up and approaching LLMs like there’s something to talk to or discern from.

If you waste egregiously high amounts of gigawatts to put everything that’s ever been typed into matrices you can operate on, you get a facsimile of the human knowledge that went into typing all of that stuff.

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

TLDR; LLMs can never think or reason, anyone talking about them thinking or reasoning is bullshitting, they utilize almost everything that’s ever been typed to give (occasionally) reasonably useful outputs that are the most basic bitch shit because that’s the most likely next word at the cost of environmental disaster

I've read that article. They used something they called an "MRI for AIs", and checked e.g. how an AI handled math questions, and then asked the AI how it came to that answer, and the pathways actually differed. While the AI talked about using a textbook answer, it actually did a different approach. That's what I remember of that article.

But yes, it exists, and it is science, not TicTok

-

I'm aware of this and agree but:

-

I see that asking how an LLM got to their answers as a "proof" of sound reasoning has become common

-

this new trend of "reasoning" models, where an internal conversation is shown in all its steps, seems to be based on this assumption of trustable train of thoughts. And given the simple experiment I mentioned, it is extremely dangerous and misleading

-

take a look at this video: https://youtube.com/watch?v=Xx4Tpsk_fnM : everything is based on observing and directing this internal reasoning, and these guys are computer scientists. How can they trust this?

So having a good written article at hand is a good idea imho

I only follow some YouTubers like Digital Spaceport but there has been a lot of progress from years ago when LLM's were only predictive. They now have an inductive engine attached to the LLM to provide logic guard rails.

-

-

Can’t help but here’s a rant on people asking LLMs to “explain their reasoning” which is impossible because they can never reason (not meant to be attacking OP, just attacking the “LLMs think and reason” people and companies that spout it):

LLMs are just matrix math to complete the most likely next word. They don’t know anything and can’t reason.

Anything you read or hear about LLMs or “AI” getting “asked questions” or “explain its reasoning” or talking about how they’re “thinking” is just AI propaganda to make you think they’re doing something LLMs literally can’t do but people sure wish they could.

In this case it sounds like people who don’t understand how LLMs work eating that propaganda up and approaching LLMs like there’s something to talk to or discern from.

If you waste egregiously high amounts of gigawatts to put everything that’s ever been typed into matrices you can operate on, you get a facsimile of the human knowledge that went into typing all of that stuff.

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

TLDR; LLMs can never think or reason, anyone talking about them thinking or reasoning is bullshitting, they utilize almost everything that’s ever been typed to give (occasionally) reasonably useful outputs that are the most basic bitch shit because that’s the most likely next word at the cost of environmental disaster

People don't understand what "model" means. That's the unfortunate reality.

-

It's true that LLMs aren't "aware" of what internal steps they are taking, so asking an LLM how they reasoned out an answer will just output text that statistically sounds right based on its training set, but to say something like "they can never reason" is provably false.

Its obvious that you have a bias and desperately want reality to confirm it, but there's been significant research and progress in tracing internals of LLMs, that show logic, planning, and reasoning.

EDIT: lol you can downvote me but it doesn't change evidence based research

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

Developing a AAA video game has a higher carbon footprint than training an LLM, and running inference uses significantly less power than playing that same video game.

Too deep on the AI propaganda there, it’s completing the next word. You can give the LLM base umpteen layers to make complicated connections, still ain’t thinking.

The LLM corpos trying to get nuclear plants to power their gigantic data centers while AAA devs aren’t trying to buy nuclear plants says that’s a straw man and you simultaneously also are wrong.

Using a pre-trained and memory-crushed LLM that can run on a small device won’t take up too much power. But that’s not what you’re thinking of. You’re thinking of the LLM only accessible via ChatGPT’s api that has a yuge context length and massive matrices that needs hilariously large amounts of RAM and compute power to execute. And it’s still a facsimile of thought.

It’s okay they suck and have very niche actual use cases - maybe it’ll get us to something better. But they ain’t gold, they ain't smart, and they ain’t worth destroying the planet.

-

Too deep on the AI propaganda there, it’s completing the next word. You can give the LLM base umpteen layers to make complicated connections, still ain’t thinking.

The LLM corpos trying to get nuclear plants to power their gigantic data centers while AAA devs aren’t trying to buy nuclear plants says that’s a straw man and you simultaneously also are wrong.

Using a pre-trained and memory-crushed LLM that can run on a small device won’t take up too much power. But that’s not what you’re thinking of. You’re thinking of the LLM only accessible via ChatGPT’s api that has a yuge context length and massive matrices that needs hilariously large amounts of RAM and compute power to execute. And it’s still a facsimile of thought.

It’s okay they suck and have very niche actual use cases - maybe it’ll get us to something better. But they ain’t gold, they ain't smart, and they ain’t worth destroying the planet.

it's completing the next word.

Facts disagree, but you've decided to live in a reality that matches your biases despite real evidence, so whatever

-

Can’t help but here’s a rant on people asking LLMs to “explain their reasoning” which is impossible because they can never reason (not meant to be attacking OP, just attacking the “LLMs think and reason” people and companies that spout it):

LLMs are just matrix math to complete the most likely next word. They don’t know anything and can’t reason.

Anything you read or hear about LLMs or “AI” getting “asked questions” or “explain its reasoning” or talking about how they’re “thinking” is just AI propaganda to make you think they’re doing something LLMs literally can’t do but people sure wish they could.

In this case it sounds like people who don’t understand how LLMs work eating that propaganda up and approaching LLMs like there’s something to talk to or discern from.

If you waste egregiously high amounts of gigawatts to put everything that’s ever been typed into matrices you can operate on, you get a facsimile of the human knowledge that went into typing all of that stuff.

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

TLDR; LLMs can never think or reason, anyone talking about them thinking or reasoning is bullshitting, they utilize almost everything that’s ever been typed to give (occasionally) reasonably useful outputs that are the most basic bitch shit because that’s the most likely next word at the cost of environmental disaster

How would you prove that someone or something is capable of reasoning or thinking?

-

Can’t help but here’s a rant on people asking LLMs to “explain their reasoning” which is impossible because they can never reason (not meant to be attacking OP, just attacking the “LLMs think and reason” people and companies that spout it):

LLMs are just matrix math to complete the most likely next word. They don’t know anything and can’t reason.

Anything you read or hear about LLMs or “AI” getting “asked questions” or “explain its reasoning” or talking about how they’re “thinking” is just AI propaganda to make you think they’re doing something LLMs literally can’t do but people sure wish they could.

In this case it sounds like people who don’t understand how LLMs work eating that propaganda up and approaching LLMs like there’s something to talk to or discern from.

If you waste egregiously high amounts of gigawatts to put everything that’s ever been typed into matrices you can operate on, you get a facsimile of the human knowledge that went into typing all of that stuff.

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

TLDR; LLMs can never think or reason, anyone talking about them thinking or reasoning is bullshitting, they utilize almost everything that’s ever been typed to give (occasionally) reasonably useful outputs that are the most basic bitch shit because that’s the most likely next word at the cost of environmental disaster

Who has claimed that LLMs have the capacity to reason?

-

Who has claimed that LLMs have the capacity to reason?

The study being referenced explains in detail why they can’t. So I’d say it’s Anthropic who stated LLMs don’t have the capacity to reason, and that’s what we’re discussing.

The popular media tends to go on and on about conflating AI with AGI and synthetic reasoning.

-

People don't understand what "model" means. That's the unfortunate reality.

They walk down runways and pose for magazines. Do they reason? Sometimes.

-

It's true that LLMs aren't "aware" of what internal steps they are taking, so asking an LLM how they reasoned out an answer will just output text that statistically sounds right based on its training set, but to say something like "they can never reason" is provably false.

Its obvious that you have a bias and desperately want reality to confirm it, but there's been significant research and progress in tracing internals of LLMs, that show logic, planning, and reasoning.

EDIT: lol you can downvote me but it doesn't change evidence based research

It’d be impressive if the environmental toll making the matrices and using them wasn’t critically bad.

Developing a AAA video game has a higher carbon footprint than training an LLM, and running inference uses significantly less power than playing that same video game.

but there's been significant research and progress in tracing internals of LLMs, that show logic, planning, and reasoning.

would there be a source for such research?

-

I found the aeticle in a post on the fediverse, and I can't find it anymore.

The reaserchers asked a simple mathematical question to an LLM ( like 7+4) and then could see how internally it worked by finding similar paths, but nothing like performing mathematical reasoning, even if the final answer was correct.

Then they asked the LLM to explain how it found the result, what was it's internal reasoning. The answer was detailed step by step mathematical logic, like a human explaining how to perform an addition.

This showed 2 things:

-

LLM don't "know" how they work

-

the second answer was a rephrasing of original text used for training that explain how math works, so LLM just used that as an explanation

I think it was a very interesting an meaningful analysis

Can anyone help me find this?

EDIT: thanks to @theunknownmuncher

@lemmy.world

https://www.anthropic.com/research/tracing-thoughts-language-model its this oneEDIT2: I'm aware LLM dont "know" anything and don't reason, and it's exactly why I wanted to find the article. Some more details here: https://feddit.it/post/18191686/13815095

I don't know how I work. I couldn't tell you much about neuroscience beyond "neurons are linked together and somehow that creates thoughts". And even when it comes to complex thoughts, I sometimes can't explain why. At my job, I often lean on intuition I've developed over a decade. I can look at a system and get an immediate sense if it's going to work well, but actually explaining why or why not takes a lot more time and energy. Am I an LLM?

-

-

Who has claimed that LLMs have the capacity to reason?

More than enough people who claim to know how it works think it might be "evolving" into a sentient being inside it's little black box. Example from a conversation I gave up on...

https://sh.itjust.works/comment/18759960 -

I found the aeticle in a post on the fediverse, and I can't find it anymore.

The reaserchers asked a simple mathematical question to an LLM ( like 7+4) and then could see how internally it worked by finding similar paths, but nothing like performing mathematical reasoning, even if the final answer was correct.

Then they asked the LLM to explain how it found the result, what was it's internal reasoning. The answer was detailed step by step mathematical logic, like a human explaining how to perform an addition.

This showed 2 things:

-

LLM don't "know" how they work

-

the second answer was a rephrasing of original text used for training that explain how math works, so LLM just used that as an explanation

I think it was a very interesting an meaningful analysis

Can anyone help me find this?

EDIT: thanks to @theunknownmuncher

@lemmy.world

https://www.anthropic.com/research/tracing-thoughts-language-model its this oneEDIT2: I'm aware LLM dont "know" anything and don't reason, and it's exactly why I wanted to find the article. Some more details here: https://feddit.it/post/18191686/13815095

"Researchers" did a thing I did the first day I was actually able to ChatGPT and came to a conclusion that is in the disclaimers on the ChatGPT website. Can I get paid to do this kind of "research?" If you've even read a cursory article about how LLMs work you'd know that asking them what their reasoning is for anything doesn't work because the answer would just always be an explanation of how LLMs work generally.

-

-

How would you prove that someone or something is capable of reasoning or thinking?

You can prove it’s not by doing some matrix multiplication and seeing its matrix multiplication. Much easier way to go about it

-

it's completing the next word.

Facts disagree, but you've decided to live in a reality that matches your biases despite real evidence, so whatever

It’s literally tokens. Doesn’t matter if it completes the next word or next phrase, still completing the next most likely token

can’t think can’t reason can witch’s brew facsimile of something done before

can’t think can’t reason can witch’s brew facsimile of something done before -

but there's been significant research and progress in tracing internals of LLMs, that show logic, planning, and reasoning.

would there be a source for such research?

https://www.anthropic.com/research/tracing-thoughts-language-model for one, the exact article OP was asking for

-

https://www.anthropic.com/research/tracing-thoughts-language-model for one, the exact article OP was asking for

but this article espouses that llms do the opposite of logic, planning, and reasoning?

quoting:

Claude, on occasion, will give a plausible-sounding argument designed to agree with the user rather than to follow logical steps. We show this by asking it for help on a hard math problem while giving it an incorrect hint. We are able to “catch it in the act” as it makes up its fake reasoning,

are there any sources which show that llms use logic, conduct planning, and reason (as was asserted in the 2nd level comment)?

-

They walk down runways and pose for magazines. Do they reason? Sometimes.

But why male models?

-

-

-

In the Sweltering Southwest, Planting Solar Panels in Farmland Can Help Both Photovoltaics and Crops - Inside Climate News

Technology 1

1

-

-

-

-

1

1

-

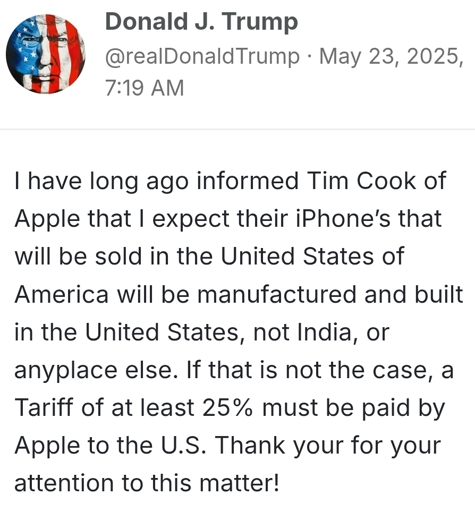

Trump says a 25% tariff "must be paid by Apple" on iPhones not made in the US, says he told Tim Cook long ago that iPhones sold in the US must be made in the US

Technology 2

2