Duolingo CEO tries to walk back AI-first comments, fails

-

I gotta say, the icon of Duo looking like this, plus snot coming out of one of its nostrils, is what did it for me. There's no way to turn off this "feature" either. I'm not easily grossed out, so seeing it once or twice would have given me a chuckle. Seeing it every time I opened my phone? Nope.

I knew right there and then that I wouldn't be renewing my subscription (there were other reasons, but that one sped up the decision).

wait what... they made the official icon look miserable and added snot? wtf?

-

There should be a federated system for blocking IP ranges that other server operators within a chain of trust have already identified as belonging to crawlers. A bit like fediseer.com, but possibly more decentralized.

(Here’s another advantage of Markov chain maze generators like Nepenthes: Even when crawlers recognize that they have been served garbage and they delete it, one still has obtained highly reliable evidence that the requesting IPs are crawlers.)

Also, whenever one is only partially confident that an IP range belongs to a crawler, instead of blocking it outright one can serve proof-of-work tasks (à la Anubis) with a complexity proportional to that confidence. This could also help keep crawlers somewhat in the dark about whether they’ve been put on a blacklist.
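A rough Python sketch of the confidence-scaled proof-of-work idea. It assumes a hashcash-style SHA-256 puzzle (find a nonce whose hash starts with a given number of zero bits); this is not Anubis's actual challenge format, and `MAX_BITS`, `difficulty_for`, `new_challenge`, `solve`, and `verify` are hypothetical names. Because each extra zero bit doubles the expected work, the bit count is taken as the log of the target work, so the expected number of hashes stays roughly proportional to the confidence (within a factor of two, from rounding up to whole bits).

```python
import hashlib
import math
import os

# Hypothetical upper bound: at full confidence the client must find a
# hash with this many leading zero bits (about 2**20 attempts on average).
MAX_BITS = 20


def difficulty_for(confidence: float, max_bits: int = MAX_BITS) -> int:
    """Map crawler confidence (0.0 to 1.0) to a number of leading zero bits.

    Expected work is about 2**bits hashes, so taking log2 of the target work
    keeps the work roughly proportional to the confidence.
    """
    confidence = min(max(confidence, 0.0), 1.0)
    target_work = max(1.0, confidence * 2 ** max_bits)
    return math.ceil(math.log2(target_work))


def new_challenge() -> bytes:
    """Random per-request challenge so solutions can't be reused."""
    return os.urandom(16)


def leading_zero_bits(digest: bytes) -> int:
    """Count the zero bits at the start of a hash digest."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()


def verify(challenge: bytes, nonce: int, bits: int) -> bool:
    """Server side: a single hash checks the client's answer."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= bits


def solve(challenge: bytes, bits: int) -> int:
    """Client side: brute-force search for a nonce that meets the difficulty."""
    nonce = 0
    while not verify(challenge, nonce, bits):
        nonce += 1
    return nonce


if __name__ == "__main__":
    challenge = new_challenge()
    bits = difficulty_for(0.3)  # e.g. a range we are only 30% sure about
    nonce = solve(challenge, bits)
    print(bits, nonce, verify(challenge, nonce, bits))
```

The asymmetry is the point: checking costs the server one hash, while the client pays more the more suspicious its IP range looks, and a client that can't tell an easy puzzle from a hard one learns little about where it stands on the list.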

Did you comment on the wrong thread?

-

I want every headline to end with "..., fails"

"Amir, on his way to become successful in life...."

-

TL;DR: Skip the apps unless they're part of a bigger in-person course. Prefer reputable sources like Pimsleur and Mango Languages. If you're in no rush, get graded readers and watch a lot of YouTube, podcasts, etc.

OK, so here are my two cents on learning languages and the whole category of learning apps. They are all flawed in some major way or another, but mostly the problem is pacing.

Teaching absolute beginners is easy. They know nothing, so anything you show them is progress. The real difficulty in learning a language is finding material suited to your level of understanding, such that you understand most of it but still find new things to learn. This is known as comprehensible input. The problem with most apps is that they can't detect the student's progress and adapt the content accordingly. So they typically go way too slow, or sometimes too fast, leaving the student frustrated and stalling their learning.

Jumping into any app with some prior knowledge is also torture. It's known as the valley of despair: the beginner content is boring now that you know a bit, but the intermediate level is still too big a jump.

My advice is to skip language learning apps. The "motivation via gamification" hypothesis is flawed and lacks nuance and understanding of behavioral science. People don't stop studying for lack of tokens, gems, streaks or achievement badges. They stop because the content itself is uninteresting and bores them. Sure, the celebrations and streaks work at first, but they usually lose their effect through something known as reinforcement depreciation: the same stimulus shown too much or too frequently stops being gratifying. The biggest reward for learning a language is actually using it.

One method known to work is reading graded readers. Watch a lot of YouTube, podcasts, and social media in the target language (avoid the language-learning influencers), and listen to native influencers talking about topics you care about. Books work, in-person courses work, and learning apps are good for getting you started from absolute zero. But most learning happens in what you do in your everyday life. Using the language is the most effective way of getting good at it. Everything else is just an excuse to use it.

...or join a reputable language learning academy and go to class in person.

I know this isn't for everyone, but neither is self-learning online.

-

AI is social cancer

It's a lie told by marketing companies that have gaslit artists into automating their creativity and gaslit governments into automating fascism.

AI is social lung cancer. Behind social media, which is social bone cancer metastasized.

-

crazy how fast they ruined the reputation of this company.

they lost all the good will in like a month.

Twitter enters the chat

Twitter was already going downhill hard when Musk bought the place.

-

wait what... they made the official icon look miserable and added snot? wtf?

Yup! Google "Duolingo snot icon" and it will be the first image result.

Or you could visit the orange site and check it out there:

-

FTFY: Pretends to walk back his statements. Fools no one.

except his AI.

-

I'm mainly interested in Japanese, so I'm currently looking at https://www.renshuu.org/ . Beyond just throwing random stuff at you, it has some more in-depth training, explanations of things (something that never happened in Duolingo), additional hints for the alphabets including some mnemonics, and years of dedicated experience in the language. I can't tell how it will feel long-term, but so far even having some basic explanations is a great improvement.

I'm not gonna lie, I stopped using Renshuu because I had other resources at hand and because it just looks so rough, but I think it's great for a free resource. The fact that they offer a shit ton of vocab/grammar/kanji study sets for free, plus community-built ones, is reminiscent of Anki, and Renshuu also uses an SRS (spaced repetition system). Lots of customization for reviews and answer options.

It's certainly nowhere near as eye-catching and addictive as Duolingo, so beginners are probably more likely to give up than if they used Duolingo. But honestly, Duolingo lost sight of what learning a language was supposed to be about anyway.

Sometimes I feel I should pick it back up, but at this point I want to focus more on reading/watching content for practice/learning.

-

Yup! Google "Duolingo snot icon" and it will be the first image result.

Or you could visit the orange site and check it out there:

holy fuck

-

FTFY: Pretends to walk back his statements. Fools no one.

So...tries and fails?

-

How do these people become CEOs? They're as thick as several short planks nailed together.

Firstly, every single company that has tried to replace its employees with AI has always ended up having issues. Secondly, even if that weren't the case, people are not going to be happy about it, so it's not something you should brag about publicly.

If you're going to replace all of your employees with AI, just do it quietly. That way, if it fails, it's not a public failure, and if it succeeds (it won't), then you talk about it.

People keep forgetting that these companies’ product is stock price, not whatever they’re advertising at any given moment.

Their “CEOs” have gotten sloppy because the grift has gotten so easy they naturally assume everyone is in on it. If everyone is in on the grift, there’s no need to lie about it.

-

crazy how fast they ruined the reputation of this company. just a couple months ago, duo mascot and Duolingo streaks were cool and fun. they had a good thing going. but now it's just another shit tech company again. they lost all the good will in like a month.

I uninstalled yesterday, good riddance Duo.

-

- Following backlash to statements that Duolingo will be AI-first, threatening jobs in the process, CEO Luis von Ahn has tried to walk back his statement.

- Unfortunately, the CEO doesn’t walk back any of the key points he originally outlined, choosing instead to try, and fail, to placate the maddening crowd.

- Unfortunately, the PR team may soon be replaced by AI, as this latest statement has done anything but instil confidence in the firm’s users.

Duolingo is one of those overrated fad apps that turn out to be exquisitely trash once you force yourself to use them just to say "Hey, I have learned a language!". Honestly, it was pretty funny seeing Duolingo comment on videos and all that, despite it feeling forced. Replacing it with AI just signals Duolingo's slow and painful demise.

-

wait what... they made the official icon look miserable and added snot? wtf?

I think on iOS they added a feature where the icon changes based on the days you didn't use Duolingo. Honestly, at this point I think it speaks to the sorry state of their company more than anything.

-

-

Companies That Tried to Save Money With AI Are Now Spending a Fortune Hiring People to Fix Its Mistakes

Technology 1

1

-

-

To land Meta’s massive $10 billion data center, Louisiana pulled out all the stops. Will it be worth it?

Technology 1

1

-

Millions of Americans Who Have Waited Decades for Fast Internet Connections Will Keep Waiting After the Trump Administration Threw a $42 Billion High-Speed Internet Program Into Disarray.

Technology 1

1

-

Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task

Technology 1

1

-

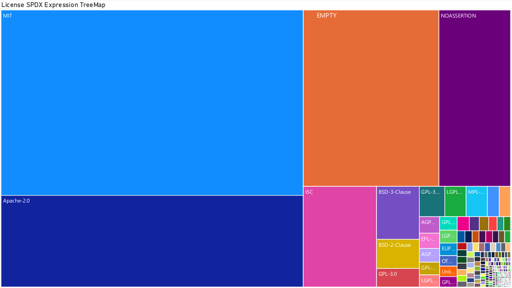

[Open question] Why are so many open-source projects, particularly projects written in Rust, MIT licensed?

Technology 1

1

-